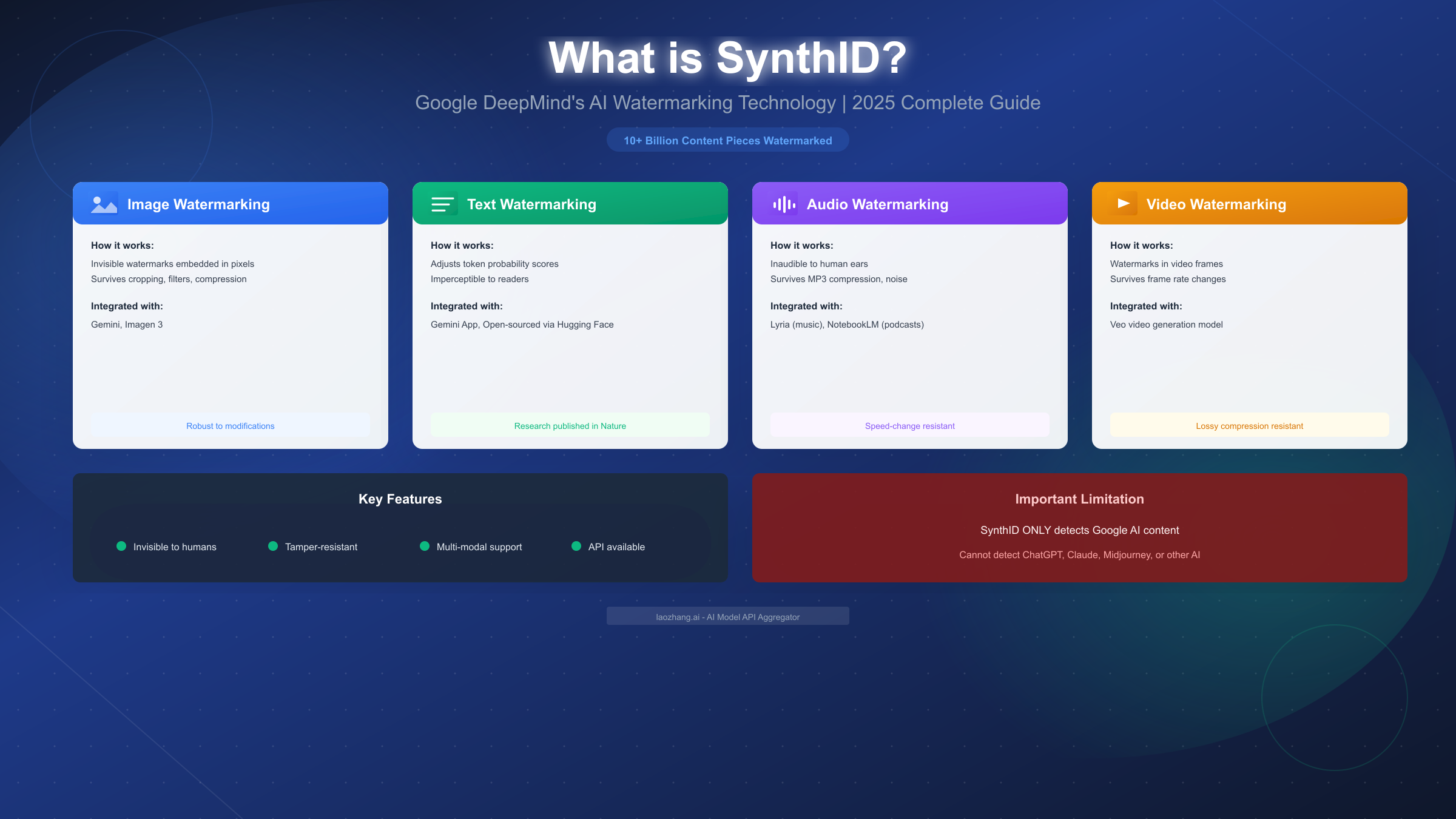

SynthID represents a significant advancement in AI content authentication, developed by Google DeepMind to address the growing challenge of distinguishing AI-generated content from human-created material. First released in 2023 and significantly expanded in 2025, this technology has already watermarked over 10 billion pieces of content across Google's AI ecosystem. Unlike traditional watermarking methods that add visible logos or easily stripped metadata, SynthID embeds invisible, tamper-resistant signals directly into the content itself, whether that content is an image, text, audio, or video. However, it's crucial to understand upfront that SynthID only detects content generated by Google's AI tools; it cannot identify outputs from ChatGPT, Claude, Midjourney, or other non-Google AI systems.

Quick Answer: What is SynthID and Why Does It Matter?

SynthID is Google DeepMind's invisible watermarking technology designed to identify AI-generated content with high accuracy while remaining imperceptible to humans. The technology addresses one of the most pressing challenges in the AI era: how do we know if content was created by an AI or a human? This question has profound implications for journalism, academic integrity, content moderation, and combating misinformation.

The core value proposition of SynthID lies in its approach to watermarking. Traditional digital watermarks, like the copyright logos you see on stock photos, are visible and easily removable. Metadata-based approaches like C2PA attach information to files that can be stripped when content is downloaded, screenshotted, or converted between formats. SynthID takes a fundamentally different approach by embedding watermarks directly into the content itself (pixel values, word choices, audio waveforms), making them resistant to common modifications like cropping, compression, and format conversion.

Understanding the scale of SynthID adoption reveals its significance. According to Google's official announcements at I/O 2025, over 10 billion pieces of content have been watermarked with SynthID since its launch. This includes content generated through Gemini (text and images), Imagen 3 (images), Lyria (audio and music), and Veo (video). The technology is automatically applied to all AI-generated content from these Google products, meaning users don't need to take any action to have their generated content watermarked.

For content verification professionals, SynthID provides a new tool in the authentication arsenal. Journalists fact-checking images, content moderators reviewing submissions, and researchers studying AI-generated content can use the SynthID Detector portal to verify whether content originated from Google's AI systems. While this doesn't solve the entire problem of AI content detection (since it's limited to Google's ecosystem), it establishes an important precedent for responsible AI development.

The key facts that define SynthID's current state are its multi-modal support (images, text, audio, video), the more than 10 billion pieces of content already watermarked, the open-source availability of SynthID Text through Hugging Face Transformers, and its research validation in a paper published in Nature in October 2024.

How SynthID Works: The Technology Behind Invisible Watermarking

Understanding how SynthID works requires examining different approaches for each content type, as the watermarking mechanisms vary significantly between images, text, audio, and video. The fundamental principle, however, remains consistent: embed information in ways that are imperceptible to humans but detectable by specialized algorithms.

For image watermarking, SynthID uses neural network-based signal embedding. The technology modifies pixel values in ways that are invisible to the human eye but create a detectable pattern when analyzed by SynthID's detection algorithms. According to Google DeepMind's research (https://deepmind.google/models/synthid/ ), these modifications are distributed across the entire image rather than concentrated in specific regions, making them robust against cropping. The watermark survives common image transformations including resizing, JPEG compression (even at low quality settings), color adjustments, and filter applications. This robustness comes from the distributed nature of the signal—even if parts of the image are removed or modified, enough of the watermark pattern remains for detection.
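SynthID's image embedding is a learned neural network whose internals are not public, so the toy below swaps in a classic spread-spectrum watermark purely to illustrate the "distributed signal" argument. A faint pseudorandom ±1 pattern derived from a secret key is added to every pixel, and a detector correlates an image against that pattern. Because the pattern covers the whole image, a crop still correlates strongly. The 8-pixel tiling (which lets the detector simply try every alignment), the embedding strength, and the nearly flat test image are all assumptions chosen to keep the sketch small; practical schemes must also suppress image content before correlating and cope with rescaling.

```python
import random

TILE = 8  # toy assumption: the pattern repeats every 8 pixels, so only 8x8 alignments need checking


def make_tile(key):
    """Pseudorandom +1/-1 tile derived from a secret key."""
    rng = random.Random(key)
    return [[rng.choice((-1, 1)) for _ in range(TILE)] for _ in range(TILE)]


def embed(image, tile, strength=2.0):
    """Add a faint copy of the tiled pattern to every pixel, spreading the signal over the whole image."""
    return [[p + strength * tile[r % TILE][c % TILE] for c, p in enumerate(row)]
            for r, row in enumerate(image)]


def crop(image, top, left, height, width):
    return [row[left:left + width] for row in image[top:top + height]]


def detect_score(image, tile):
    """Best mean-centred correlation with the pattern over all 64 possible alignments."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    best = float("-inf")
    for dr in range(TILE):
        for dc in range(TILE):
            total = sum((p - mean) * tile[(r + dr) % TILE][(c + dc) % TILE]
                        for r, row in enumerate(image) for c, p in enumerate(row))
            best = max(best, total / len(pixels))
    return best


# Nearly flat 64x64 gray test image with light random texture
texture_rng = random.Random(7)
original = [[128 + texture_rng.randint(-5, 5) for _ in range(64)] for _ in range(64)]
tile = make_tile(key=1234)
marked = embed(original, tile)

# A 32x32 crop at an offset that is not a multiple of the tile size
print(round(detect_score(crop(marked, 13, 21, 32, 32), tile), 2))    # close to the embedding strength of 2.0
print(round(detect_score(crop(original, 13, 21, 32, 32), tile), 2))  # close to 0
```

The crop keeps only a quarter of the pixels, yet every remaining pixel still carries a piece of the pattern, which is the intuition behind the cropping robustness described above.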

Text watermarking employs a different mechanism based on token probability manipulation. When a large language model generates text, it produces probability scores for each potential next word (token). SynthID Text adjusts these probability scores using a pseudorandom function, creating a statistical pattern that can be detected later. For example, in the sentence "My favourite tropical fruits are mango and...", multiple words could reasonably follow (bananas, papaya, pineapple). SynthID subtly influences which word is selected without degrading the quality or coherence of the output. The detection algorithm then analyzes the statistical properties of the token selections to determine if the text was generated with SynthID watermarking enabled.
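The Nature paper describes this probability adjustment as tournament sampling: several candidate tokens are drawn from the model's distribution and paired off in rounds, and in each round the candidate with the higher keyed pseudorandom g-value wins. The sketch below is a simplified, unofficial illustration of that idea. The SHA-256-based g-function, the uniform toy "model" over fruit names, one key per round, and scoring by a plain average of g-values are our assumptions, not Google's implementation.

```python
import hashlib
import random


def g_value(key, context, token):
    """Keyed pseudorandom bit (0 or 1) for a candidate token given the preceding n-gram context."""
    payload = f"{key}|{' '.join(context)}|{token}".encode()
    return hashlib.sha256(payload).digest()[0] & 1


def tournament_sample(probs, context, keys, rng):
    """Draw 2**len(keys) candidates, then keep the higher-g candidate of each pair, one round per key."""
    tokens = list(probs)
    candidates = rng.choices(tokens, weights=[probs[t] for t in tokens], k=2 ** len(keys))
    for key in keys:
        winners = []
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga, gb = g_value(key, context, a), g_value(key, context, b)
            winners.append(a if ga > gb else b if gb > ga else rng.choice((a, b)))
        candidates = winners
    return candidates[0]


def mean_g_score(tokens, keys, ngram_len):
    """Average g-value over every scored token and every key; about 0.5 for unwatermarked text."""
    scores = [g_value(key, tuple(tokens[i - ngram_len + 1:i]), tokens[i])
              for i in range(ngram_len - 1, len(tokens)) for key in keys]
    return sum(scores) / len(scores)


VOCAB = ["mango", "papaya", "pineapple", "banana", "guava", "lychee", "kiwi", "melon",
         "fig", "date", "lime", "plum", "pear", "apple", "grape", "cherry"]
PROBS = {word: 1 / len(VOCAB) for word in VOCAB}  # toy "model": uniform next-token distribution
KEYS = [11, 22, 33]
NGRAM_LEN = 5


def generate(n_tokens, watermark, seed):
    rng = random.Random(seed)
    tokens = ["my", "favourite", "fruits", "are"]  # prompt supplies the first NGRAM_LEN - 1 context tokens
    for _ in range(n_tokens):
        context = tuple(tokens[-(NGRAM_LEN - 1):])
        if watermark:
            tokens.append(tournament_sample(PROBS, context, KEYS, rng))
        else:
            tokens.append(rng.choice(VOCAB))
    return tokens


marked = generate(300, watermark=True, seed=1)
plain = generate(300, watermark=False, seed=1)
print(round(mean_g_score(marked, KEYS, NGRAM_LEN), 3))  # noticeably above 0.5
print(round(mean_g_score(plain, KEYS, NGRAM_LEN), 3))   # close to 0.5
```

Watermarked text scores well above 0.5, while ordinary sampling, or checking with the wrong keys, stays near 0.5. That is why the keys must stay secret and why only the key holder can run detection.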

Audio watermarking through Lyria integrates directly into music generation. The watermark is embedded into the audio waveform in ways that are inaudible to human ears. According to Google's documentation, these watermarks survive common audio transformations including MP3 compression, speed changes, and added background noise. This is particularly important for detecting AI-generated music and podcast content created through NotebookLM's audio features.

Video watermarking extends the image approach to temporal sequences. SynthID embeds watermarks in video frames using techniques similar to image watermarking but designed to remain consistent across frame rate changes, video compression, and other modifications common in video processing pipelines. The Veo video generation model automatically applies these watermarks to all generated content.

For developers interested in the technical implementation, the SynthID Text watermarking system uses several key parameters as documented in Google's AI developer resources (https://ai.google.dev/responsible/docs/safeguards/synthid ). The ngram_len parameter (recommended default: 5) balances robustness and detectability: larger values make the watermark more detectable but more brittle to edits. The keys parameter is a list of unique random integers used to compute g-function scores across the vocabulary; the length of the list determines the number of watermarking layers. Detection uses a Bayesian approach that outputs three possible states (watermarked, not watermarked, or uncertain), with customizable thresholds to control false positive and false negative rates.
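Google's detector is a trained Bayesian model; as a simpler stand-in, the hypothetical function below uses a z-score to show how two thresholds turn a list of 0/1 g-values into the three outcomes. It assumes that, without a watermark, each g-value behaves like a fair coin (mean 0.5, standard deviation 0.5). The threshold values and minimum count are illustrative, not SynthID defaults.

```python
import math


def classify_g_values(g_values, watermark_z=4.0, clean_z=1.0, min_count=100):
    """Return (label, z) for 0/1 g-values, leaving an 'uncertain' band between two thresholds."""
    n = len(g_values)
    if n < min_count:
        return "uncertain", 0.0  # too little signal to decide either way
    mean = sum(g_values) / n
    # Without a watermark the mean of n fair bits has standard error 0.5 / sqrt(n)
    z = (mean - 0.5) / (0.5 / math.sqrt(n))
    if z >= watermark_z:
        return "watermarked", z
    if z <= clean_z:
        return "not_watermarked", z
    return "uncertain", z


print(classify_g_values([1, 1, 0, 1] * 50)[0])  # 200 values, mean 0.75 -> watermarked
print(classify_g_values([0, 1] * 100)[0])       # mean 0.5 -> not_watermarked
print(classify_g_values([1] * 40)[0])           # only 40 values -> uncertain
```

Raising watermark_z trades more "uncertain" results for fewer false positives; lowering clean_z does the same for false negatives.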

What SynthID Supports: Multi-Modal Content Coverage

SynthID's multi-modal capabilities represent one of its most significant advantages, supporting watermarking across the four primary types of AI-generated content. Understanding which Google products integrate SynthID and how the watermarking differs across content types helps users know when and where to apply detection.

| Content Type | Google Products | Watermark Method | Robustness Level | Detection Method |
|---|---|---|---|---|
| Images | Gemini, Imagen 3 | Neural pixel embedding | High (survives cropping, compression, filters) | SynthID Detector Portal |
| Text | Gemini App/Web | Token probability adjustment | Medium (survives minor edits, weak against translation) | Portal or API |
| Audio | Lyria, NotebookLM | Inaudible waveform embedding | High (survives MP3 compression, speed changes) | Portal |
| Video | Veo | Frame-by-frame neural embedding | High (survives format conversion, compression) | Portal |

Image watermarking through Gemini and Imagen 3 provides the most mature implementation. When users generate images through Gemini's multimodal capabilities or Imagen 3, SynthID watermarks are automatically embedded. The watermark persists through screenshot capture, format conversion (PNG to JPG and vice versa), social media uploads (which typically recompress images), and moderate editing operations. Testing by Analytics Vidhya (https://www.analyticsvidhya.com/blog/2025/12/how-to-detect-ai-generated-content/ ) confirmed that Gemini-generated images consistently trigger positive detection in SynthID Detector.

Text watermarking represents the newest and most complex modality. The SynthID Text system was open-sourced through Hugging Face Transformers (version 4.46.0+), allowing developers to implement watermarking in their own text generation systems. However, several limitations apply to text watermarking that don't affect other modalities. Factual responses provide less opportunity for watermarking because there's limited flexibility in token selection without affecting accuracy. Translation to other languages significantly reduces detection confidence. Extensive paraphrasing or rewriting can remove the watermark entirely.

SynthID's audio watermarking covers both Lyria's music generation and NotebookLM's Audio Overview feature, which creates podcast-style discussions from uploaded documents; all audio generated this way carries SynthID watermarks. The watermarks survive common transformations including speed adjustment, noise addition, and lossy compression, making them suitable for detecting AI-generated audio even after processing through audio editing software.

Video watermarking through Veo addresses the growing concern over AI-generated video content. As video generation capabilities improve, the ability to detect AI-generated video becomes increasingly important for identifying deepfakes and synthetic media. SynthID's video watermarking maintains detection capability even after frame rate changes and video compression, though like all watermarking systems, it can be defeated by sufficiently destructive modifications.

For developers building applications that work with AI-generated content, understanding these distinctions helps inform which content types can be reliably verified. If you build on the Gemini API, knowing that generated content carries SynthID watermarks by default is important for transparency with end users.

How to Detect AI Content: Practical Step-by-Step Guide

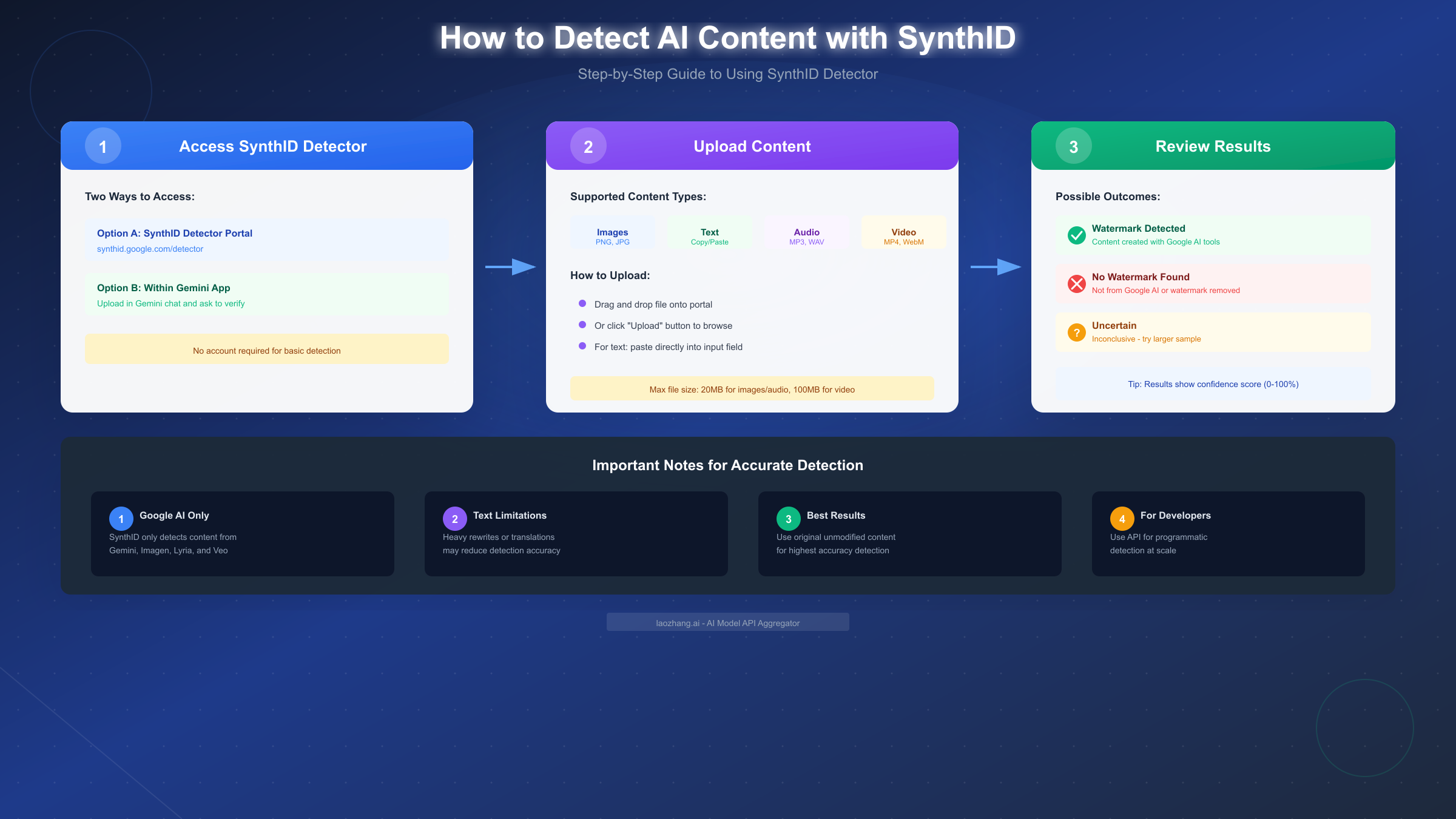

The SynthID Detector provides a straightforward way for anyone to verify whether content was generated by Google's AI systems. This practical guide walks through the detection process for different content types, including expected results and how to interpret them.

Step 1: Access the SynthID Detector Portal

Navigate to the SynthID Detector at synthid.google.com/detector (or access through Gemini by uploading content and asking for verification). Google initially rolled the portal out to early testers through a waitlist for journalists, media professionals, and researchers, so check the current access requirements before building it into a verification workflow.

Step 2: Prepare Your Content for Upload

Before uploading, ensure your content meets the format requirements:

- Images: PNG, JPG, WebP formats supported; maximum 20MB file size

- Text: Direct paste into the text field; minimum 200 characters recommended for reliable detection

- Audio: MP3, WAV, OGG formats; maximum 20MB file size

- Video: MP4, WebM formats; maximum 100MB file size
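Before uploading in bulk, a small pre-flight check can catch files the portal would reject. The hypothetical helpers below hard-code the limits listed above, treating 1 MB as 1024² bytes and accepting .jpeg as JPG (both assumptions). The portal's actual limits may differ or change, so treat the table as configuration to verify.

```python
from pathlib import Path

MB = 1024 ** 2  # assumption: "MB" in the limits above means mebibytes
# Limits as listed in this article; confirm against the current SynthID Detector
MAX_BYTES = {
    ".png": 20 * MB, ".jpg": 20 * MB, ".jpeg": 20 * MB, ".webp": 20 * MB,
    ".mp3": 20 * MB, ".wav": 20 * MB, ".ogg": 20 * MB,
    ".mp4": 100 * MB, ".webm": 100 * MB,
}
MIN_TEXT_CHARS = 200


def check_file(name: str, size_bytes: int) -> list[str]:
    """Return a list of problems (empty if the file looks uploadable)."""
    suffix = Path(name).suffix.lower()
    if suffix not in MAX_BYTES:
        return [f"unsupported format: {suffix or '(none)'}"]
    if size_bytes > MAX_BYTES[suffix]:
        return [f"too large: {size_bytes} bytes > {MAX_BYTES[suffix]} bytes"]
    return []


def check_text(text: str) -> list[str]:
    """Flag text samples shorter than the recommended minimum for reliable detection."""
    n = len(text.strip())
    if n < MIN_TEXT_CHARS:
        return [f"short sample: {n} characters ({MIN_TEXT_CHARS}+ recommended)"]
    return []


print(check_file("photo.PNG", 5_000_000))  # []
print(check_file("report.pdf", 10))        # ['unsupported format: .pdf']
```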

Step 3: Upload and Analyze Content

Drag and drop your file into the detector interface, or click the upload button to browse. For text, paste directly into the provided text field. The analysis typically completes within 2-30 seconds depending on content size and type.

Step 4: Interpret Detection Results

SynthID Detector provides three possible outcomes:

The "Watermark Detected" result confirms the content was generated using Google AI tools with SynthID watermarking enabled. This result includes a confidence score indicating how certain the detection is. High confidence scores (>90%) indicate reliable detection, while lower scores may result from content modifications.

The "No Watermark Found" result means either the content was not generated by Google AI tools, or the watermark was removed through extensive modification. This result does NOT mean the content is human-created—it could be from ChatGPT, Claude, Midjourney, or any non-Google AI system.

The "Uncertain" result indicates the analysis was inconclusive. This often occurs with short text samples, heavily modified content, or edge cases where the watermark signal is present but weak. For text, try analyzing a longer sample; for images, try the original uncompressed version if available.

Use Case Scenarios for Different Professionals

Content moderators reviewing user submissions can use SynthID as one tool in their verification workflow. A positive SynthID detection confirms Google AI origin, while negative results require additional verification methods since non-Google AI content won't be detected.

Journalists fact-checking images can verify whether visual content originated from Gemini or Imagen. This is particularly useful for debunking fake images that claim to be photographs but were actually AI-generated using Google's tools.

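Researchers studying AI-generated content can run the open-source SynthID Text detector programmatically to analyze large datasets, with one important caveat: it only recognizes watermarks applied with keys the researcher controls, not the private keys Google uses for Gemini. The developer integration section below covers this approach.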

Academic integrity officers can verify whether submitted text was generated using Gemini, though the limitations regarding rewriting and translation mean this should be one of multiple verification approaches rather than a sole determinant.

Limitations and Workarounds: What SynthID Cannot Do

Honest discussion of SynthID's limitations is essential for setting appropriate expectations and using the technology effectively. No watermarking system is perfect, and understanding these constraints helps users supplement SynthID with other verification methods.

The most significant limitation: SynthID only detects Google AI content. This cannot be emphasized enough—SynthID provides zero information about content generated by ChatGPT, Claude, Midjourney, Stable Diffusion, DALL-E, or any other non-Google AI system. A negative SynthID result does NOT mean content is human-created; it only means it wasn't created by Google's AI tools with watermarking enabled.

Text watermarking has specific weaknesses that image watermarking doesn't share. According to the research published in Nature, several transformations can significantly reduce or eliminate text watermarks:

- Translation to another language removes most watermark signal

- Extensive paraphrasing (>50% word changes) degrades detection

- Factual content has weaker watermarks because there's less flexibility in token selection

- Very short text samples may not contain enough signal for reliable detection
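A back-of-the-envelope calculation shows why short and heavily edited texts are hard to classify. It uses a simplified z-score picture rather than Google's Bayesian detector (an assumption): unwatermarked text has a mean g-value of 0.5, watermarked text has mean $\mu_w$, and $n$ g-values are scored. The expected z-score, and the number of scored values needed to reach a threshold $z^*$, are then:

$$
z \approx \frac{(\mu_w - 0.5)\sqrt{n}}{0.5}, \qquad n \gtrsim \left(\frac{0.5\, z^*}{\mu_w - 0.5}\right)^2
$$

With $\mu_w = 0.7$ and $z^* = 4$ this gives $n \approx 100$; if editing dilutes the signal to $\mu_w = 0.6$, the requirement quadruples to about 400. Edits also hurt more than their raw count suggests: with ngram_len = 5, each g-value depends on a token and the four tokens before it, so changing one token can disturb up to five scored positions.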

Adversarial attacks can defeat watermarking systems, including SynthID. While SynthID is more robust than metadata-based approaches, determined adversaries can still remove watermarks through extensive modification. Google's documentation explicitly states that "SynthID Text is not designed to directly stop motivated adversaries from causing harm"—it's a transparency tool, not a security system.

Detection confidence varies based on content modification history. The more a piece of content has been processed—compressed, resized, converted between formats, edited—the lower the detection confidence. While SynthID is designed to survive common modifications, accumulated changes can push detection into uncertain territory.

Partial content presents detection challenges. If someone extracts only a portion of a watermarked image (a small crop) or quotes only part of a watermarked text, there may not be enough watermark signal remaining for detection. The watermark is distributed throughout the content, so larger samples generally produce more reliable results.

For organizations needing comprehensive AI content detection, the recommendation is to use multiple approaches: SynthID for Google AI content, other detection tools for non-Google AI, and manual verification for edge cases. The AI detection ecosystem includes various commercial tools that attempt to detect AI-generated text regardless of source, though these generally have higher false positive and negative rates than watermark-based detection.

Developer Integration: API and SDK Implementation Guide

For developers building applications that need to detect or apply SynthID watermarks programmatically, Google provides several integration pathways. This section covers the technical implementation for both watermark application (text generation) and detection.

Setting Up SynthID Text Watermarking

The production-ready implementation is available through Hugging Face Transformers version 4.46.0+. Install the required dependencies:

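```bash
pip install "transformers>=4.46.0" torch

# For GPU support with CUDA 11.8: install the CUDA build of PyTorch from the PyTorch index,
# then install Transformers from PyPI (the PyTorch index does not host transformers)
pip install torch --index-url https://download.pytorch.org/whl/cu118
pip install "transformers>=4.46.0"
```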

Applying Watermarks During Text Generation

Watermark application requires only a configuration object passed to the model's generate method:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, SynthIDTextWatermarkingConfig

# Load model and tokenizer
model = AutoModelForCausalLM.from_pretrained("google/gemma-2b-it")
tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b-it")

# Configure watermarking
watermark_config = SynthIDTextWatermarkingConfig(
    keys=[42, 123, 456],  # Your unique secret keys; the list length sets the number of watermarking layers
    ngram_len=5,          # Recommended default
)

# Generate with watermark, sampling rather than decoding greedily (as in the Transformers docs example)
input_text = "Explain quantum computing in simple terms:"
inputs = tokenizer(input_text, return_tensors="pt")
outputs = model.generate(
    **inputs,
    watermarking_config=watermark_config,
    do_sample=True,
    max_new_tokens=200,
)
watermarked_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
```

Model Requirements and Hardware Considerations

According to the GitHub repository (https://github.com/google-deepmind/synthid-text ), which has 709 stars and is licensed under Apache-2.0:

- Gemma 2B IT: Requires 16GB GPU memory

- Gemma 7B IT: Requires 32GB GPU memory

- GPT-2: Compatible with CPU (useful for testing)

Detecting Watermarks Programmatically

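The detection system uses a Bayesian detector that must first be trained on text generated with your watermarking keys. In Transformers, the trained detector is combined with a logits processor rebuilt from the same watermarking configuration, and it returns scores rather than labels:

```python
from transformers import (
    AutoTokenizer,
    BayesianDetectorModel,
    SynthIDTextWatermarkDetector,
    SynthIDTextWatermarkLogitsProcessor,
)

# Load a trained Bayesian detector (this is the dummy checkpoint from the Transformers docs;
# train your own on text generated with your keys)
detector_model = BayesianDetectorModel.from_pretrained("joaogante/dummy_synthid_detector")
# Rebuild the logits processor from the watermarking config (keys, ngram_len, ...) stored with the detector
logits_processor = SynthIDTextWatermarkLogitsProcessor(
    **detector_model.config.watermarking_config, device="cpu"
)
tokenizer = AutoTokenizer.from_pretrained(detector_model.config.model_name)
detector = SynthIDTextWatermarkDetector(detector_model, logits_processor, tokenizer)

# Score text; map scores to watermarked / not watermarked / uncertain with thresholds you choose
inputs = tokenizer([watermarked_text], return_tensors="pt")
scores = detector(inputs.input_ids)
```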

The reference implementation in Google DeepMind's GitHub repository includes Jupyter notebooks demonstrating end-to-end workflows with both Gemma and GPT-2 models. Note that the GitHub implementation is for research purposes; production deployments should use the Hugging Face Transformers integration.


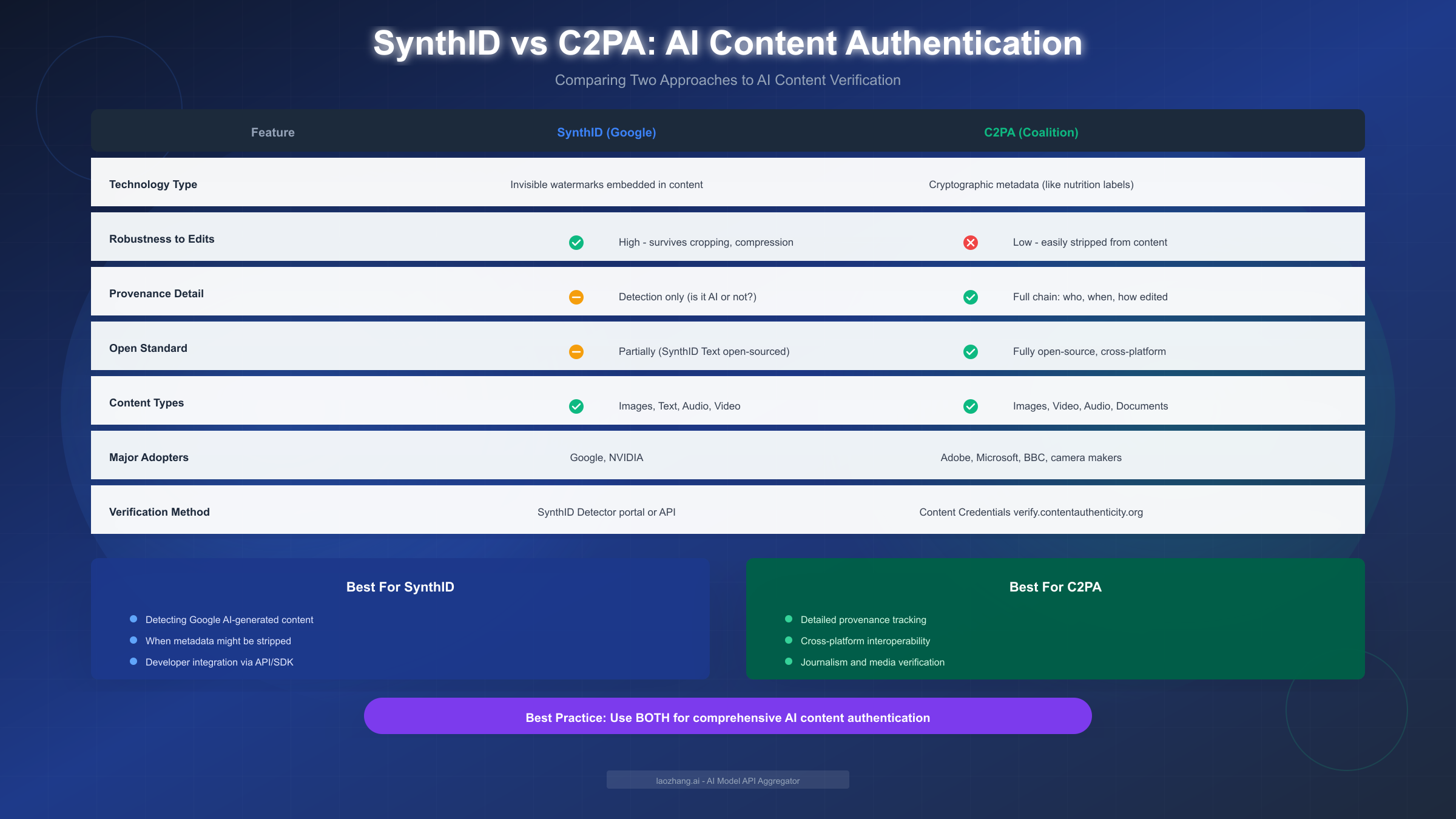

SynthID vs Alternatives: Comparing AI Content Authentication Methods

The landscape of AI content authentication includes multiple approaches, with SynthID and C2PA representing the two primary paradigms. Understanding how these complement each other helps organizations build comprehensive verification strategies.

SynthID and C2PA represent fundamentally different philosophies. SynthID focuses on embedded watermarks that survive content modification but provide limited provenance information (essentially just "this is from Google AI"). C2PA (Coalition for Content Provenance and Authenticity) focuses on cryptographic metadata that provides rich provenance information (who created it, when, how it was edited) but can be easily stripped from content.

| Aspect | SynthID | C2PA |
|---|---|---|
| Technology | Invisible watermarks embedded in content | Cryptographic metadata attached to files |
| Robustness | High: survives cropping, compression, conversion | Low: metadata can be stripped |
| Information Depth | Binary (AI or not) | Rich provenance chain |
| Openness | Partially open (SynthID Text) | Fully open standard |
| Detection Tools | SynthID Detector portal | Content Credentials verify portal |
| Industry Support | Google, NVIDIA | Adobe, Microsoft, Google, BBC, camera manufacturers |

The complementary nature of these approaches suggests a hybrid strategy. Google itself has announced that AI-generated images from its models will include both SynthID watermarks AND C2PA metadata. This means that platforms preserving metadata can verify through C2PA, while the watermark remains as a fallback when metadata is stripped.
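The hybrid strategy amounts to a simple fallback order: trust rich C2PA provenance when the manifest survived, and fall back to the embedded watermark when it was stripped. In the sketch below, read_c2pa_manifest and detect_synthid are hypothetical placeholders to be backed by a real C2PA reader and a SynthID check; only the control flow is the point.

```python
from typing import Callable, Optional


def verify_provenance(
    data: bytes,
    read_c2pa_manifest: Callable[[bytes], Optional[dict]],  # hypothetical: returns None if no manifest
    detect_synthid: Callable[[bytes], str],  # hypothetical: "watermarked" / "not_watermarked" / "uncertain"
) -> str:
    """Prefer C2PA provenance when present; otherwise fall back to the SynthID watermark."""
    manifest = read_c2pa_manifest(data)
    if manifest is not None:
        return "C2PA manifest found: inspect its provenance chain"
    result = detect_synthid(data)
    if result == "watermarked":
        return "No C2PA metadata, but a SynthID watermark was detected: Google AI origin"
    if result == "uncertain":
        return "No C2PA metadata; SynthID result inconclusive"
    return "No C2PA metadata and no SynthID watermark: origin unknown (not proof of human creation)"


# Example with stub checkers standing in for real tools
print(verify_provenance(b"...", lambda d: None, lambda d: "watermarked"))
```

Checking C2PA first is a design choice; a stricter pipeline could run both checks and flag disagreements.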

Other AI detection approaches exist but with significant limitations. Commercial AI text detectors attempt to identify AI-generated content regardless of source, but these tools have been criticized for high false positive rates (incorrectly flagging human-written content) and inconsistent accuracy across different AI models. These tools analyze writing patterns rather than embedded markers, making them fundamentally less reliable than watermark-based detection.

Meta's Video Seal represents another emerging approach. Meta has released Video Seal as an open-source video watermarking framework. Together with Google's SynthID and the open C2PA standard, it suggests the industry is converging on watermarking and provenance signals as key transparency mechanisms for AI-generated content.

For organizations choosing between approaches, the practical recommendation is:

- Use SynthID for detecting Google AI content with high confidence

- Implement C2PA for detailed provenance tracking when metadata will be preserved

- Consider multiple detection tools for comprehensive coverage

- Recognize that no single approach solves all AI content verification challenges

For those comparing AI video generation models, understanding which services implement watermarking helps inform decisions about transparency and content verification capabilities.

Frequently Asked Questions

Can SynthID detect ChatGPT or Claude content?

No. SynthID only detects content generated by Google's AI systems (Gemini, Imagen, Lyria, Veo). It provides zero information about content from ChatGPT, Claude, Midjourney, Stable Diffusion, or any other non-Google AI. A negative SynthID result does not mean content is human-created—only that it's not from Google AI with watermarking enabled.

Is SynthID detection free to use?

Yes, the SynthID Detector portal is free to use, although Google initially limited access to early testers through a waitlist. For programmatic work, SynthID Text watermarking and detection are available at no cost through the open-source Hugging Face Transformers library, though the open-source detector only recognizes watermarks applied with your own keys.

Can watermarks be removed from SynthID content?

While SynthID watermarks are designed to be tamper-resistant and survive common modifications (cropping, compression, format conversion), they can be degraded or removed through extensive editing. Translation to other languages removes most text watermarks, and heavy image manipulation can reduce detection confidence. However, casual modifications like social media uploading or screenshot capture do not remove the watermarks.

How accurate is SynthID detection?

Detection accuracy depends on content type and modification history. For unmodified or lightly modified content, detection is highly reliable. For text, accuracy decreases with extensive paraphrasing or translation. The detector provides confidence scores and can report "uncertain" when results are inconclusive, which is preferable to false positives or negatives.

What happens if I use Gemini through an API instead of the web interface?

Content generated through Google's APIs (Gemini API, Imagen API, etc.) includes SynthID watermarks by default when the underlying models support it. For example, images generated with Gemini's image models through the API carry SynthID watermarks just like images generated in the Gemini app.

Is SynthID required by law?

Currently, no laws mandate SynthID usage. However, regulations like the EU AI Act include provisions for AI-generated content transparency, and watermarking technologies like SynthID may become standard mechanisms for compliance. Several US states are also considering legislation requiring disclosure of AI-generated content in specific contexts like political advertising.

Can I use SynthID in my own applications?

SynthID Text has been open-sourced and can be integrated into your own text generation applications through Hugging Face Transformers. Image, audio, and video watermarking are currently only available through Google's native products and are not available for third-party integration. Check Google AI developer documentation for the latest availability.

How does SynthID affect content quality?

SynthID is designed to be imperceptible. Text watermarking may slightly influence word choice in ways that maintain semantic meaning but create detectable patterns. Image watermarking modifies pixels in ways invisible to human vision. Audio watermarks are inaudible. Google's research indicates no meaningful quality degradation from SynthID watermarking.

SynthID represents an important step toward transparency in AI-generated content, though it's one tool among many rather than a complete solution. As AI capabilities continue to advance, technologies like SynthID, combined with open standards like C2PA and ongoing research into detection methods, will be essential for maintaining trust in digital content. For developers, content moderators, and researchers, understanding both the capabilities and limitations of SynthID enables more effective use of this technology in verification workflows.