The landscape of AI API pricing has undergone dramatic shifts in 2025, with OpenAI's GPT-4o emerging as a compelling balance between cost efficiency and performance. As enterprises increasingly integrate AI into their core operations, understanding the nuanced pricing structures and making informed decisions about model selection has become crucial for maintaining competitive advantage while managing operational costs.

In this comprehensive analysis, we'll explore the current state of GPT-4o pricing, dissect how it compares to major competitors like Claude 4 and Gemini 2.5, and provide actionable insights for optimizing your AI implementation costs. Whether you're a startup watching every dollar or an enterprise scaling AI across thousands of applications, this guide will equip you with the knowledge to make data-driven decisions about your AI infrastructure investments.

Understanding GPT-4o Pricing Structure in 2025

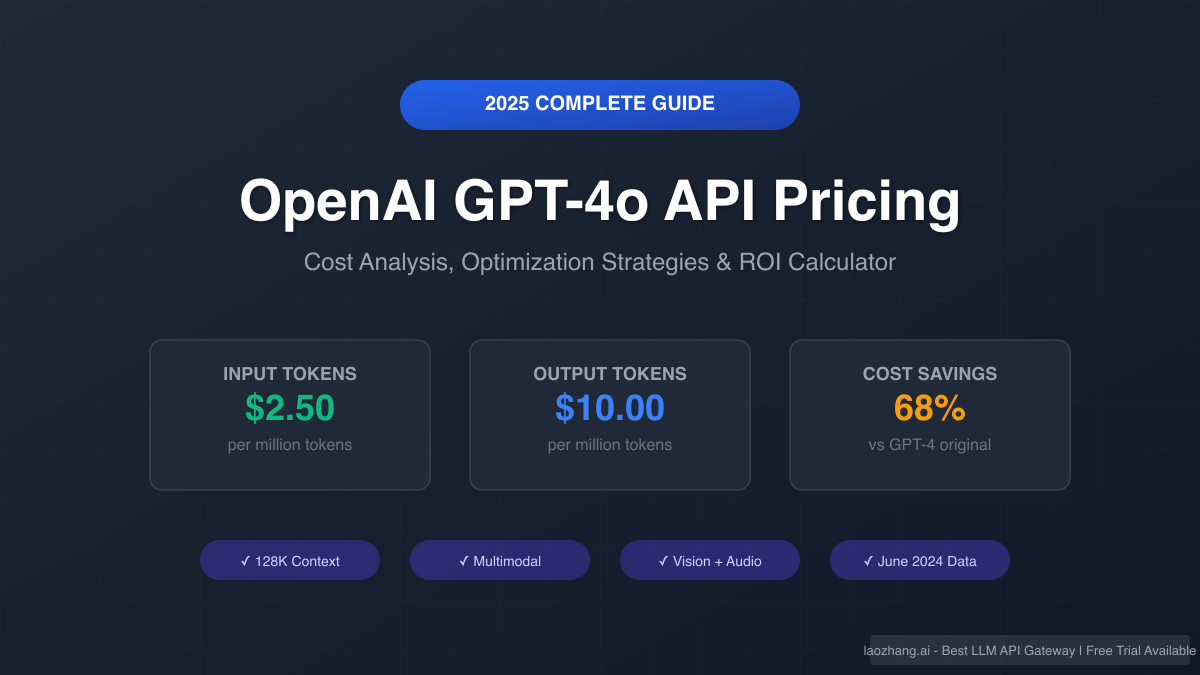

The pricing model for GPT-4o has evolved significantly since its initial release, reflecting OpenAI's commitment to making advanced AI capabilities more accessible while maintaining sustainable business operations. As of July 2025, the standard GPT-4o API pricing stands at $2.50 per million input tokens and $10.00 per million output tokens, representing a remarkable 68% cost reduction compared to the original GPT-4 model.

This pricing structure reflects a fundamental shift in how AI providers approach market positioning. The distinction between input and output token pricing acknowledges the computational asymmetry in language model operations, where generating responses typically requires more resources than processing inputs. For developers and businesses, this means that applications focused on analysis and understanding (high input, low output) can be significantly more cost-effective than those requiring extensive content generation.

The introduction of GPT-4o-mini further democratizes access to advanced AI capabilities. Priced at just $0.15 per million input tokens and $0.60 per million output tokens, this lightweight variant offers a strong cost-performance ratio for applications that don't require the full capabilities of the flagship model. With the same 128K context window as GPT-4o, up to 16K output tokens per request, and vision capabilities intact, GPT-4o-mini has become the go-to choice for high-volume, cost-sensitive applications.
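To make the input/output asymmetry concrete, here is a minimal sketch of a per-request cost calculation. It uses only the per-million-token prices quoted above; the `PRICES` table and the `request_cost` helper are illustrative names, not part of any official SDK.

```python
# Prices in USD per million tokens, as quoted in this article (July 2025).
PRICES = {
    "gpt-4o": {"input": 2.50, "output": 10.00},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the quoted per-million-token rates."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# An analysis-style call (long input, short output) versus a
# generation-style call (short input, long output) on the same model.
analysis = request_cost("gpt-4o", input_tokens=10_000, output_tokens=500)
generation = request_cost("gpt-4o", input_tokens=500, output_tokens=10_000)
print(f"analysis: ${analysis:.4f}, generation: ${generation:.4f}")
```

With the same total token count, the generation-style call costs more than three times as much, which is why the input/output mix matters as much as raw volume.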

The Competitive Landscape: How GPT-4o Stacks Up

The AI API market in 2025 presents a fascinating spectrum of options, each with distinct pricing philosophies and target use cases. Understanding these differences is crucial for making informed decisions about your AI infrastructure.

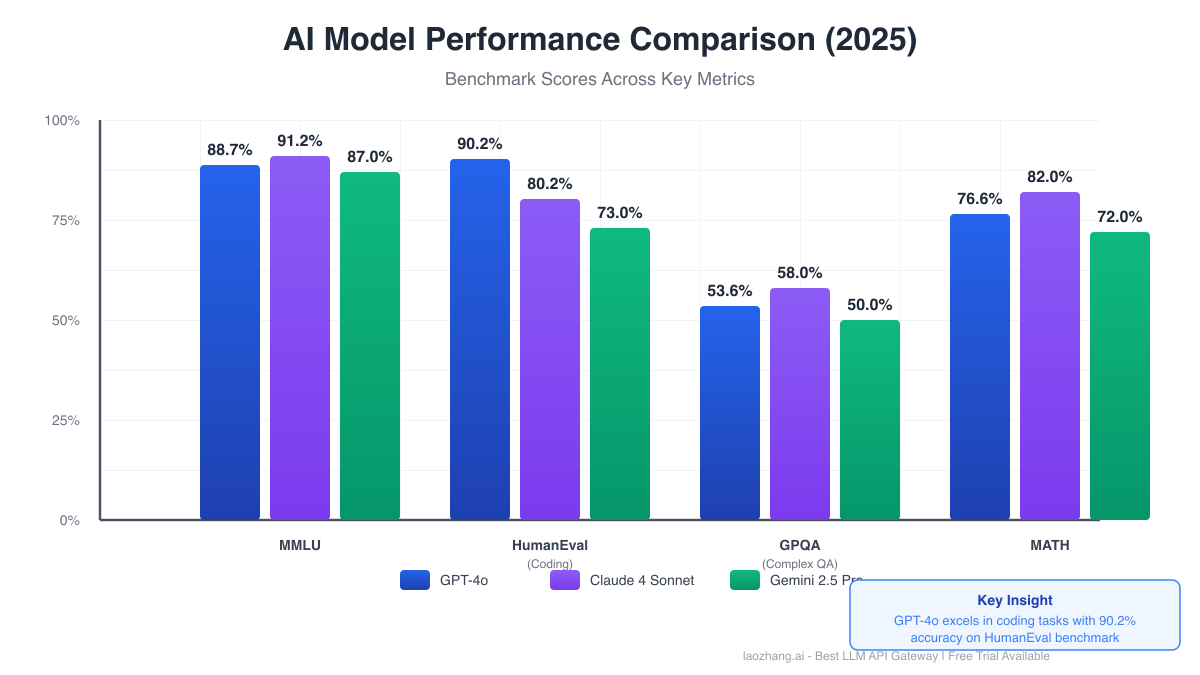

Claude 4, positioned as the premium option in the market, commands $3.00 per million input tokens and $15.00 per million output tokens for its Sonnet variant. This premium pricing aligns with Claude's strong performance in specific domains, particularly coding tasks, where Sonnet 4 reaches 80.2% on the SWE-bench Verified benchmark when run with parallel test-time compute. The flagship Opus 4 model pushes even further into premium territory at $15.00 per million input tokens and $75.00 per million output tokens, making it the most expensive option in the market but justifiable for applications requiring absolute peak performance.

On the opposite end of the spectrum, Google has aggressively pursued a volume-based strategy. Alongside the Gemini 2.5 family, its older Gemini 1.5 Flash-8B model, priced at just $0.0375 per million input tokens, represents the most cost-effective option for basic AI tasks. This pricing strategy reflects Google's infrastructure advantages and its strategic goal of capturing market share in the rapidly growing AI implementation space.

The practical implications of these pricing differences become stark when scaled to enterprise levels. For an organization processing 100 million tokens daily, the gap between GPT-4o and Claude 4 Sonnet depends heavily on the input/output mix: about $18,000 per year if the traffic is all input tokens (a $0.50 per million difference), rising to over $180,000 per year if it is all output tokens (a $5.00 per million difference). However, raw pricing tells only part of the story: performance characteristics, reliability, and specific use case requirements often justify the premium for higher-priced options.
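The annual gap at 100 million tokens per day can be checked with a few lines of arithmetic. The sketch below uses the prices quoted above; the `output_share` parameter (the fraction of daily tokens that are output) is an assumption introduced here, since the article does not state a traffic mix.

```python
# USD per million tokens, as quoted above.
GPT_4O = {"input": 2.50, "output": 10.00}
CLAUDE_SONNET_4 = {"input": 3.00, "output": 15.00}


def annual_cost(prices: dict, tokens_per_day: int, output_share: float) -> float:
    """Yearly USD cost for a daily token volume, given the output fraction."""
    millions = tokens_per_day / 1_000_000
    per_million = (1 - output_share) * prices["input"] + output_share * prices["output"]
    return millions * per_million * 365


def annual_gap(tokens_per_day: int, output_share: float) -> float:
    """How much more Claude Sonnet 4 costs per year than GPT-4o."""
    return (annual_cost(CLAUDE_SONNET_4, tokens_per_day, output_share)
            - annual_cost(GPT_4O, tokens_per_day, output_share))


for share in (0.0, 0.25, 1.0):
    gap = annual_gap(100_000_000, share)
    print(f"output share {share:.0%}: gap ${gap:,.0f}/year")
```

The gap ranges from $18,250 per year with input-only traffic to $182,500 per year with output-only traffic, so a six-figure difference applies only to generation-heavy workloads.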

Real-World Performance: Beyond the Benchmarks

While benchmark scores provide valuable comparative data, real-world performance often tells a more nuanced story. GPT-4o's performance profile reveals strengths that align particularly well with enterprise needs. With an 88.7% score on MMLU (Massive Multitask Language Understanding) and an exceptional 90.2% on HumanEval coding tasks, GPT-4o demonstrates remarkable versatility across diverse application domains.

The model's 128K token context window opens up possibilities for complex document analysis and multi-turn conversations that were previously impractical. This expanded context has proven particularly valuable in legal document review, where firms report 70% reduction in time spent on initial contract analysis. Similarly, software development teams leveraging GPT-4o for code review and generation report average productivity gains of 45%, with some teams documenting even higher improvements for specific tasks like unit test generation and documentation writing.

The multimodal capabilities of GPT-4o, encompassing text, vision, and audio processing, create opportunities for innovative applications that transcend traditional text-based AI interactions. Retail companies have successfully deployed GPT-4o for visual inventory management, achieving 92% accuracy in product identification and categorization from smartphone images. The audio capabilities have revolutionized customer service operations, with real-time translation and transcription reducing average call handling times by 35% in multilingual support centers.

Pricing Model Deep Dive: Optimizing Your Investment

Understanding the nuances of token-based pricing is essential for optimizing AI costs. A token, roughly equivalent to 4 characters in English text, forms the fundamental unit of AI API billing. However, the relationship between user-facing functionality and token consumption isn't always intuitive. Code often consumes more tokens per character than natural language, while formatted data like JSON can consume tokens at rates 2-3x higher than plain text.
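For quick budgeting, the 4-characters-per-token rule of thumb can be turned into a rough estimator. This is only a sketch: it is not a real tokenizer, the function names are illustrative, and actual counts for code or JSON will typically run higher than it predicts.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English prose


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Rough token estimate from character count; not a real tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def estimate_input_cost(text: str, usd_per_million: float = 2.50) -> float:
    """Approximate USD input cost, defaulting to the GPT-4o input price."""
    return estimate_tokens(text) * usd_per_million / 1_000_000


prompt = "Summarize the attached quarterly report in three bullet points."
print(estimate_tokens(prompt), "tokens (estimated)")
```

For billing-grade numbers, count tokens with the provider's own tokenizer or read the usage figures returned with each API response.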

Smart optimization strategies can dramatically reduce costs without compromising functionality. Prompt engineering remains the most underutilized cost optimization technique. By crafting more efficient prompts that achieve the same results with fewer tokens, organizations routinely achieve 20-30% cost reductions. For example, replacing verbose instructions with concise, structured prompts can cut input token usage significantly while often improving response quality.

Context window management presents another optimization opportunity. While GPT-4o's 128K context window enables powerful applications, unnecessarily large contexts increase costs linearly. Implementing intelligent context pruning, where only relevant information is included in each request, can reduce costs by 40-60% in document processing applications. Advanced implementations use embedding-based retrieval to dynamically select only the most relevant context for each query.
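The pruning idea can be sketched as a ranking step that keeps only the chunks most similar to the query. To stay self-contained, this example substitutes a simple bag-of-words cosine similarity for real embeddings; a production system would swap in vectors from an embedding model. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a stand-in for a real embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_context(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks ranked by similarity to the query."""
    q = bag_of_words(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)
    return ranked[:top_k]


chunks = [
    "The termination clause allows either party to exit with 30 days notice.",
    "Payment is due within 45 days of invoice.",
    "The office cafeteria serves lunch at noon.",
]
print(select_context("What is the termination notice period?", chunks, top_k=1))
```

Only the selected chunks are sent with the request, so input cost scales with `top_k` rather than with the size of the full document set.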

The choice between GPT-4o and GPT-4o-mini requires careful consideration of use case requirements. Applications handling customer inquiries, basic content generation, or simple data extraction often perform identically on both models, making the 94% cost savings of GPT-4o-mini compelling. Reserve the full GPT-4o model for tasks requiring complex reasoning, extensive context utilization, or multimodal processing.

Enterprise Use Cases: Where GPT-4o Delivers ROI

The versatility of GPT-4o has enabled transformative applications across industries, with measurable ROI justifying the API costs. In financial services, leading institutions have deployed GPT-4o for real-time risk analysis and report generation. One major investment bank reported that their GPT-4o-powered research assistant reduced analyst report preparation time from 8 hours to 2 hours, while improving consistency and identifying insights that human analysts had missed. With analysts costing $150-300 per hour, the ROI becomes immediately apparent.
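The labor arithmetic behind that claim is straightforward. The sketch below uses only the figures quoted above (8 hours reduced to 2, at $150-300 per hour); the helper name is illustrative, and API costs are left out.

```python
def labor_savings(hours_before: float, hours_after: float, hourly_rate: float) -> float:
    """USD saved per report from reduced analyst time."""
    return (hours_before - hours_after) * hourly_rate


low = labor_savings(8, 2, 150)
high = labor_savings(8, 2, 300)
print(f"Savings per report: ${low:,.0f} to ${high:,.0f}")
```

At these rates each report frees up $900 to $1,800 of analyst time, against an API cost that is typically a small fraction of that.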

Customer support represents another domain where GPT-4o has delivered exceptional value. Unlike traditional chatbots, GPT-4o-powered support systems can handle complex, multi-step troubleshooting with 85% first-contact resolution rates. A telecommunications company implementing GPT-4o for tier-1 support reported $2.3 million in annual savings from reduced call center volume, while simultaneously improving customer satisfaction scores by 23%.

In healthcare, GPT-4o's multimodal capabilities have enabled innovative applications in medical imaging analysis and patient communication. Radiologists using GPT-4o-assisted screening report 30% faster image review times with improved detection rates for certain conditions. The model's ability to explain findings in patient-friendly language has also improved patient understanding and compliance with treatment plans.

Software development teams have perhaps seen the most dramatic productivity gains. Beyond simple code generation, GPT-4o excels at understanding complex codebases, suggesting optimizations, and identifying potential bugs. Development teams report that GPT-4o-assisted code reviews catch 40% more issues than traditional manual reviews, while reducing review time by 60%. The compound effect of faster development cycles and fewer production bugs delivers ROI that often exceeds 300% within the first year.

Cost Optimization Strategies: Maximizing Value

Effective cost management requires a multi-faceted approach combining technical optimizations with strategic model selection. Implementing a tiered model strategy, where different tasks route to appropriate models based on complexity, can reduce costs by 50-70% compared to using premium models exclusively. For instance, initial query classification can use GPT-4o-mini, with only complex queries escalating to the full GPT-4o model.
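A tiered strategy can be sketched as a small routing function. The text suggests using GPT-4o-mini itself as the classifier; to keep this example self-contained, a keyword-and-length heuristic stands in for that step. The hint list, the threshold, and `pick_model` are all hypothetical.

```python
# Phrases that, for this sketch, signal a query needing deeper reasoning.
COMPLEX_HINTS = ("analyze", "compare", "explain why", "step by step", "refactor")


def pick_model(query: str, max_simple_chars: int = 500) -> str:
    """Route long or reasoning-heavy queries to gpt-4o, the rest to gpt-4o-mini."""
    text = query.lower()
    if len(text) > max_simple_chars or any(hint in text for hint in COMPLEX_HINTS):
        return "gpt-4o"
    return "gpt-4o-mini"


print(pick_model("What are your opening hours?"))
print(pick_model("Compare these two contracts and explain why clause 4 differs."))
```

In practice the heuristic would be replaced by a cheap model call or a trained classifier, with routing decisions logged so that misrouted queries can be reviewed.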

Batch processing presents another significant optimization opportunity. By aggregating similar requests and processing them together, organizations can reduce overhead and improve throughput. This approach works particularly well for non-real-time applications like content generation, data analysis, and report creation. Some enterprises have achieved 30% cost reductions through intelligent batching strategies.
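One simple way to aggregate similar requests is to group them by task type and split each group into fixed-size batches. This is a minimal sketch; the `(task, payload)` request shape and the `group_into_batches` helper are assumptions made for illustration, and how each batch is then submitted depends on the provider.

```python
from collections import defaultdict


def group_into_batches(requests: list[tuple[str, str]], batch_size: int):
    """Group (task, payload) pairs by task, then chunk each group."""
    by_task = defaultdict(list)
    for task, payload in requests:
        by_task[task].append(payload)

    batches = []
    for task, payloads in by_task.items():
        for start in range(0, len(payloads), batch_size):
            batches.append((task, payloads[start:start + batch_size]))
    return batches


requests = [
    ("summarize", "doc A"),
    ("translate", "doc B"),
    ("summarize", "doc C"),
    ("summarize", "doc D"),
]
for task, payloads in group_into_batches(requests, batch_size=2):
    print(task, payloads)
```

Each batch can share one set of instructions, so the fixed prompt overhead is paid once per batch instead of once per item.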

Caching frequently requested information dramatically reduces API calls for applications with predictable query patterns. Implementing semantic similarity matching allows systems to return cached responses for conceptually similar queries, extending cache hit rates beyond exact matches. Advanced caching systems achieve 40-60% cache hit rates, directly translating to equivalent cost savings.
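A similarity-based cache can be sketched in a few lines. Real systems usually compare embedding vectors; here a word-overlap (Jaccard) score stands in for semantic similarity so the example runs without external services. The class name and the 0.6 threshold are illustrative assumptions.

```python
import re


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity in [0, 1]; a stand-in for embedding similarity."""
    ta, tb = _tokens(a), _tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 1.0


class SimilarityCache:
    """Returns a cached response when a new query is close enough to an old one."""

    def __init__(self, threshold: float = 0.6):
        self.threshold = threshold
        self.entries: list[tuple[str, str]] = []  # (query, response)

    def get(self, query: str):
        best = max(self.entries, key=lambda e: jaccard(query, e[0]), default=None)
        if best is not None and jaccard(query, best[0]) >= self.threshold:
            return best[1]
        return None  # cache miss: caller should hit the API, then put()

    def put(self, query: str, response: str) -> None:
        self.entries.append((query, response))


cache = SimilarityCache()
cache.put("How do I reset my password?", "Use the reset link on the login page.")
print(cache.get("How can I reset my password?"))
print(cache.get("What is your refund policy?"))
```

The threshold trades hit rate against the risk of serving a mismatched answer, so it is worth tuning against logged queries before relying on it.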

For organizations seeking additional cost advantages and simplified billing, API aggregation services provide compelling value. Services like LaoZhang-AI offer unified access to multiple AI models including GPT-4o, Claude, and Gemini through a single API endpoint. With competitive pricing and a free trial for developers, these services simplify multi-model deployments while often providing cost advantages through volume pricing and optimized routing.

Looking Ahead: The Evolution of AI Pricing

The launch of GPT-4.1 in 2025 signals the continued evolution of AI capabilities and pricing models. With input costs of $2.00 per million tokens and output at $8.00 per million tokens, GPT-4.1's list prices sit 20% below GPT-4o's ($2.50 and $10.00), and OpenAI has cited savings of about 26% on median queries, while delivering superior performance. The expanded context window, supporting up to 1 million tokens, opens new possibilities for document processing and long-form content analysis.
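As a quick check on the list prices quoted in this article, the reduction from GPT-4o to GPT-4.1 can be computed directly. This sketch covers list prices only; any larger effective saving would depend on factors such as caching discounts, which are not modeled here.

```python
def percent_reduction(old_price: float, new_price: float) -> float:
    """Percentage drop from old_price to new_price."""
    return (old_price - new_price) / old_price * 100


input_cut = percent_reduction(2.50, 2.00)    # GPT-4o vs GPT-4.1 input price
output_cut = percent_reduction(10.00, 8.00)  # GPT-4o vs GPT-4.1 output price
print(f"input: {input_cut:.0f}% lower, output: {output_cut:.0f}% lower")
```

Both input and output list prices come out 20% lower.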

The trend toward more affordable AI is accelerating, driven by infrastructure improvements, competition, and economies of scale. However, this commoditization of basic AI capabilities is coinciding with the emergence of specialized, premium models for specific domains. We're witnessing a bifurcation of the market, where general-purpose models become increasingly affordable while specialized models command premium prices for superior performance in narrow domains.

Organizations must prepare for this evolving landscape by building flexible AI architectures that can adapt to new models and pricing structures. Avoiding vendor lock-in through standardized interfaces and maintaining the ability to switch between models based on performance and cost requirements will become increasingly important competitive advantages.
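One way to keep that flexibility is to have application code depend on a small interface rather than on a specific vendor SDK. The sketch below uses Python's `typing.Protocol`; `ChatModel`, `EchoModel`, and `answer` are hypothetical names, and `EchoModel` is a stand-in where a real backend would wrap a provider's client library.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface the application depends on."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend for illustration; a real one would call a provider SDK."""

    def __init__(self, name: str):
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def answer(model: ChatModel, prompt: str) -> str:
    """Application code sees only the ChatModel interface."""
    return model.complete(prompt)


# Swapping providers means swapping the object passed in, not the call sites.
print(answer(EchoModel("gpt-4o"), "Summarize this contract."))
print(answer(EchoModel("claude-sonnet-4"), "Summarize this contract."))
```

Because call sites reference only `ChatModel`, moving a workload to a cheaper or better model becomes a configuration change rather than a rewrite.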

Making the Right Choice for Your Organization

Selecting the optimal AI model and pricing tier requires careful analysis of your specific use cases, volume requirements, and performance needs. Start by auditing your current or planned AI applications, categorizing them by complexity, volume, and performance requirements. This analysis often reveals that a mixed-model approach delivers the best balance of cost and performance.

Consider total cost of ownership beyond raw API pricing. Integration complexity, reliability, support quality, and feature availability all impact the true cost of AI implementation. GPT-4o's mature ecosystem, extensive documentation, and proven reliability often justify its pricing premium for mission-critical applications.

For organizations beginning their AI journey, starting with GPT-4o-mini for initial prototypes and scaling to GPT-4o for production workloads provides a low-risk path to AI adoption. The compatible API structure allows seamless transitions between models as requirements evolve. Many successful implementations begin with the mini model for 80% of queries, escalating only complex cases to the full model.
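The effect of an 80/20 split on average price can be estimated with a weighted average. This sketch assumes, for simplicity, that simple and complex queries use similar token counts, which the article does not state; the prices are those quoted earlier and the helper name is illustrative.

```python
def blended_price(mini_share: float, mini_price: float, full_price: float) -> float:
    """Average USD per million tokens when mini_share of traffic goes to the mini model."""
    return mini_share * mini_price + (1 - mini_share) * full_price


input_blend = blended_price(0.8, 0.15, 2.50)    # about $0.62 per million input tokens
output_blend = blended_price(0.8, 0.60, 10.00)  # about $2.48 per million output tokens

input_saving = (1 - input_blend / 2.50) * 100
print(f"blended input: ${input_blend:.2f}/M, output: ${output_blend:.2f}/M")
print(f"saving vs. GPT-4o only: {input_saving:.1f}%")
```

Under these assumptions the blended price is roughly a quarter of the GPT-4o-only price, though the real figure shifts if escalated queries are much longer than routine ones.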

Remember that API costs typically represent only 20-30% of total AI project costs. Development time, infrastructure, monitoring, and maintenance often dominate budgets. Choosing a stable, well-supported platform like GPT-4o can reduce these auxiliary costs significantly, improving overall project ROI even if the API costs are slightly higher than alternatives.

Conclusion: Navigating the AI API Economy

The GPT-4o pricing structure in 2025 represents a mature, balanced approach to AI accessibility. With costs 68% lower than the original GPT-4 while maintaining exceptional performance across diverse tasks, GPT-4o has emerged as the pragmatic choice for organizations seeking to scale AI implementations without breaking budgets. The availability of GPT-4o-mini further extends accessibility, enabling high-volume applications that were economically unfeasible just two years ago.

Success in the AI API economy requires more than choosing the cheapest option. Understanding the nuanced trade-offs between different models, implementing intelligent optimization strategies, and maintaining flexibility to adapt as the landscape evolves will separate leaders from laggards. Organizations that master these elements will find AI transformation not just technologically feasible but economically compelling.

As we look toward the remainder of 2025 and beyond, the trajectory is clear: AI capabilities will continue advancing while costs decline. However, the organizations that capture the most value won't be those waiting for prices to fall further, but those building expertise and infrastructure today. With the right approach to model selection, cost optimization, and strategic implementation, GPT-4o provides a powerful foundation for AI-driven innovation that delivers measurable business value.

Whether you're processing millions of customer queries, analyzing vast document repositories, or building the next generation of AI-powered applications, understanding and optimizing GPT-4o pricing is essential for sustainable success. Start with clear use cases, implement thoughtful optimization strategies, and continuously monitor and adjust your approach as your needs and the market evolve. The organizations that master this balance will find themselves well-positioned to capitalize on the tremendous opportunities that AI presents, today and in the future.

For developers and organizations ready to begin their GPT-4o journey with additional cost advantages, consider exploring unified API gateways like LaoZhang-AI, which provide seamless access to GPT-4o alongside other leading models, often with promotional credits to help you get started. The future of AI is not just about choosing the right model – it's about building the right strategy for sustainable, scalable AI implementation.