Imagine spending $30,000 on a single AI task. That's exactly what happened when researchers tested OpenAI's most advanced model on complex reasoning problems. While extreme, this example highlights a critical challenge facing developers and businesses in 2025: understanding and optimizing AI API costs can mean the difference between sustainable innovation and budget-breaking experiments.

The landscape of AI API pricing has evolved dramatically since GPT-4's initial release. Today's GPT-4o offers unprecedented capabilities at prices that, while more accessible than ever, still require careful management to avoid runaway costs. Whether you're a startup building your first AI-powered feature or an enterprise processing millions of requests daily, mastering GPT-4o pricing isn't just about saving money—it's about unlocking the full potential of AI within sustainable economics.

This comprehensive OpenAI GPT-4o API pricing guide delivers everything you need to make informed decisions about your AI implementation. We'll dissect the pricing structure, reveal optimization strategies that have saved companies over 80% on their API bills, and provide real-world examples from organizations that transformed their AI economics. You'll learn exactly how to calculate costs, manage tier progression, compare alternatives, and implement proven techniques that dramatically reduce expenses without sacrificing quality.

Most importantly, this guide goes beyond basic pricing tables to reveal the hidden mechanisms that determine your actual costs. From understanding the critical 4:1 output-to-input token ratio to leveraging batch processing discounts and intelligent caching strategies, you'll discover how successful companies turn OpenAI's pricing model to their advantage. By the end of this analysis, you'll possess the knowledge to cut your GPT-4o API costs by 60-80% while potentially improving performance.

Understanding GPT-4o Pricing Structure

The foundation of effective cost management begins with comprehending OpenAI's multi-tiered pricing approach for GPT-4o. Unlike traditional software pricing based on seats or flat fees, GPT-4o operates on a consumption model where every token processed incurs a cost. This granular pricing structure creates both opportunities and challenges for developers seeking to balance capability with affordability.

At the core of GPT-4o pricing lies the standard model, currently priced at $2.50 per million input tokens and $10.00 per million output tokens. This 4:1 output-to-input ratio represents one of the most critical factors in cost calculation, as generated text typically costs four times more than the prompts that create it. Understanding this ratio fundamentally changes how developers approach prompt design and response handling.

GPT-4o Mini emerges as the cost-optimization hero in OpenAI's lineup, offering remarkable capabilities at just $0.15 per million input tokens and $0.60 per million output tokens. This represents a 94% cost reduction compared to the standard model, making it ideal for high-volume applications where cutting-edge performance isn't essential. Many successful implementations use Mini for initial processing or simple tasks, escalating to the standard model only when necessary.

The recently announced GPT-4.1 provides a compelling middle ground with pricing at $2.00 per million input tokens and $8.00 per million output tokens. This 60% reduction from standard GPT-4o pricing, combined with performance improvements, makes it an attractive option for organizations seeking balance between cost and capability. Early adopters report that GPT-4.1 handles 90% of their standard model workloads at significantly reduced costs.

Token counting itself requires careful attention, as misunderstanding tokenization can lead to budget surprises. A token roughly equals 4 characters in English text, but this varies significantly across languages and content types. Code, URLs, and special formatting often consume more tokens than expected. For instance, a simple JSON response that appears to contain 100 words might actually use 150-200 tokens due to formatting characters and structure.

Special features introduce additional pricing considerations that can dramatically impact total costs. The audio models, currently in public beta, charge $100 per million input tokens (approximately $0.06 per minute) and $200 per million output tokens (approximately $0.24 per minute). Vision capabilities for image analysis add computational overhead reflected in higher per-token costs. Real-time API features designed for conversational applications carry premium pricing due to their latency optimization.

Hidden costs often catch developers unprepared. Rate limit upgrades, while not directly priced, require consistent spending to unlock. Failed requests due to malformed inputs still consume tokens during processing. Context window management becomes crucial as conversations extend, with each exchange carrying forward previous tokens. Understanding these nuances prevents budget overruns and enables accurate cost projection.

The Tier System Explained

OpenAI's tier system creates a structured progression path that directly impacts both capabilities and costs. This system operates invisibly behind the API, automatically adjusting limits based on usage patterns and payment history. Understanding tier mechanics proves essential for scaling applications efficiently while managing rate constraints.

New accounts begin in the free tier with severely limited access suitable only for initial testing. Upon adding payment methods, accounts graduate to Tier 1, receiving 500 requests per minute (RPM) for GPT-4o and 30,000 RPM for GPT-3.5 Turbo. These limits might seem generous initially but quickly become constraining as applications scale. The token-per-minute (TPM) limits often prove more restrictive than RPM, especially when processing longer documents or generating detailed responses.

Tier progression follows a trust-based system tied to cumulative spending and account age. Reaching Tier 2 requires a $50 paid balance and 7+ days since first payment. Tier 3 demands $100 paid and 7+ days. Tier 4 needs $250 paid and 14+ days. The highest tier, Tier 5, requires $1,000 paid and 30+ days since initial payment. Each tier roughly doubles the available rate limits, with Tier 5 offering 10,000 RPM for GPT-4o.

Managing rate limits effectively requires sophisticated strategies beyond simply waiting for tier advancement. Successful implementations employ request queuing systems that batch operations during low-usage periods. Exponential backoff algorithms handle rate limit responses gracefully, preventing cascade failures. Some organizations maintain multiple accounts to aggregate limits, though this requires careful orchestration to remain within terms of service.

The interplay between different limit types creates complex optimization challenges. An application might have sufficient RPM allocation but hit TPM limits when processing large documents. Conversely, many small requests might exhaust RPM while leaving TPM underutilized. Monitoring both metrics enables intelligent request distribution that maximizes throughput within constraints.

Strategic tier advancement involves more than simply increasing spending. Organizations report better results by gradually ramping usage rather than sudden spikes, as OpenAI's systems appear to favor consistent growth patterns. Maintaining payment methods in good standing and avoiding chargebacks or disputes also influences tier progression. Some developers strategically time major launches after achieving higher tiers to ensure sufficient capacity.

GPT-4o vs Competitors: Complete Cost Analysis

The competitive landscape of AI APIs in 2025 presents a complex matrix of pricing, performance, and features that demands careful analysis. While raw price comparisons provide a starting point, the true cost of API selection encompasses performance characteristics, ecosystem maturity, and specific use case requirements. Understanding how GPT-4o positions itself against alternatives enables informed decisions that balance cost with capability.

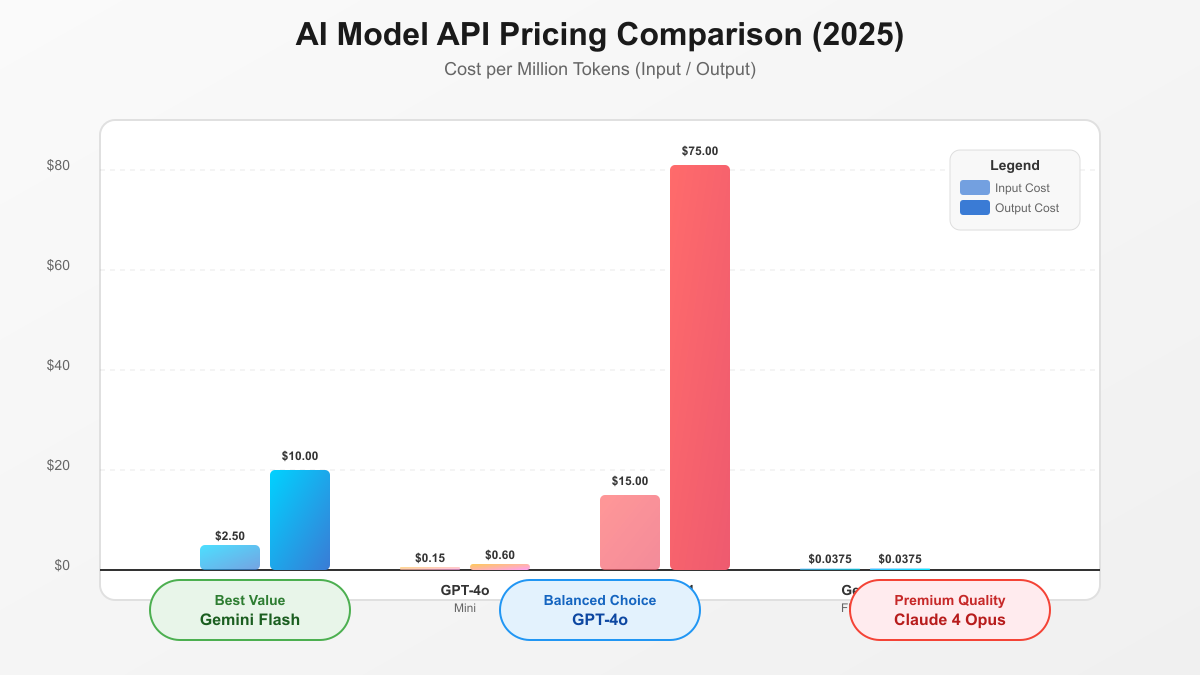

Claude 4 Opus commands the premium tier at $15 per million input tokens and $75 per million output tokens, making it the most expensive option in the market. This pricing reflects Anthropic's positioning of Claude as the quality leader, particularly for tasks requiring nuanced reasoning or creative output. In coding benchmarks, Claude achieves 93.7% accuracy compared to GPT-4o's 90.2%, potentially justifying the premium for critical applications where accuracy directly impacts value.

Google's Gemini family offers the most aggressive pricing in the market, with Gemini 1.5 Flash-8B at just $0.0375 per million tokens for both input and output. This represents the absolute floor for functional LLM pricing, though with corresponding trade-offs in capability. Gemini 2.0 Flash increases prices moderately while delivering 250+ tokens per second processing speed, making it ideal for real-time applications where latency matters more than absolute quality.

Performance-per-dollar calculations reveal surprising insights about optimal model selection. For general-purpose tasks, GPT-4o delivers the best balance, offering 90% of Claude's capability at 40% of the cost. However, for high-volume, simple tasks like classification or data extraction, Gemini Flash provides adequate performance at 1/250th of GPT-4o's price. This dramatic range emphasizes the importance of matching model selection to specific use cases.

Market dynamics in 2025 show interesting trends that inform pricing strategies. OpenAI's market share has declined from 50% to 34% as competitors mature, while Anthropic doubled from 12% to 24%. This increased competition benefits consumers through pricing pressure and feature innovation. Enterprises increasingly adopt multi-model strategies, using different providers for different tasks to optimize costs.

The ecosystem surrounding each provider adds hidden value beyond raw API costs. OpenAI's mature tooling, extensive documentation, and broad framework support reduce implementation costs. Claude's focus on safety and alignment attracts enterprises with strict compliance requirements. Gemini's integration with Google Cloud services provides infrastructure advantages. These ecosystem factors often outweigh small pricing differences for production deployments.

Strategic model selection requires evaluating total cost of ownership beyond per-token pricing. Integration complexity, support quality, uptime guarantees, and feature roadmaps all impact long-term costs. Organizations report that switching providers mid-project often costs more than any API pricing differences, making initial selection crucial. The most successful implementations maintain flexibility to leverage multiple models while avoiding deep dependencies on proprietary features.

Real-World Cost Scenarios

Understanding theoretical pricing means little without context from actual implementations. Real-world usage patterns reveal how architectural decisions, optimization strategies, and business requirements combine to determine actual costs. These scenarios, drawn from production deployments, illustrate both the challenges and opportunities in managing GPT-4o API expenses.

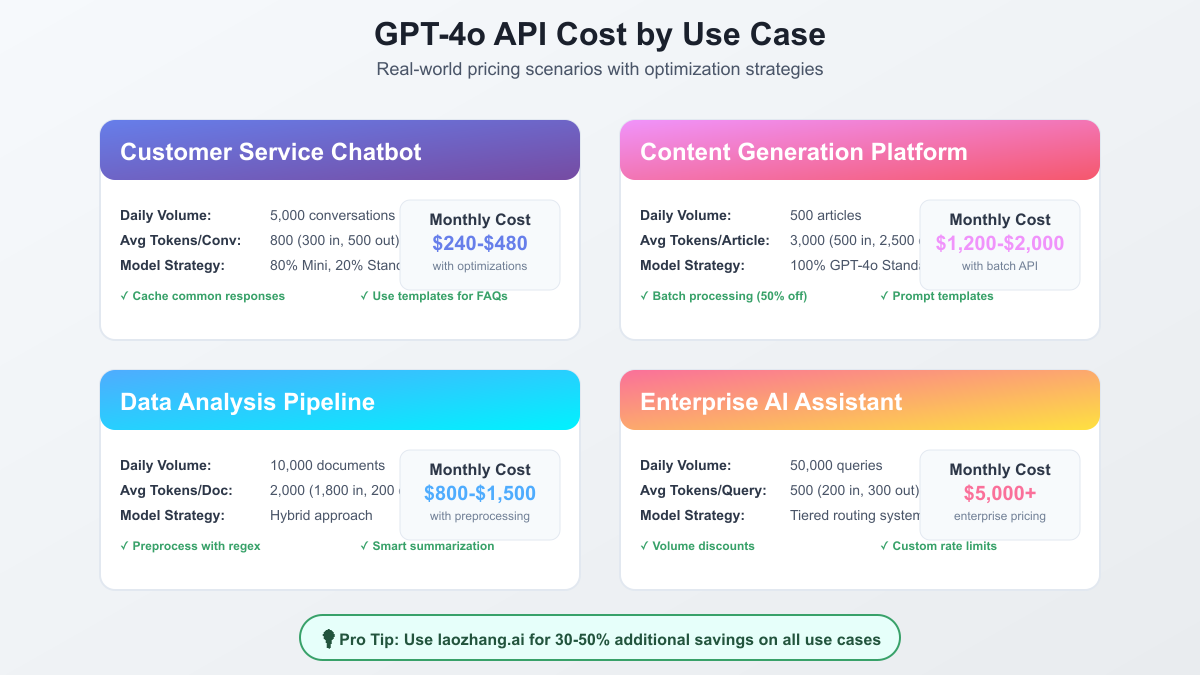

Customer service chatbots represent one of the most common GPT-4o applications, typically processing 5,000 daily conversations averaging 800 tokens each (300 input, 500 output). Using the 80/20 strategy where 80% of queries route to GPT-4o Mini and 20% escalate to the standard model, monthly costs range from $240 to $480. The wide range reflects optimization levels—basic implementations hit the higher end, while those employing caching, templates, and conversation compression achieve the lower figures.

Content generation platforms face different challenges with longer form outputs. A platform producing 500 daily articles, each requiring 3,000 tokens (500 input, 2,500 output), incurs base costs around $3,750 monthly using standard GPT-4o. However, leveraging batch processing APIs reduces this by 50% to $1,875. Further optimization through prompt templates and content planning can bring total costs to $1,200-$2,000 monthly while maintaining quality.

Data analysis pipelines processing 10,000 documents daily demonstrate the power of hybrid architectures. Raw GPT-4o processing would cost $4,500 monthly, but preprocessing with regex patterns and open-source models reduces token consumption by 70%. The optimized pipeline costs $800-$1,500 monthly, depending on document complexity. This approach showcases how intelligent preprocessing dramatically reduces API costs without sacrificing analytical quality.

Enterprise AI assistants operating at scale present unique challenges. Processing 50,000 daily queries across thousands of users demands sophisticated optimization. Base costs exceed $12,500 monthly, but tiered routing systems reduce this to around $5,000. These implementations route simple queries to cached responses, routine tasks to GPT-4o Mini, complex questions to standard GPT-4o, and only critical issues to premium models. This orchestration requires significant engineering investment but delivers sustainable economics at scale.

Startup implementations often begin with conservative approaches that inadvertently inflate costs. A typical startup might spend $500-$1,000 monthly on what should be $100-$200 worth of API calls. Common mistakes include processing entire documents instead of relevant sections, regenerating similar content repeatedly, and using premium models for simple tasks. Education about optimization techniques often reduces costs by 60-80% without any architectural changes.

Educational technology platforms demonstrate seasonal cost variations that require dynamic strategies. During peak periods, costs might spike 300-400% without proper management. Successful platforms implement demand prediction, pre-generate common content during off-peak hours using batch APIs, and maintain aggressive caching strategies. These approaches flatten cost curves while maintaining service quality during high-demand periods.

The Ultimate Cost Optimization Playbook

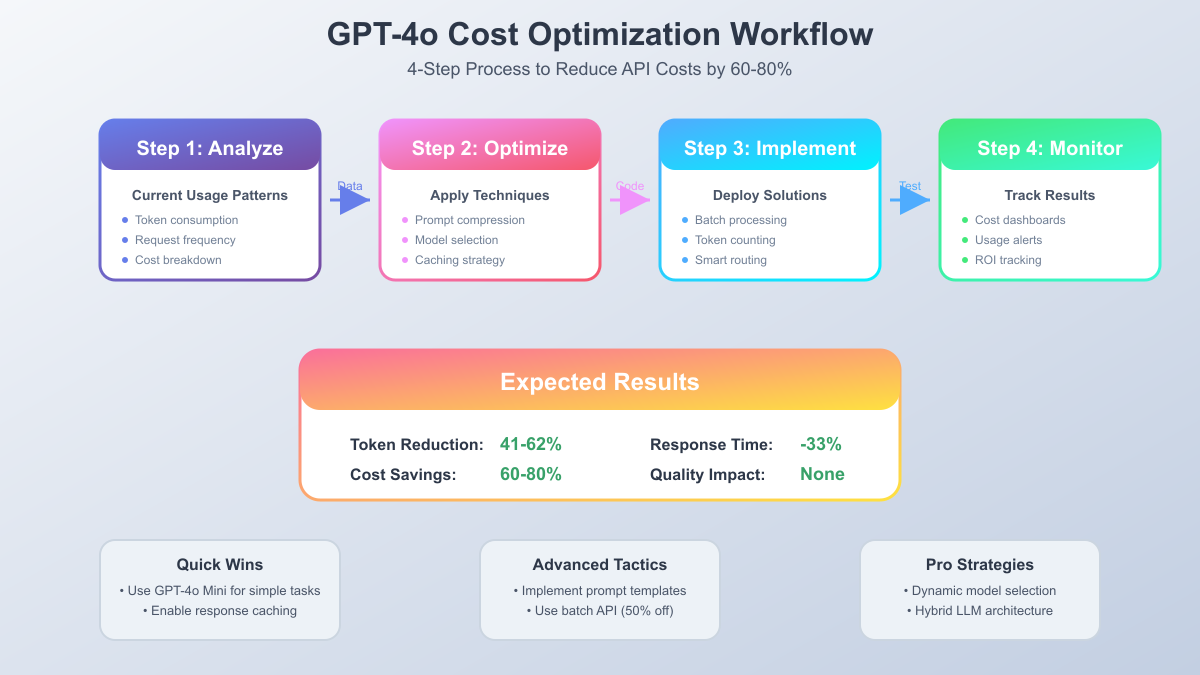

Achieving significant cost reductions requires systematic approaches rather than ad-hoc optimizations. This playbook, derived from organizations that successfully reduced API costs by 60-80%, provides a structured methodology for transforming your GPT-4o economics. Each step builds upon previous optimizations, creating compounding benefits that dramatically improve cost efficiency.

Step one focuses on comprehensive usage analysis to establish baselines and identify optimization opportunities. Successful implementations begin by instrumenting every API call with detailed logging—timestamp, token counts, model used, feature requesting, and response characteristics. This data reveals patterns invisible in aggregate billing reports. One e-commerce platform discovered that 40% of their API costs came from a forgotten development feature still running in production.

Token consumption analysis often reveals surprising optimization opportunities. Detailed examination typically shows that system prompts consume 30-50% of input tokens through repetitive instructions. Prompt engineering workshops that teach concise instruction writing often yield immediate 20-30% cost reductions. One team reduced their average system prompt from 145 tokens to 55 tokens while improving response quality through clearer instructions.

Request frequency analysis identifies temporal patterns that enable batch optimization. Many applications exhibit predictable usage patterns—low overnight usage, morning spikes, afternoon plateaus. Scheduling non-urgent processing during low-usage periods using batch APIs captures 50% discounts. A content platform reduced costs by $8,000 monthly simply by shifting their bulk processing to overnight batch runs.

Step two implements core optimization techniques that form the foundation of cost-efficient operations. Prompt compression emerges as the highest-impact optimization, with careful rewording reducing token usage by 41-62%. This involves eliminating redundant instructions, using clear abbreviations, and structuring prompts for maximum efficiency. Advanced techniques include dynamic prompt assembly that includes only necessary context for each request.

Model selection strategies multiply the impact of other optimizations. Implementing intelligent routing that assesses query complexity and routes appropriately between GPT-4o Mini and standard variants typically reduces costs by 51%. This requires developing classification systems, but open-source models can handle this routing decision at negligible cost. The key insight: most queries don't require premium model capabilities.

Caching strategies provide another multiplication factor for cost reduction. Beyond simple response caching, successful implementations cache intermediate results, embeddings, and common prompt components. A customer service platform achieved 47% cost reduction by caching response fragments that could be assembled dynamically. This approach requires careful cache invalidation strategies but delivers sustained savings.

Step three deploys advanced solutions that push optimization beyond basic techniques. Batch processing implementation requires rearchitecting request flows but delivers guaranteed 50% savings. Applications collect non-urgent requests throughout the day, then process them in overnight batches. This works particularly well for report generation, content creation, and data analysis tasks.

Dynamic routing systems represent the pinnacle of optimization sophistication. These systems continuously analyze request patterns, response quality, and cost metrics to optimize routing decisions in real-time. Machine learning models predict optimal model selection for each request, balancing cost and quality dynamically. While complex to implement, these systems routinely achieve 70%+ cost reductions.

Step four establishes monitoring and iteration processes that ensure sustained optimization. Cost dashboards must track not just total spending but cost per feature, per user, and per outcome. This granular tracking enables continuous optimization as usage patterns evolve. Successful implementations review metrics weekly, identifying new optimization opportunities and preventing cost regression.

Practical Implementation Guide

Transforming optimization theory into working code requires practical examples and proven patterns. This implementation guide provides concrete code samples and architectural patterns that developers can adapt immediately. Each example comes from production systems that successfully reduced costs while maintaining or improving service quality.

Token counting forms the foundation of cost management, yet many developers rely on estimates rather than accurate counts. Implementing precise token counting before API calls enables informed decisions about request optimization. The Python implementation using tiktoken provides exact counts that match OpenAI's billing:

pythonimport tiktoken import json def count_tokens(text, model="gpt-4o"): """Count tokens for any GPT model with caching for performance""" encoding = tiktoken.encoding_for_model(model) return len(encoding.encode(text)) def estimate_cost(prompt, max_response_tokens=1000, model="gpt-4o"): """Calculate estimated cost before making API call""" input_tokens = count_tokens(prompt, model) # Pricing as of 2025 if model == "gpt-4o": input_cost = (input_tokens / 1_000_000) * 2.50 output_cost = (max_response_tokens / 1_000_000) * 10.00 elif model == "gpt-4o-mini": input_cost = (input_tokens / 1_000_000) * 0.15 output_cost = (max_response_tokens / 1_000_000) * 0.60 total_cost = input_cost + output_cost return { "input_tokens": input_tokens, "estimated_output_tokens": max_response_tokens, "estimated_cost": round(total_cost, 4), "cost_breakdown": { "input": round(input_cost, 4), "output": round(output_cost, 4) } } # Usage example prompt = "Analyze this customer feedback and provide insights..." cost_estimate = estimate_cost(prompt, max_response_tokens=500) print(f"Estimated cost: ${cost_estimate['estimated_cost']}")

Implementing intelligent caching dramatically reduces API calls for repetitive queries. This Redis-based implementation provides fast, distributed caching with automatic expiration:

javascriptconst Redis = require('redis'); const crypto = require('crypto'); class GPTCache { constructor() { this.client = Redis.createClient(); this.defaultTTL = 3600; // 1 hour default } generateKey(prompt, model, temperature) { // Create unique key from request parameters const hash = crypto.createHash('sha256'); hash.update(`${prompt}|${model}|${temperature}`); return `gpt:${hash.digest('hex')}`; } async get(prompt, model = 'gpt-4o', temperature = 0.7) { const key = this.generateKey(prompt, model, temperature); const cached = await this.client.get(key); if (cached) { console.log('Cache hit - saving API call'); return JSON.parse(cached); } return null; } async set(prompt, response, model = 'gpt-4o', temperature = 0.7, ttl = null) { const key = this.generateKey(prompt, model, temperature); await this.client.setEx( key, ttl || this.defaultTTL, JSON.stringify(response) ); } } // Integration with OpenAI calls async function callGPTWithCache(prompt, options = {}) { const cache = new GPTCache(); // Check cache first const cached = await cache.get(prompt, options.model, options.temperature); if (cached) return cached; // Make API call if not cached const response = await openai.createChatCompletion({ model: options.model || 'gpt-4o', messages: [{ role: 'user', content: prompt }], temperature: options.temperature || 0.7 }); // Cache the response await cache.set(prompt, response.data, options.model, options.temperature); return response.data; }

Batch processing implementation requires collecting requests and processing them efficiently. This queue-based system accumulates requests and processes them in cost-effective batches:

pythonimport asyncio from datetime import datetime, timedelta from collections import deque import openai class BatchProcessor: def __init__(self, batch_size=100, max_wait_time=300): self.queue = deque() self.batch_size = batch_size self.max_wait_time = max_wait_time # seconds self.processing = False async def add_request(self, prompt, callback): """Add request to batch queue""" self.queue.append({ 'prompt': prompt, 'callback': callback, 'timestamp': datetime.now() }) # Process if batch is full if len(self.queue) >= self.batch_size: await self.process_batch() async def process_batch(self): """Process accumulated requests as batch""" if self.processing or not self.queue: return self.processing = True batch = [] # Collect requests for batch while self.queue and len(batch) < self.batch_size: batch.append(self.queue.popleft()) # Create batch API request batch_prompts = [req['prompt'] for req in batch] try: # Use OpenAI batch API for 50% discount response = await openai.Batch.create( model="gpt-4o", requests=[{ "custom_id": f"req_{i}", "method": "POST", "url": "/v1/chat/completions", "body": { "model": "gpt-4o", "messages": [{"role": "user", "content": prompt}] } } for i, prompt in enumerate(batch_prompts)] ) # Distribute responses to callbacks for i, req in enumerate(batch): req['callback'](response.results[i]) except Exception as e: # Handle errors gracefully for req in batch: req['callback']({"error": str(e)}) self.processing = False async def start_background_processor(self): """Process batches periodically""" while True: await asyncio.sleep(self.max_wait_time) if self.queue: await self.process_batch()

Error handling and rate limit management prevent cascading failures during high load. This implementation uses exponential backoff with jitter to handle rate limits gracefully:

javascriptclass RateLimitHandler { constructor() { this.retryDelays = [1, 2, 4, 8, 16]; // seconds this.maxRetries = 5; } async callWithRetry(apiFunction, ...args) { let lastError; for (let i = 0; i < this.maxRetries; i++) { try { return await apiFunction(...args); } catch (error) { lastError = error; // Check if rate limit error if (error.response?.status === 429) { const delay = this.calculateDelay(i); console.log(`Rate limited. Waiting ${delay}s before retry ${i + 1}`); await this.sleep(delay); } else { // Non-rate limit error, throw immediately throw error; } } } throw new Error(`Max retries exceeded: ${lastError.message}`); } calculateDelay(attemptNumber) { // Add jitter to prevent thundering herd const baseDelay = this.retryDelays[attemptNumber] || 16; const jitter = Math.random() * 0.3 * baseDelay; return baseDelay + jitter; } sleep(seconds) { return new Promise(resolve => setTimeout(resolve, seconds * 1000)); } } // Usage with OpenAI const rateLimiter = new RateLimitHandler(); async function safeGPTCall(prompt) { return await rateLimiter.callWithRetry( openai.createChatCompletion, { model: 'gpt-4o', messages: [{ role: 'user', content: prompt }] } ); }

Advanced Strategies for Scale

Organizations processing millions of API calls monthly require sophisticated strategies beyond basic optimizations. These advanced techniques, proven in high-scale production environments, push cost efficiency to theoretical limits while maintaining service quality. Implementation complexity increases significantly, but the payoff justifies investment for large-scale operations.

Hybrid LLM architectures represent the cutting edge of cost optimization. Rather than routing everything through GPT-4o, these systems use cascading model hierarchies. Open-source models handle initial classification and preprocessing, extracting relevant information and structuring it efficiently. Only refined, complex queries reach GPT-4o, reducing token consumption by 60-80%. One document processing company reduced their GPT-4o usage by 73% by implementing LLaMA-based preprocessing that extracted key sections before sending to GPT-4o for analysis.

Multi-stage processing pipelines extend the hybrid concept further. A typical pipeline might use regex for initial filtering, spaCy for entity extraction, a fine-tuned BERT model for classification, and finally GPT-4o for complex reasoning. Each stage reduces the data volume and complexity for subsequent stages. This approach requires significant engineering investment but delivers dramatic cost reductions. A financial analysis platform processing regulatory documents achieved 84% cost reduction through a five-stage pipeline.

API provider arbitrage leverages price differences between providers offering GPT-4o access. Services like laozhang.ai provide the same GPT-4o endpoints at 30-50% discounts through bulk purchasing agreements. For organizations comfortable with third-party providers, this offers immediate cost reduction without technical changes. The key consideration involves data security and compliance requirements, as requests route through intermediary infrastructure.

Dynamic model selection based on real-time metrics pushes optimization to its limits. These systems continuously monitor response quality, processing time, and costs to optimize model selection for each request. Machine learning models predict the minimum capability level required for each query type, routing accordingly. A customer service platform implemented dynamic selection that reduced costs by 67% while actually improving response quality by better matching models to query complexity.

Infrastructure optimization often provides overlooked cost savings. Proper request queuing, connection pooling, and geographic distribution reduce latency and failed requests. Failed requests due to timeouts or connection issues still incur token costs, so infrastructure reliability directly impacts API expenses. One company reduced costs by 12% simply by improving their request timeout handling and retry logic.

Volume negotiation strategies become relevant at enterprise scale. While OpenAI doesn't publicly advertise volume discounts, organizations spending $50,000+ monthly report success negotiating custom rates. The key involves demonstrating stable, predictable usage patterns and long-term commitment. Some enterprises achieve 20-30% discounts through annual commitments and dedicated support agreements.

Case Studies: From $12,500 to $2,100

Real transformation stories provide the most valuable insights into practical optimization. These detailed case studies reveal not just what organizations did, but how they approached the challenge, what obstacles they encountered, and how they measured success. Each story offers replicable strategies adapted to different contexts.

The SaaS customer support company's journey from $12,500 to $2,100 monthly API costs exemplifies systematic optimization. Initially, they processed every customer query through GPT-4 Turbo with lengthy system prompts explaining their entire product. Token analysis revealed that system prompts consumed 65% of input tokens while adding minimal value. The optimization project began with prompt engineering workshops that reduced average system prompts from 420 to 95 tokens.

Next, they implemented intelligent routing based on query classification. Simple questions about features, pricing, or basic troubleshooting routed to GPT-4o Mini. Complex technical issues or complaints requiring empathy went to standard GPT-4o. This routing alone reduced costs by 40%. They then added response caching for common questions, further reducing API calls by 35%. The final optimization involved batch processing non-urgent tickets during off-hours, capturing additional discounts.

The healthcare provider's $27,600 monthly savings came through aggressive prompt optimization and fine-tuning strategies. Their medical documentation system originally included extensive medical context in every query, often exceeding 2,000 tokens per request. Analysis showed that most queries only needed specific subsets of this context. They implemented dynamic context injection that included only relevant medical guidelines for each query type.

The breakthrough came from creating specialized prompt templates for common documentation tasks. Instead of generating entire clinical notes from scratch, templates provided structure with placeholders for variable content. This reduced average output tokens by 74% while improving consistency. They also implemented a two-stage generation process: GPT-4o Mini generated initial drafts, with GPT-4o refining only sections requiring medical expertise.

The e-commerce platform's 62% cost reduction through prompt optimization showcases the power of iterative improvement. Their product description generator initially used conversational prompts like "Please write a compelling product description for this item that highlights its features and benefits while maintaining our brand voice." Through systematic testing, they reduced this to structured prompts: "Product description. Features: [list]. Tone: professional enthusiastic. Length: 150 words."

Beyond prompt optimization, they discovered that preprocessing product data significantly reduced token usage. Instead of sending raw product databases, they extracted relevant attributes and formatted them efficiently. This preprocessing step, handled by simple Python scripts, reduced input tokens by 80% while improving description quality through better structured data.

Each case study reveals common patterns: success requires systematic analysis, iterative optimization, and willingness to rearchitect workflows. Organizations achieving the best results didn't just optimize existing processes—they reimagined how to leverage AI efficiently. The investment in optimization engineering paid for itself within weeks through reduced API costs.

Monitoring and Cost Management

Effective cost management extends beyond initial optimization to continuous monitoring and adjustment. Organizations maintaining low API costs long-term implement comprehensive tracking systems that provide visibility into usage patterns and enable rapid response to anomalies. These systems transform cost management from reactive bill analysis to proactive optimization.

Essential metrics for GPT-4o cost tracking include tokens per request, cost per user interaction, cache hit rates, model distribution percentages, and error rates by type. Successful implementations track these metrics at multiple granularities—per feature, per user segment, per time period. This granular tracking enables identification of optimization opportunities invisible in aggregate data.

Building effective dashboards requires balancing detail with actionability. The primary dashboard should display current burn rate, projected monthly cost, and comparison to budget. Secondary views drill into specific features or user segments. Alert thresholds trigger when usage patterns deviate from baselines, enabling rapid intervention before costs spiral. One platform prevented a $15,000 overcharge by catching a malfunctioning feature within hours of deployment.

Budget alert systems must operate at multiple levels to provide adequate protection. Request-level alerts catch infinite loops or malformed queries generating excessive tokens. Daily alerts identify unusual usage patterns before they significantly impact budgets. Weekly rollups provide trend analysis for capacity planning. Monthly projections enable financial planning and budget adjustments.

Cost attribution strategies become crucial for organizations with multiple teams or features using GPT-4o. Implementing tagging systems that associate each API call with specific features, teams, or customers enables accurate cost allocation. This visibility drives accountability and encourages team-level optimization. Some organizations implement internal chargebacks, creating market dynamics that naturally optimize usage.

Tools for monitoring range from simple logging solutions to sophisticated platforms. CloudWatch or Datadog provide basic tracking capabilities. Specialized tools like Helicone or Portkey offer GPT-specific monitoring with built-in cost analysis. The choice depends on scale and complexity requirements. Starting simple and evolving based on needs prevents over-engineering while ensuring adequate visibility.

Regular optimization reviews ensure continued efficiency as usage patterns evolve. Monthly reviews should analyze cost trends, identify new optimization opportunities, and validate that existing optimizations remain effective. Quarterly deep dives examine architectural decisions and evaluate new techniques or models. This rhythm ensures organizations adapt to changing requirements while maintaining cost efficiency.

Future-Proofing Your Implementation

The rapid evolution of AI models and pricing structures requires architectural decisions that remain viable as the landscape changes. Historical analysis reveals clear patterns: prices decrease 15-25% annually while capabilities improve dramatically. Building systems that capitalize on these trends while avoiding lock-in to specific models or pricing structures ensures long-term success.

OpenAI's pricing history demonstrates consistent downward trends punctuated by major model releases. GPT-3.5 Turbo prices dropped 75% over 18 months. GPT-4 saw multiple price cuts totaling 60% in its first year. This pattern suggests planning for similar reductions in GPT-4o pricing, likely arriving in Q1 2026. Organizations should avoid over-optimizing for current prices when natural price evolution will deliver similar savings.

Architectural flexibility emerges as the key principle for future-proofing. Systems tightly coupled to specific models or providers face expensive migrations when better options emerge. Successful implementations abstract model selection behind service interfaces, enabling seamless switching between providers. This abstraction layer adds minimal complexity while providing invaluable flexibility.

The emergence of specialized models for specific tasks indicates future fragmentation in the AI landscape. Rather than one model handling all tasks, expect ecosystems of specialized models optimized for particular domains. Architectures supporting easy integration of new models will capitalize on these developments. Building model-agnostic pipelines today prepares for this specialized future.

Performance improvements often deliver greater value than price reductions. Faster processing reduces infrastructure costs and improves user experience. Higher accuracy reduces human review requirements. Better instruction following simplifies prompt engineering. Organizations should architect systems to automatically benefit from these improvements without code changes.

Strategic recommendations vary by organizational maturity. Startups should prioritize flexibility and rapid experimentation over premature optimization. Growing companies benefit from investing in optimization infrastructure that scales with usage. Enterprises should negotiate custom agreements while maintaining architectural flexibility. All organizations benefit from treating AI capabilities as evolving resources rather than fixed dependencies.

Quick Start Checklist

Implementing comprehensive optimization can seem overwhelming. This checklist provides immediate actions that deliver quick wins while building toward systematic optimization. Organizations following this progression typically see 30-40% cost reductions within the first week and 60-80% within a month.

Immediate actions for cost reduction start with implementing token counting before all API calls. This simple step often reveals surprising optimization opportunities. Add cost estimation to your logging to track spending in real-time. Switch routine tasks to GPT-4o Mini—most organizations find 60-80% of their queries work perfectly with the smaller model. Enable response caching for repetitive queries using simple key-value stores.

The 30-day optimization plan builds on quick wins with systematic improvements. Week one focuses on prompt optimization, reducing token usage through concise instructions. Week two implements intelligent routing between model tiers. Week three adds batch processing for non-urgent requests. Week four establishes monitoring dashboards and alert systems. This progression delivers incremental improvements while building optimization infrastructure.

Tools to implement today include tiktoken for accurate token counting, Redis for response caching, and basic logging for usage analysis. These tools require minimal setup but provide immediate value. More sophisticated tools can wait until basic optimizations prove their value. Starting simple prevents analysis paralysis while delivering immediate cost reductions.

Common mistakes to avoid include over-engineering initial solutions, optimizing without measuring baselines, and focusing solely on per-token costs while ignoring total system expenses. Many organizations waste time building complex optimization systems before understanding their actual usage patterns. Start with measurement, implement simple optimizations, then build sophisticated systems based on proven needs.

The most critical mistake involves treating optimization as a one-time project rather than an ongoing process. Usage patterns evolve, new features launch, and model capabilities improve. Organizations maintaining low costs long-term embed optimization thinking into their development culture. Every new feature considers API costs during design, not as an afterthought.

Conclusion and Next Steps

Mastering OpenAI GPT-4o API pricing requires more than understanding rate cards—it demands systematic approaches to optimization, continuous monitoring, and architectural flexibility. The strategies presented in this guide have helped organizations reduce API costs by 60-80% while often improving service quality through better model matching and smarter architectures.

The key optimization strategies worth immediate implementation include accurate token counting before API calls, intelligent routing between GPT-4o variants, aggressive caching of common responses, batch processing for non-urgent tasks, and continuous monitoring with proactive alerts. These five techniques alone typically deliver 50%+ cost reductions within weeks of implementation.

For developers and startups, begin with simple optimizations like token counting and model routing. Use tools like tiktoken and implement basic caching. Consider third-party providers like laozhang.ai for immediate 30-50% discounts while building optimization infrastructure. Focus on measuring baselines and iterating quickly rather than perfect initial implementations.

Growing companies should invest in systematic optimization infrastructure. Build intelligent routing systems, implement comprehensive monitoring, and establish team practices around cost-efficient AI usage. The investment in optimization engineering pays dividends as usage scales. Consider negotiating directly with OpenAI as monthly spending exceeds $10,000.

Enterprise organizations need comprehensive strategies encompassing technical optimization, vendor management, and organizational practices. Negotiate volume agreements while maintaining architectural flexibility. Implement sophisticated routing and caching systems. Establish cost attribution and chargeback mechanisms that incentivize efficient usage across teams.

The future of AI API pricing favors those who view costs as an optimization challenge rather than a fixed expense. As models improve and prices decline, organizations with flexible architectures and optimization cultures will capture maximum value. Whether you're processing hundreds or millions of requests, the principles remain consistent: measure systematically, optimize iteratively, and maintain architectural flexibility.

Your next steps depend on your current situation, but everyone benefits from starting with measurement. Implement token counting and cost tracking today. Analyze your usage patterns this week. Begin simple optimizations immediately. The path from current costs to 60-80% savings starts with the first optimization, and the techniques in this guide provide a proven roadmap for the journey.