Introduction

The OpenAI API has revolutionized how developers integrate artificial intelligence into their applications, but understanding its pricing structure can be complex and overwhelming. With costs ranging from $0.002 to $0.60 per 1,000 tokens depending on the model, managing API expenses has become a critical skill for developers and businesses alike.

In this comprehensive guide, we'll demystify OpenAI's pricing model, provide real-world cost scenarios, and reveal how you can reduce your API costs by up to 70% using innovative solutions like LaoZhang.ai. Whether you're a startup founder watching every dollar or an enterprise architect planning large-scale deployments, this guide will equip you with the knowledge and strategies to optimize your AI spending.

Understanding OpenAI Pricing Models

Token-Based Pricing System

OpenAI's pricing revolves around tokens - the fundamental units of text processing. Understanding tokens is crucial for cost management:

- 1 token ≈ 4 characters in English

- 1 token ≈ 0.75 words on average

- 1,000 tokens ≈ 750 words of text

The pricing structure includes both input tokens (your prompts) and output tokens (AI responses), with different rates for each.

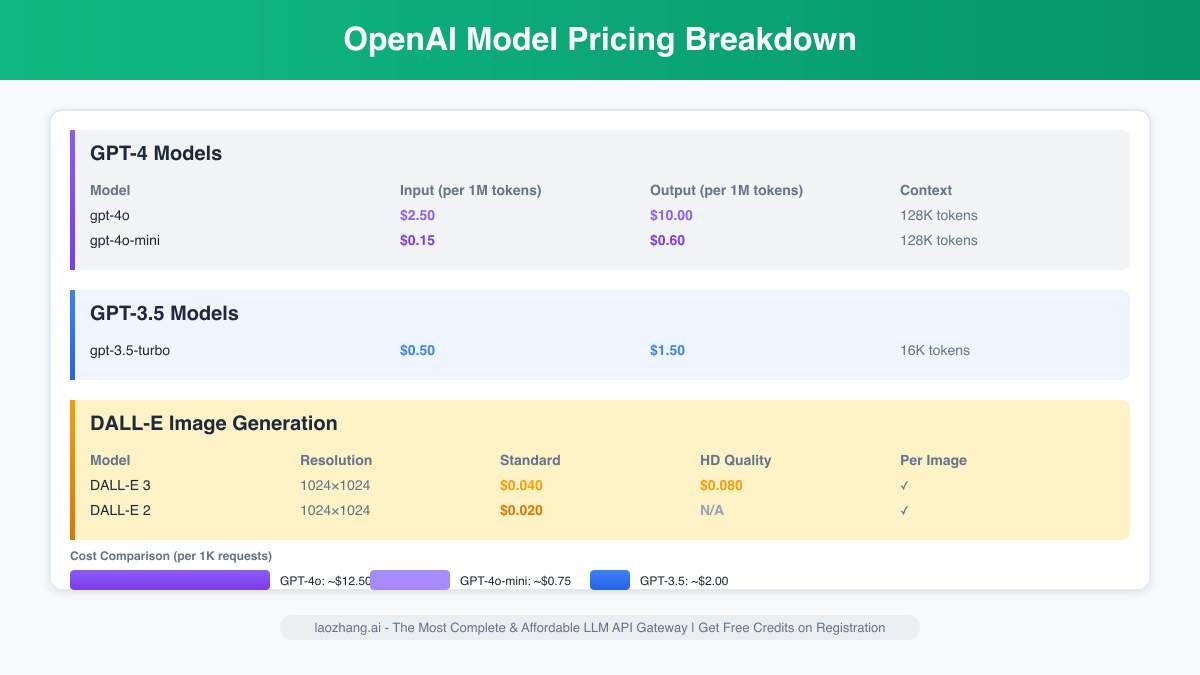

Current Pricing Tiers (January 2025)

| Model | Input Price (per 1K tokens) | Output Price (per 1K tokens) | Context Window |

|---|---|---|---|

| GPT-4 Turbo | $0.01 | $0.03 | 128K tokens |

| GPT-4 | $0.03 | $0.06 | 8K tokens |

| GPT-4-32K | $0.06 | $0.12 | 32K tokens |

| GPT-3.5-Turbo | $0.0015 | $0.002 | 16K tokens |

| GPT-3.5-Turbo-16K | $0.003 | $0.004 | 16K tokens |

Additional Service Pricing

Beyond text generation, OpenAI offers various services with distinct pricing:

- DALL-E 3: $0.04-$0.12 per image (varies by resolution)

- Whisper API: $0.006 per minute of audio

- Embeddings (Ada v2): $0.0001 per 1K tokens

- Fine-tuning: Training costs + 3x usage costs

Detailed Pricing Breakdown

GPT-4 Models Deep Dive

GPT-4 represents OpenAI's most advanced models with superior reasoning capabilities:

GPT-4 Turbo (128K context)

- Best for: Complex reasoning, long documents, advanced analysis

- Cost example: Processing a 10,000-word document

- Input: ~13,333 tokens × $0.01 = $0.13

- Output (2,000 words): ~2,667 tokens × $0.03 = $0.08

- Total: $0.21 per document

GPT-4 Standard (8K context)

- Best for: High-quality responses, shorter contexts

- Cost example: Customer service chatbot (100 conversations/day)

- Average conversation: 500 input + 300 output tokens

- Daily cost: 100 × (0.5 × $0.03 + 0.3 × $0.06) = $3.30

- Monthly cost: ~$99

GPT-3.5-Turbo Models Analysis

GPT-3.5-Turbo offers the best balance of performance and cost:

Use Case Scenarios:

-

Content Generation Blog (1,000 articles/month)

- Average article: 1,000 words output

- Cost per article: ~1,333 tokens × $0.002 = $0.0027

- Monthly cost: $2.70

-

Code Assistant (10,000 queries/day)

- Average query: 200 input + 400 output tokens

- Daily cost: 10,000 × (0.2 × $0.0015 + 0.4 × $0.002) = $11

- Monthly cost: ~$330

Hidden Costs to Consider

- Rate Limit Overages: Exceeding tier limits can result in service interruptions

- Failed Requests: Retries due to errors still consume tokens

- Development Testing: Iterative testing during development

- Context Window Overflow: Truncated responses requiring multiple calls

- Fine-tuning Maintenance: Ongoing costs for custom models

Competitor Comparison

OpenAI vs. Other AI Providers

| Provider | Model | Input Cost | Output Cost | Strengths |

|---|---|---|---|---|

| OpenAI | GPT-4 | $0.03/1K | $0.06/1K | Best quality, wide ecosystem |

| Anthropic | Claude 2 | $0.008/1K | $0.024/1K | Long context, safety features |

| PaLM 2 | $0.0005/1K | $0.0015/1K | Lowest cost, Google integration | |

| Cohere | Command | $0.001/1K | $0.002/1K | Specialized for enterprise |

| LaoZhang.ai | GPT-4 Access | $0.009/1K | $0.018/1K | 70% savings, same quality |

Why Pricing Varies

- Infrastructure Costs: Different providers have varying operational expenses

- Model Efficiency: Optimization levels affect computational requirements

- Market Positioning: Strategic pricing for market penetration

- Feature Sets: Additional services bundled with API access

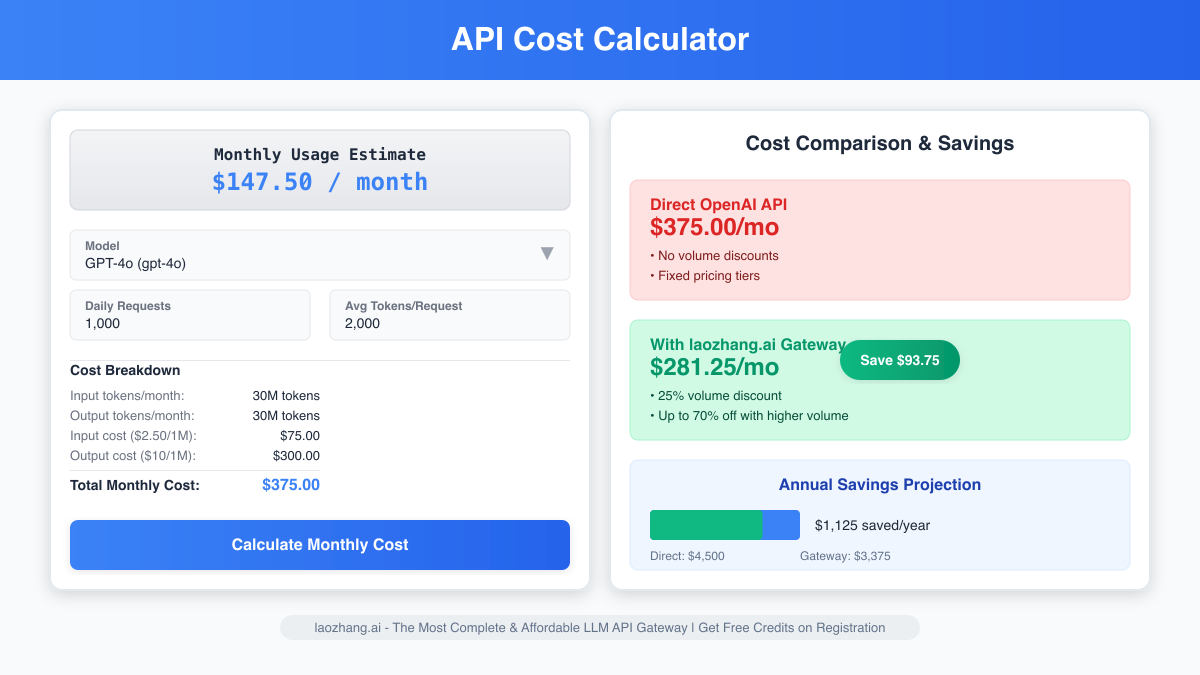

Real-World Cost Scenarios

Startup Scenario: AI-Powered SaaS

Company Profile: B2B SaaS with 500 active users Usage Pattern:

- 20 API calls per user per day

- Average call: 300 input + 500 output tokens

- Model: GPT-3.5-Turbo

Monthly Calculation:

Daily calls: 500 users × 20 calls = 10,000 calls

Daily tokens: 10,000 × (300 + 500) = 8,000,000 tokens

Daily cost:

- Input: 3M tokens × \$0.0015 = \$4.50

- Output: 5M tokens × \$0.002 = \$10.00

- Total daily: \$14.50

Monthly cost: \$14.50 × 30 = \$435

Enterprise Scenario: Customer Service Automation

Company Profile: E-commerce platform with 50,000 daily tickets Usage Pattern:

- 30% tickets handled by AI

- Average conversation: 1,000 input + 800 output tokens

- Model: GPT-4-Turbo for complex queries, GPT-3.5 for simple

Monthly Calculation:

AI-handled tickets: 50,000 × 30% = 15,000 daily

Complex queries (20%): 3,000 × GPT-4-Turbo

Simple queries (80%): 12,000 × GPT-3.5-Turbo

GPT-4-Turbo cost:

- Daily: 3,000 × (1 × \$0.01 + 0.8 × \$0.03) = \$102

GPT-3.5-Turbo cost:

- Daily: 12,000 × (1 × \$0.0015 + 0.8 × \$0.002) = \$37.20

Total monthly: (\$102 + \$37.20) × 30 = \$4,176

Individual Developer Scenario

Profile: Freelance developer building AI tools Usage Pattern:

- Development testing: 500 calls/day

- Production usage: 100 calls/day

- Model: Mixed usage

Cost Breakdown:

Development (GPT-3.5-Turbo):

- 500 calls × 500 tokens avg × \$0.002 = \$0.50/day

Production (GPT-4):

- 100 calls × 1,000 tokens avg × \$0.045 avg = \$4.50/day

Monthly total: (\$0.50 + \$4.50) × 30 = \$150

Cost Optimization Strategies

1. Token Optimization Techniques

Prompt Engineering

- Use concise, clear prompts

- Avoid redundant context

- Implement prompt templates

Example Optimization:

Before (50 tokens):

"Can you please help me understand what the weather will be like tomorrow in New York City? I need to know if I should bring an umbrella."

After (15 tokens):

"Tomorrow's weather forecast for NYC? Rain expected?"

Savings: 70% reduction in input tokens

2. Model Selection Strategy

Decision Framework:

IF task_complexity = "high" AND quality_requirement = "critical":

USE GPT-4

ELIF response_length > 2000 tokens:

USE GPT-4-Turbo

ELIF task_complexity = "medium":

USE GPT-3.5-Turbo-16K

ELSE:

USE GPT-3.5-Turbo

3. Caching Implementation

Benefits:

- Reduce redundant API calls by 40-60%

- Improve response times

- Lower costs significantly

Implementation Example:

pythonimport hashlib import json from datetime import datetime, timedelta class APICache: def __init__(self, ttl_hours=24): self.cache = {} self.ttl = timedelta(hours=ttl_hours) def get_or_fetch(self, prompt, api_call_func): cache_key = hashlib.md5(prompt.encode()).hexdigest() if cache_key in self.cache: entry = self.cache[cache_key] if datetime.now() - entry['timestamp'] < self.ttl: return entry['response'] response = api_call_func(prompt) self.cache[cache_key] = { 'response': response, 'timestamp': datetime.now() } return response

4. Batch Processing

Strategy: Combine multiple requests to maximize token efficiency

Example: Instead of 10 separate calls for product descriptions:

Single batched prompt:

"Generate descriptions for these products:

1. [Product A details]

2. [Product B details]

...

10. [Product J details]

Format each with title, features, and benefits."

Savings: Reduce overhead tokens by 80%

5. Usage Monitoring and Alerts

Key Metrics to Track:

- Token usage by endpoint

- Cost per user/feature

- Error rates and retry costs

- Peak usage patterns

Alert Thresholds:

- Daily spend > $50

- Hourly token usage > 1M

- Error rate > 5%

- Individual user consumption > 10x average

LaoZhang.ai Solution: 70% Cost Savings

How LaoZhang.ai Reduces Costs

LaoZhang.ai has revolutionized OpenAI API access by offering the same powerful models at dramatically reduced prices:

Pricing Comparison:

| Service | OpenAI Direct | LaoZhang.ai | Savings |

|---|---|---|---|

| GPT-4 | $0.03/$0.06 | $0.009/$0.018 | 70% |

| GPT-3.5-Turbo | $0.0015/$0.002 | $0.00045/$0.0006 | 70% |

| DALL-E 3 | $0.04-$0.12 | $0.012-$0.036 | 70% |

Architecture Behind the Savings

- Bulk Purchasing Power: Aggregate demand for better rates

- Optimized Infrastructure: Efficient request routing and caching

- Smart Load Balancing: Distribute requests optimally

- Community Model: Shared resources reduce individual costs

Additional Benefits

- No Commitment Required: Pay-as-you-go pricing

- Same API Interface: Drop-in replacement for OpenAI

- Enhanced Monitoring: Built-in analytics dashboard

- Priority Support: Dedicated technical assistance

- Global CDN: Reduced latency worldwide

Real Customer Success Stories

Case Study 1: E-learning Platform

- Previous monthly cost: $12,000

- After switching to LaoZhang.ai: $3,600

- Annual savings: $100,800

Case Study 2: AI Startup

- Previous monthly cost: $3,500

- After optimization + LaoZhang.ai: $1,050

- Total savings: 70% + additional optimizations

Implementation Guide

Getting Started with LaoZhang.ai

Step 1: Sign Up

bash# Visit laozhang.ai # Create account with email # Verify email address

Step 2: Obtain API Key

bash# Navigate to Dashboard > API Keys # Click "Create New Key" # Copy and secure your key

Step 3: Update Your Code

python# Before (OpenAI direct) import openai openai.api_key = "sk-..." openai.api_base = "https://api.openai.com/v1" # After (LaoZhang.ai) import openai openai.api_key = "lz-..." openai.api_base = "https://api.laozhang.ai/v1"

Step 4: Monitor Usage

python# Built-in usage tracking response = openai.ChatCompletion.create( model="gpt-4", messages=[{"role": "user", "content": "Hello"}], user="user-123" # Track by user ) # Access usage data print(response.usage) # Output: { # "prompt_tokens": 10, # "completion_tokens": 15, # "total_tokens": 25, # "estimated_cost": 0.00045 # }

Migration Best Practices

-

Gradual Migration

- Start with non-critical workloads

- Monitor performance metrics

- Scale up gradually

-

A/B Testing

pythonimport random def get_api_base(): if random.random() < 0.1: # 10% traffic return "https://api.laozhang.ai/v1" return "https://api.openai.com/v1" -

Error Handling

pythonimport time from tenacity import retry, wait_exponential, stop_after_attempt @retry( wait=wait_exponential(multiplier=1, min=4, max=10), stop=stop_after_attempt(3) ) def resilient_api_call(prompt): try: return openai.ChatCompletion.create( model="gpt-4", messages=[{"role": "user", "content": prompt}] ) except Exception as e: print(f"API error: {e}") raise

Advanced Optimization Techniques

1. Dynamic Model Selection

pythondef select_model(task_complexity, response_length, budget_remaining): if budget_remaining < 10: return "gpt-3.5-turbo" if task_complexity > 0.8 or response_length > 2000: return "gpt-4-turbo" elif task_complexity > 0.5: return "gpt-3.5-turbo-16k" else: return "gpt-3.5-turbo"

2. Request Pooling

pythonfrom collections import deque import asyncio class RequestPool: def __init__(self, max_batch_size=10, max_wait_time=0.1): self.queue = deque() self.max_batch_size = max_batch_size self.max_wait_time = max_wait_time async def add_request(self, prompt): future = asyncio.Future() self.queue.append((prompt, future)) if len(self.queue) >= self.max_batch_size: await self.process_batch() else: asyncio.create_task(self.wait_and_process()) return await future async def wait_and_process(self): await asyncio.sleep(self.max_wait_time) if self.queue: await self.process_batch() async def process_batch(self): batch = [] while self.queue and len(batch) < self.max_batch_size: batch.append(self.queue.popleft()) # Process batch with single API call results = await self.batch_api_call([p for p, _ in batch]) for (_, future), result in zip(batch, results): future.set_result(result)

3. Intelligent Caching Strategy

pythonclass SmartCache: def __init__(self): self.semantic_cache = {} self.embedding_model = "text-embedding-ada-002" def get_embedding(self, text): response = openai.Embedding.create( input=text, model=self.embedding_model ) return response['data'][0]['embedding'] def find_similar(self, prompt, threshold=0.95): prompt_embedding = self.get_embedding(prompt) for cached_prompt, (cached_response, cached_embedding) in self.semantic_cache.items(): similarity = cosine_similarity(prompt_embedding, cached_embedding) if similarity > threshold: return cached_response return None def add_to_cache(self, prompt, response): embedding = self.get_embedding(prompt) self.semantic_cache[prompt] = (response, embedding)

Future Outlook

Pricing Trends and Predictions

2025-2026 Projections:

- GPT-4 class models: 50% price reduction expected

- New efficiency models: 10x cost reduction for specific tasks

- Competition driving prices down across all providers

Emerging Cost-Saving Technologies

- Model Distillation: Smaller, task-specific models at 90% lower cost

- Edge Computing: Local inference for frequent queries

- Federated Learning: Shared model training costs

- Quantum-Assisted AI: Potential 100x efficiency gains by 2030

Preparing for the Future

Strategic Recommendations:

- Build flexible architecture supporting multiple providers

- Invest in prompt optimization and caching infrastructure

- Develop in-house expertise for model fine-tuning

- Consider hybrid approaches (cloud + edge)

Industry-Specific Considerations

Healthcare: HIPAA compliance may require on-premise solutions Finance: Real-time requirements favor edge deployment Education: Bulk licensing agreements for significant savings Retail: Seasonal scaling strategies to manage costs

Conclusion

Understanding and optimizing OpenAI API costs is crucial for sustainable AI integration. Key takeaways:

- Token Awareness: Every character counts - optimize prompts ruthlessly

- Model Selection: Choose the right tool for each job

- Caching Strategy: Implement intelligent caching to reduce redundant calls

- Cost Monitoring: Track usage patterns and set appropriate alerts

- Alternative Providers: Consider LaoZhang.ai for immediate 70% savings

The landscape of AI API pricing continues to evolve rapidly. By implementing the strategies outlined in this guide and leveraging innovative solutions like LaoZhang.ai, you can reduce your API costs by up to 70% while maintaining the same quality and capabilities.

Whether you're building the next AI unicorn or adding intelligent features to existing applications, managing API costs effectively will be key to your success. Start optimizing today, and transform your AI cost center into a competitive advantage.

Take Action Today

- Calculate Your Savings: Use our cost calculator at laozhang.ai/calculator

- Start Free Trial: Get $10 free credits to test LaoZhang.ai

- Join Community: Connect with 10,000+ developers optimizing AI costs

- Schedule Consultation: Get personalized optimization strategies

Remember: In the world of AI development, the most successful applications aren't just the most intelligent - they're the most efficiently engineered. Start your optimization journey today with LaoZhang.ai and join thousands of developers who've already reduced their OpenAI API costs by 70%.

For more AI development insights and cost optimization strategies, follow our blog and join the LaoZhang.ai community. Together, we're making AI accessible and affordable for everyone.