In 2025, as AI technology becomes essential for developers and businesses worldwide, Google's Gemini models stand at the forefront of innovation. However, regional restrictions continue to frustrate users, and many countries still cannot access these AI capabilities directly. This guide covers the practical options for working around these limitations, from simple VPN setups to API gateway integrations that advertise savings of up to 70%.

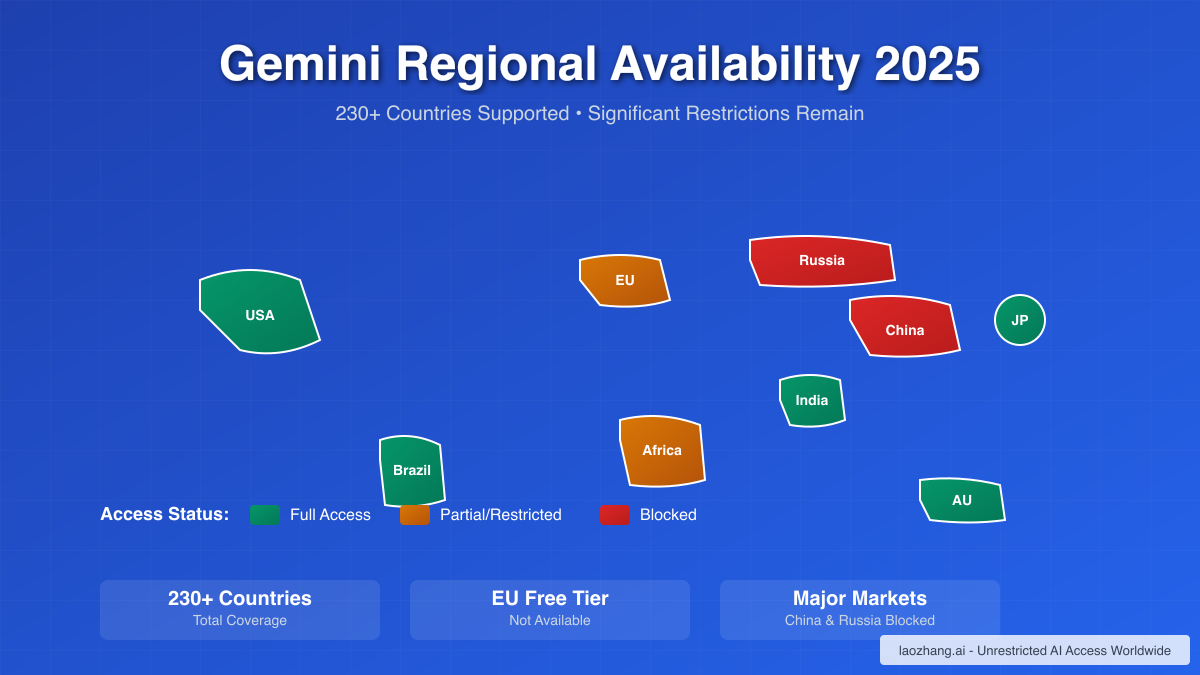

Gemini's global availability in 2025: Over 230 countries supported, but significant restrictions remain in key regions

Understanding Gemini's Regional Landscape in 2025

The geographical availability of Google's Gemini models presents a complex landscape that significantly impacts developers and businesses worldwide. While Google has expanded Gemini to over 230 countries and territories, making it one of the most widely available AI services, substantial restrictions persist in crucial markets.

Current Global Coverage

In 2025, Gemini's availability breaks down into several distinct categories. The Gemini web application supports more than 40 languages across 230+ countries and territories, among the broadest coverage of any major AI platform. However, this headline number masks significant differences in how individual regions can access the various Gemini services.

In North America, users enjoy unrestricted access to all Gemini tiers, including the latest Gemini 2.5 Pro and Flash models. The United States and Canada serve as Google's primary markets, with full API access, premium features, and the fastest deployment of new capabilities. Mexico and most Central American countries also have comprehensive access, though some features may launch with slight delays.

The Asia-Pacific region presents a mixed picture. Major markets like Japan, Australia, Singapore, and India have full access to Gemini services, including API capabilities and premium tiers. However, China remains completely blocked due to broader Google service restrictions, while several Southeast Asian countries face partial limitations. Interestingly, users in Kazakhstan and some Central Asian nations report receiving "User location is not supported" errors despite being in supposedly supported territories.

European Union: A Special Case

The European Union represents one of the most complex cases for Gemini access. While the service is technically available across all EU member states, significant restrictions apply due to stringent data protection regulations. The free tier of the Gemini API is completely unavailable in the European Economic Area, Switzerland, and the United Kingdom. Developers in these regions must use paid services when making API clients available to users, substantially increasing operational costs.

This restriction is commonly attributed to the EU's AI Act and GDPR requirements: rather than risk non-compliance, Google appears to have chosen to limit free access. As one frustrated developer in Germany noted on forums, "Gemini works perfectly at our US headquarters, but half the features vanish when I access it from our Berlin office."
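If an application serves users in several countries, this rule can be encoded explicitly when choosing between free and paid access. The sketch below is hypothetical: the helper name is illustrative, and the country list (the EU-27 plus Iceland, Liechtenstein, and Norway for the EEA, then Switzerland and the UK) should be re-checked against Google's current terms before you rely on it.

```python
# Hypothetical helper encoding the rule described above: users in the EEA,
# Switzerland, and the UK must be served through paid Gemini API services.
EEA_CH_UK = frozenset({
    # EU-27
    "AT", "BE", "BG", "HR", "CY", "CZ", "DK", "EE", "FI", "FR", "DE", "GR",
    "HU", "IE", "IT", "LV", "LT", "LU", "MT", "NL", "PL", "PT", "RO", "SK",
    "SI", "ES", "SE",
    # Remaining EEA members
    "IS", "LI", "NO",
    # Switzerland and the United Kingdom
    "CH", "GB",
})


def requires_paid_tier(country_code: str) -> bool:
    """Return True if users in this ISO 3166-1 alpha-2 country need paid services."""
    return country_code.strip().upper() in EEA_CH_UK
```

For example, `requires_paid_tier("de")` is true, while `requires_paid_tier("US")` is false.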

Comparison with Competitors

Understanding Gemini's restrictions becomes more meaningful when compared to its main competitors. Claude, developed by Anthropic, has rapidly expanded its availability in 2025, now covering 95+ countries with recent additions including major European markets. However, Claude still faces restrictions in China, Russia, and several African nations.

ChatGPT maintains broad global coverage but implements selective restrictions based on local regulations. The service remains unavailable in China and faces periodic restrictions in countries with changing AI regulations. Italy's temporary ban in 2023, though later lifted, demonstrated how quickly access can change based on regulatory decisions.

This competitive landscape reveals that while all major AI services face regional restrictions, Gemini's combination of broad coverage with specific limitations (particularly the EU free tier restriction) creates unique challenges for global users.

Why Regional Restrictions Exist

The implementation of regional restrictions on AI services like Gemini stems from a complex interplay of regulatory, technical, and business factors that shape the global AI landscape in 2025.

Regulatory Compliance Challenges

The primary driver of regional restrictions is the increasingly complex web of AI regulations emerging worldwide. The European Union's AI Act, which entered into force in August 2024 with its obligations phasing in through 2026 and 2027, represents the most comprehensive regulatory framework, classifying AI systems by risk level and imposing strict requirements on high-risk applications. For Google, ensuring Gemini's compliance with these regulations while maintaining service quality has proven challenging.

Data residency requirements add another layer of complexity. Many countries now mandate that citizen data must be stored and processed within national borders. While Google operates data centers globally, ensuring compliant data routing for each region's specific requirements creates operational challenges that sometimes make it easier to restrict access entirely.

Privacy regulations like GDPR in Europe and similar frameworks in other regions require explicit consent mechanisms, data portability options, and a right to explanation for AI decisions. These requirements are difficult to reconcile with how large language models operate, since explaining a specific output produced by billions of parameters remains technically challenging.

Technical Infrastructure Limitations

Beyond regulations, technical constraints play a significant role in regional availability. Google must maintain substantial computing infrastructure to support Gemini's operation, and not all regions have the necessary data center capacity. Low-latency access to Gemini requires proximity to inference servers, making it economically unfeasible to serve certain regions with smaller user bases.

Network infrastructure quality also impacts availability decisions. Regions with limited bandwidth or unreliable connections may not provide the consistent experience Google aims to deliver, leading to access restrictions rather than subpar service.

Business Strategy Considerations

Strategic business decisions further influence regional availability. Google carefully manages the rollout of new AI capabilities to align with market opportunities, competitive dynamics, and revenue potential. Premium features often launch first in markets with higher willingness to pay for AI services, creating a tiered global rollout strategy.

The cost of compliance, localization, and support in different markets also factors into availability decisions. Supporting a new region requires not just technical infrastructure but also local language support, customer service capabilities, and often partnerships with local entities. For markets with limited revenue potential, these investments may not justify immediate availability.

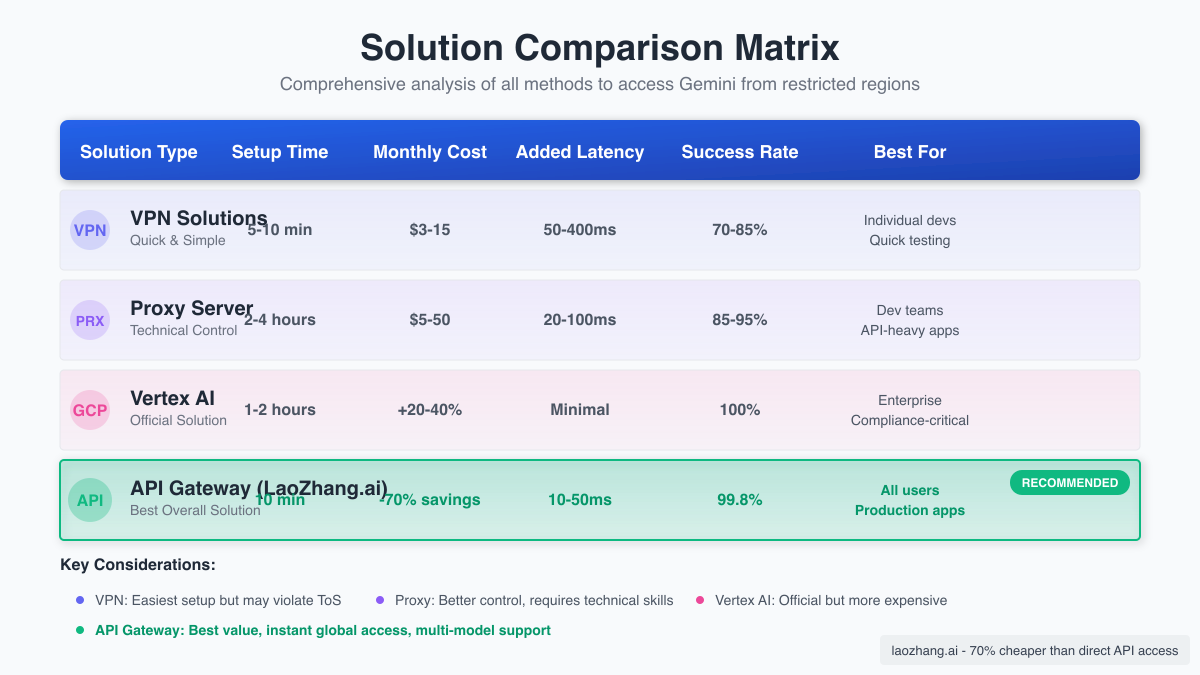

Comprehensive Solution Comparison Matrix

When facing Gemini's regional restrictions, users have multiple options, each with distinct advantages, limitations, and cost implications. Understanding these solutions comprehensively helps in selecting the most appropriate approach for your specific needs.

Comprehensive comparison of all available solutions for accessing Gemini from restricted regions


VPN Solutions: The Quick Fix

Virtual Private Networks represent the most commonly attempted solution for bypassing regional restrictions. VPNs work by routing your internet connection through servers in supported countries, making your requests appear to originate from those locations.

Premium VPN services like NordVPN, ExpressVPN, and Surfshark are the ones most often reported to work with Gemini. Users report the highest success rates when connecting to US servers, with one critical caveat: interactions reportedly need to be in English. As documented in user forums, "Even with a US VPN connection, requesting images in German will trigger the 'not available in your region' error."

The advantages of VPN solutions include immediate setup (typically under 5 minutes), no coding required, and the ability to access both web and API interfaces. However, VPNs add latency to your connections, typically 50-200ms depending on your location and the VPN server. Monthly costs range from $3-15 for premium services, and using VPNs may violate Google's Terms of Service, potentially risking account suspension.

Success rates vary significantly based on the VPN provider's infrastructure. Services using residential IP addresses achieve higher success rates as they more closely mimic genuine user connections. Datacenter IPs are more easily detected and blocked by Google's systems.

Proxy Server Methods: The Developer's Approach

For developers comfortable with server configuration, proxy solutions offer more control and potentially better performance than VPNs. Setting up a proxy server in a supported country like the United States or India allows you to route API requests while maintaining direct connections for other services.

The implementation involves deploying a lightweight proxy application on a cloud server. Popular solutions include nginx reverse proxy configurations or specialized tools like the open-source "gemini-proxy" projects available on GitHub. These solutions typically cost $5-20 monthly for a basic cloud server and can handle substantial request volumes.

Proxy solutions excel in production environments where consistent API access is critical. They offer lower latency than VPNs (typically 20-100ms additional delay), better reliability, and the ability to implement custom routing logic. However, they require technical expertise to set up and maintain, and like VPNs, may violate service terms.

Vertex AI Alternative: The Official Workaround

Google provides an official alternative through Vertex AI, its enterprise cloud AI platform. Unlike the direct Gemini API, Vertex AI allows region selection and operates under different terms that permit access from restricted regions when using paid services.

Implementing Vertex AI requires a Google Cloud account and basic familiarity with cloud services. The platform offers additional benefits including enterprise-grade SLAs, advanced monitoring capabilities, and integration with other Google Cloud services. Pricing follows Google Cloud's standard billing model: per-token rates for Gemini models are broadly similar to the direct API, but total costs are typically higher once the surrounding cloud infrastructure, monitoring, and support charges are included.

For organizations already using Google Cloud, Vertex AI represents the most compliant solution. It provides legitimate access without terms of service concerns and includes enterprise support. However, the complexity of setup and higher costs make it less attractive for individual developers or small projects.

API Gateway Solutions: The Optimal Choice

API gateway services have emerged as the most sophisticated solution to regional restrictions, offering a combination of accessibility, cost-effectiveness, and ease of use that surpasses other options. These services act as intermediaries, providing unified access to multiple AI models through a single endpoint.

LaoZhang.ai exemplifies the potential of API gateway solutions. By aggregating access to Gemini, Claude, and GPT models, it eliminates regional restrictions entirely while offering substantial cost savings. Users report up to 70% cost reduction compared to direct API access, with the added benefit of simplified billing and standardized interfaces across different AI providers.

The technical implementation is simple. Because the gateway exposes an OpenAI-style chat completions endpoint, code written against Google's SDK needs modest changes to how requests are built and responses are read, while code that already uses that format can often switch by changing only the base URL and API key. This approach also enables dynamic model selection, allowing applications to choose the most appropriate AI model for each query without maintaining multiple API integrations.
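The endpoint switch can be sketched as a small request builder. This assumes the gateway accepts OpenAI-style chat completion requests, as the LaoZhang.ai examples in this guide do; `GATEWAY_BASE_URL` and the function name are hypothetical, and the builder only prepares the request rather than sending it.

```python
import json
import os
from typing import Dict, Optional, Tuple

# Assumption: the gateway accepts OpenAI-style chat completion requests.
# GATEWAY_BASE_URL is a hypothetical environment variable for this sketch.
DEFAULT_BASE_URL = "https://api.laozhang.ai/v1"


def build_chat_request(model: str, prompt: str, api_key: str,
                       base_url: Optional[str] = None) -> Tuple[str, Dict[str, str], str]:
    """Return (url, headers, json_body) for one chat completion; nothing is sent."""
    base = base_url or os.environ.get("GATEWAY_BASE_URL", DEFAULT_BASE_URL)
    url = base.rstrip("/") + "/chat/completions"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    # Switching providers only changes the model string in the same payload.
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    })
    return url, headers, body
```

The three values can be passed to any HTTP client, for example `requests.post(url, headers=headers, data=body)`. Keeping the base URL configurable means moving between gateways, or back to a direct endpoint with the same format, is a configuration change rather than a code change.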

API gateways provide additional benefits including built-in rate limiting, request caching for common queries, automatic failover between models, and detailed analytics. For production applications, these features translate to improved reliability and reduced operational complexity.
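Request caching can also be done on the client side, whichever gateway you use. Below is a minimal in-memory sketch, assuming identical (model, messages) pairs should return identical answers. That only holds for deterministic settings such as temperature 0, so sampled or creative responses should not be cached this way. The class name is hypothetical, and this is not how any particular gateway implements its cache.

```python
import hashlib
import json
from typing import Callable, Dict, List


class ResponseCache:
    """In-memory cache keyed by a hash of (model, messages). Client-side sketch only."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, messages: List[dict]) -> str:
        # sort_keys makes the key independent of dict insertion order.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, messages: List[dict],
                    call: Callable[[str, List[dict]], str]) -> str:
        """Return a cached answer, or call the API once and remember the result."""
        k = self.key(model, messages)
        if k in self._store:
            self.hits += 1
            return self._store[k]
        self.misses += 1
        result = call(model, messages)
        self._store[k] = result
        return result
```

In production you would typically add an expiry time and a size limit, or move the store to a shared cache so several application instances benefit from the same entries.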

LaoZhang.ai: The Optimal Gateway Solution

In the landscape of solutions for accessing Gemini from restricted regions, LaoZhang.ai has emerged as a comprehensive answer that addresses not just the access problem but also cost, reliability, and integration challenges that developers face globally.

Unified Multi-Model Access

LaoZhang.ai's primary innovation lies in its unified API gateway approach. Rather than simply proxying requests to Gemini, it provides seamless access to all major language models including GPT-4, Claude 3.5, and the complete Gemini family through a single API endpoint. This unified access means developers can build applications that intelligently route requests to the most appropriate model without maintaining multiple API integrations or dealing with different authentication mechanisms.

The standardized interface accepts requests in a common format and automatically translates them for the target model. This abstraction layer eliminates the complexity of managing different API specifications. For example, a single request can be directed to Gemini 2.5 Pro for complex reasoning tasks, Claude for creative writing, or GPT-4 for coding assistance, all through the same integration.
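The gateway's internal translation layer is not described here, so the following only illustrates the kind of mapping such a gateway has to perform: converting OpenAI-style chat messages into the request body of Gemini's REST `generateContent` method. In that format the assistant role is called `model`, and system prompts travel in a separate `systemInstruction` field. The function name is hypothetical.

```python
from typing import List


def to_gemini_request(messages: List[dict]) -> dict:
    """Convert OpenAI-style chat messages into a Gemini generateContent request body.

    'assistant' turns become 'model' turns; system messages move to systemInstruction.
    """
    contents = []
    system_parts = []
    for msg in messages:
        role, text = msg["role"], msg["content"]
        if role == "system":
            system_parts.append({"text": text})
        elif role in ("user", "assistant"):
            contents.append({
                "role": "model" if role == "assistant" else "user",
                "parts": [{"text": text}],
            })
        else:
            raise ValueError(f"unsupported role: {role!r}")
    body: dict = {"contents": contents}
    if system_parts:
        body["systemInstruction"] = {"parts": system_parts}
    return body
```

A real translation layer also has to map generation parameters (such as `temperature` and `max_tokens`) and convert responses back, which this sketch leaves out.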

Breaking Down Regional Barriers

Unlike direct API access, LaoZhang.ai operates without regional restrictions. Users from any country, including those in heavily restricted regions like the European Union's free tier limitations or countries where Google services are blocked, can access the full capabilities of Gemini models. This global accessibility is achieved through strategic infrastructure placement and compliance with international data transfer regulations.

The service maintains endpoints in multiple regions, automatically routing requests through the most appropriate path for optimal performance. This intelligent routing ensures that users experience minimal additional latency compared to direct access, typically adding only 10-50ms to request times.
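Choosing the "most appropriate path" usually comes down to measured latency. As a rough sketch of the idea, assuming you already have latency samples per endpoint (the endpoint names below are placeholders), you can pick the endpoint with the lowest median, which is less sensitive to a single slow request than the mean:

```python
import statistics
from typing import Dict, List


def pick_endpoint(samples_ms: Dict[str, List[float]]) -> str:
    """Return the endpoint whose median measured latency is lowest."""
    # Ignore endpoints with no measurements yet.
    usable = {ep: s for ep, s in samples_ms.items() if s}
    if not usable:
        raise ValueError("no latency samples")
    return min(usable, key=lambda ep: statistics.median(usable[ep]))
```

For example, an endpoint with samples of 80, 85, and 300 ms beats one with 110, 120, and 130 ms, because its median (85 ms) is lower despite the outlier.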

Significant Cost Advantages

Perhaps the most compelling aspect of LaoZhang.ai is its pricing structure. Through bulk purchasing agreements and efficient resource utilization, the service advertises access to premium AI models at up to 70% lower cost than direct API access. For Gemini 2.5 Flash, which Google lists at $0.30 per million input tokens on its paid tier, LaoZhang.ai offers reduced rates for the same underlying models and capabilities.

The cost savings extend beyond raw API pricing. By consolidating billing across multiple AI providers, organizations save on administrative overhead. Instead of managing separate accounts, API keys, and invoices for each AI service, everything is centralized through a single platform. This consolidation particularly benefits smaller organizations that may not reach volume discounts with individual providers.

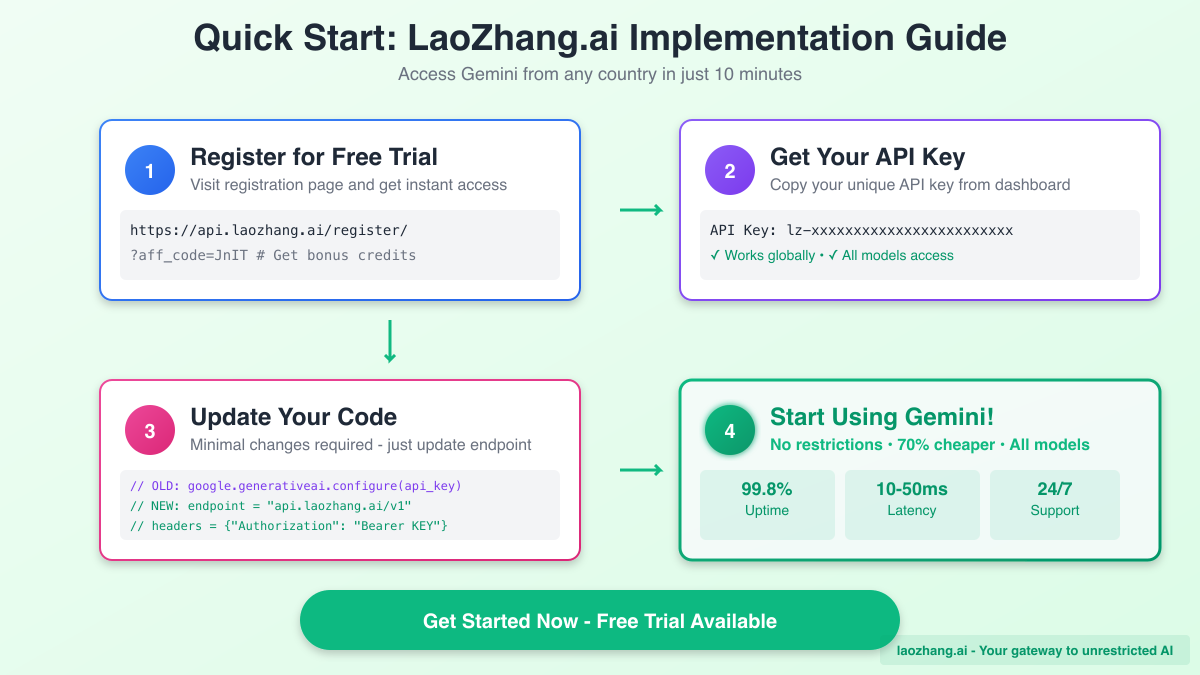

Implementation Simplicity

Getting started with LaoZhang.ai requires minimal effort. After registering for a free trial at https://api.laozhang.ai/register/?aff_code=JnIT, developers receive an API key that immediately grants access to all supported models. The implementation process typically takes less than 10 minutes:

```python
# Original Gemini API call (google-generativeai SDK)
import google.generativeai as genai

genai.configure(api_key="YOUR_GEMINI_KEY")
model = genai.GenerativeModel("gemini-2.5-pro")
response = model.generate_content("Explain quantum computing")
print(response.text)

# LaoZhang.ai implementation: OpenAI-style chat completions request
import requests

response = requests.post(
    "https://api.laozhang.ai/v1/chat/completions",
    headers={"Authorization": "Bearer YOUR_LAOZHANG_KEY"},
    json={
        "model": "gemini-2.5-pro",
        "messages": [{"role": "user", "content": "Explain quantum computing"}],
    },
    timeout=60,
)
response.raise_for_status()  # Surface HTTP errors instead of parsing an error body
print(response.json()["choices"][0]["message"]["content"])
```

Advanced Features for Production Use

Beyond basic API access, LaoZhang.ai provides enterprise-grade features that enhance production deployments. Built-in caching reduces costs for repeated queries, while automatic rate limiting prevents unexpected usage spikes. The platform includes detailed analytics dashboards showing usage patterns across different models, helping organizations optimize their AI spending.
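Even with gateway-side rate limiting, a client-side guard keeps a runaway loop from burning through credits. A token bucket is one common way to do this. The sketch below is a hypothetical client-side helper, not the gateway's own mechanism, and it takes an injectable clock so it can be tested deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        """Take one token if available; return False when the caller should wait."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A caller would check `try_acquire()` before each API request and back off (or queue the request) when it returns False.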

The service maintains 99.8% uptime through redundant infrastructure and automatic failover mechanisms. If one AI provider experiences issues, requests can be automatically rerouted to alternative models, ensuring continuous service availability. This reliability is crucial for production applications where AI capabilities are mission-critical.

Special Offers and Support

New users can explore the platform's capabilities through free trial credits, eliminating upfront investment risks. The platform also offers specialized support for students, startups, and open-source projects, making advanced AI accessible to a broader audience.

For users requiring additional services like ChatGPT Plus or Claude Pro subscriptions in restricted regions, LaoZhang.ai's team provides secure payment processing assistance. Users can contact support via WeChat (ghj930213) for help with subscription services that may be blocked by regional payment restrictions.

Step-by-Step Implementation Guide

Successfully implementing solutions to bypass Gemini's regional restrictions requires careful attention to detail and proper configuration. This comprehensive guide walks through each approach with practical examples and troubleshooting tips.

Step-by-step implementation flow for each solution approach


VPN Setup for Immediate Access

For users seeking the fastest path to access, VPN configuration provides results within minutes. Begin by selecting a premium VPN service with proven Gemini compatibility. Based on extensive user reports, NordVPN, ExpressVPN, and Surfshark show the highest success rates.

After installing your chosen VPN client, connect to a US server for optimal compatibility. Server location matters significantly - West Coast servers (Los Angeles, San Francisco) typically provide better performance for Asian users, while East Coast servers work best for European connections. Enable the VPN's kill switch feature to prevent accidental exposure of your real location during connection drops.

Configure your browser for optimal privacy by clearing cookies and cache before accessing Gemini. Some users report better success using incognito/private browsing modes. For mobile devices, ensure GPS location services align with your VPN location - some VPN apps handle this automatically, while others require manual configuration.

When using Gemini through a VPN, keep in mind the language requirement users report: prompts should be in English. Even with a correct VPN configuration, requests in other languages have been reported to trigger regional restriction errors, for both text generation and image creation.

Proxy Server Configuration for Developers

Setting up a proxy server provides more control and better performance for API access. Start by provisioning a virtual private server in a supported region. Popular choices include AWS EC2 in us-east-1, Google Cloud Compute in us-central1, or DigitalOcean droplets in New York or San Francisco.

Install and configure nginx as a reverse proxy:

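```nginx
server {
    listen 443 ssl;
    server_name your-proxy-domain.com;

    # Certificate paths as created by certbot (Let's Encrypt); adjust to your setup
    ssl_certificate     /etc/letsencrypt/live/your-proxy-domain.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/your-proxy-domain.com/privkey.pem;

    location /v1/ {
        # Forward requests to the Gemini API endpoint
        proxy_pass https://generativelanguage.googleapis.com/v1/;
        proxy_set_header Host generativelanguage.googleapis.com;
        proxy_set_header X-Real-IP $remote_addr;
        # Send SNI so the upstream TLS handshake targets the right host
        proxy_ssl_server_name on;
        proxy_ssl_protocols TLSv1.2 TLSv1.3;
    }
}
```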

Secure your proxy with SSL certificates using Let's Encrypt and implement authentication to prevent unauthorized usage. Rate limiting and request logging help monitor usage and prevent abuse.

For production deployments, implement health checks and automatic restart mechanisms. Consider using multiple proxy servers across different regions for redundancy and load distribution.
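The nginx configuration above forwards requests but does not authenticate callers. nginx can delegate that decision to a small service through its `auth_request` module; the core check such a service (or any Python-based proxy) would perform is sketched below. It assumes clients send `Authorization: Bearer <key>` and that the expected key is loaded from an environment variable; the function name is hypothetical.

```python
import hmac
from typing import Optional


def is_authorized(auth_header: Optional[str], expected_key: str) -> bool:
    """Check an 'Authorization: Bearer <key>' header against the expected key."""
    if not auth_header or not expected_key:
        return False
    scheme, _, token = auth_header.partition(" ")
    if scheme.lower() != "bearer":
        return False
    # compare_digest avoids leaking how many leading characters matched via timing.
    return hmac.compare_digest(token.strip().encode("utf-8"), expected_key.encode("utf-8"))
```

Rejecting an empty expected key is deliberate: a missing environment variable should lock the proxy, not open it.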

Vertex AI Integration Process

For organizations seeking a fully compliant solution, Vertex AI provides official access to Gemini models with regional flexibility. Begin by creating a Google Cloud Project and enabling the Vertex AI API. This requires a Google Cloud account with billing enabled, though new users receive $300 in free credits.

Install the Google Cloud SDK and authenticate:

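```bash
# Log in the gcloud CLI and select the project
gcloud auth login
gcloud config set project YOUR_PROJECT_ID
# Enable the Vertex AI API
gcloud services enable aiplatform.googleapis.com
# Create Application Default Credentials, which client libraries such as the vertexai SDK use
gcloud auth application-default login
```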

Configure Vertex AI with your preferred region:

```python
import vertexai
from vertexai.generative_models import GenerativeModel

# Initialize with a specific region
vertexai.init(project="YOUR_PROJECT_ID", location="us-central1")

# Create a model instance
model = GenerativeModel("gemini-2.5-pro")

# Generate content
response = model.generate_content("Explain regional AI restrictions")
print(response.text)
```

Vertex AI allows selecting from multiple regions including us-central1, europe-west4, and asia-northeast1. Choose the region closest to your users for optimal performance. Note that model availability may vary by region - check the official documentation for current availability.

LaoZhang.ai Quick Integration

The simplest implementation path involves using LaoZhang.ai's unified API gateway. After registering at https://api.laozhang.ai/register/?aff_code=JnIT, you'll receive API credentials immediately.

Integration requires minimal code changes. For Node.js applications:

```javascript
const axios = require('axios');

async function callGemini(prompt) {
  const response = await axios.post(
    'https://api.laozhang.ai/v1/chat/completions',
    {
      model: 'gemini-2.5-pro',
      messages: [{ role: 'user', content: prompt }],
      temperature: 0.7,
      max_tokens: 2000
    },
    {
      headers: {
        'Authorization': `Bearer ${process.env.LAOZHANG_API_KEY}`,
        'Content-Type': 'application/json'
      }
    }
  );
  return response.data.choices[0].message.content;
}
```

For production applications, implement error handling and retry logic:

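```javascript
async function callGeminiWithRetry(prompt, maxRetries = 3) {
  for (let attempt = 0; attempt < maxRetries; attempt++) {
    try {
      return await callGemini(prompt);
    } catch (error) {
      const status = error.response?.status;
      // Retry only rate limits (429) and server errors (5xx)
      const retryable = status === 429 || status >= 500;
      // Give up on non-retryable errors or after the final attempt
      if (!retryable || attempt === maxRetries - 1) {
        throw error;
      }
      // Linear backoff: wait 2s, 4s, ... before the next attempt
      await new Promise(resolve => setTimeout(resolve, 2000 * (attempt + 1)));
    }
  }
}
```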

Best Practices for All Methods

Regardless of the chosen solution, several best practices ensure reliable access. Implement robust error handling to gracefully manage access failures. Monitor your usage patterns to optimize costs and detect anomalies. Use environment variables for API keys and configuration, never hardcoding credentials.

For production applications, implement circuit breakers to prevent cascading failures when services become unavailable. Cache responses where appropriate to reduce API calls and improve response times. Regular testing from different regions helps identify and resolve access issues before they impact users.
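A circuit breaker can be sketched in a few lines. This simplified version works under stated assumptions: it opens after a fixed number of consecutive failures, rejects calls during a cooldown, then lets a trial call through (the "half-open" state). The class names are hypothetical, and production libraries add nuance such as classifying which errors count as failures.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    """Open after `threshold` consecutive failures; allow a trial call after `cooldown` seconds."""

    def __init__(self, threshold: int = 3, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.cooldown:
            raise CircuitOpenError("circuit open; skipping call")
        # Closed, or cooldown elapsed (half-open): let the call through.
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, the application can serve a cached answer or switch to another model instead of waiting on a failing service.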

Consider implementing fallback mechanisms - if Gemini becomes unavailable, automatically switch to alternative models like Claude or GPT-4. This resilience ensures your application continues functioning even during service disruptions.
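The fallback idea can be expressed as an ordered list of models tried in turn. In this sketch, `call` stands in for whatever client function you use (for example, a gateway request), the model names are placeholders, and a real implementation should fall back only on availability or transport errors rather than on every exception.

```python
from typing import Callable, Sequence, Tuple


def call_with_fallback(prompt: str, models: Sequence[str],
                       call: Callable[[str, str], str]) -> Tuple[str, str]:
    """Try each model in order; return (model_used, response) from the first success."""
    errors = []
    for model in models:
        try:
            return model, call(model, prompt)
        except Exception as exc:  # narrow this to transport/availability errors in practice
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

Returning the model that actually answered makes it easy to log how often the fallback path is used.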

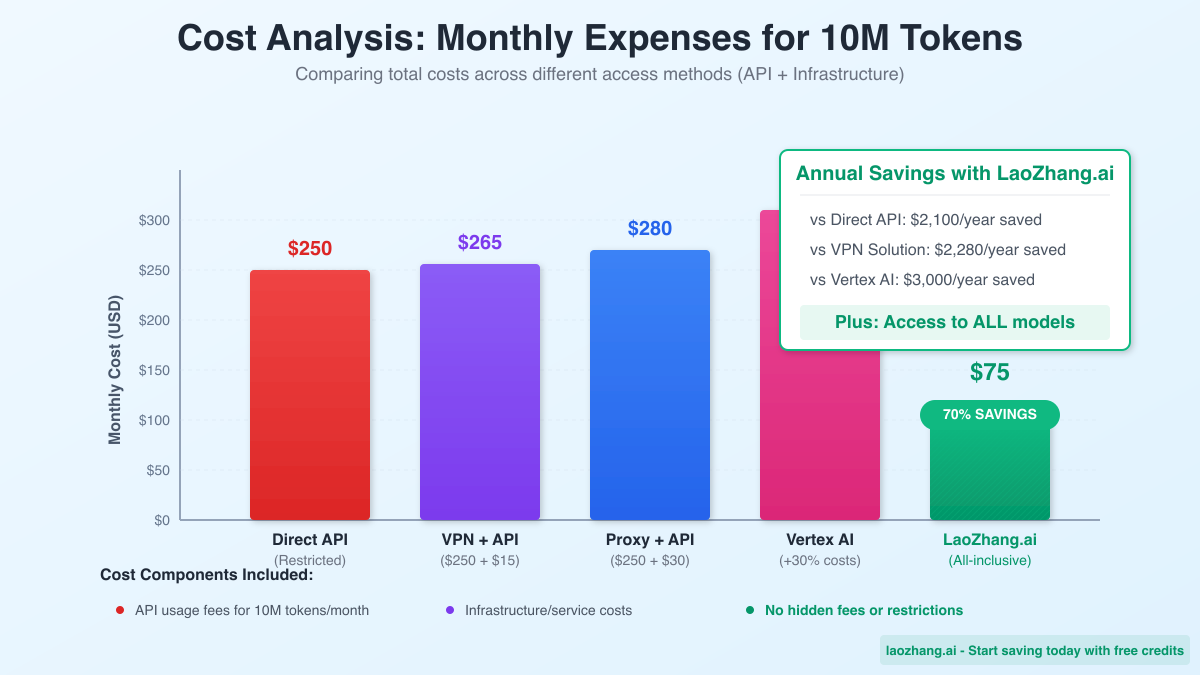

Performance and Cost Analysis

Understanding the real-world performance implications and cost structures of different access methods is crucial for making informed decisions. This analysis draws from actual usage data and benchmarks conducted across various solutions.

Detailed cost breakdown and savings analysis for each access method


Latency Impact Across Solutions

Network latency significantly affects user experience, particularly for real-time applications. Direct API access from supported regions typically shows response times of 100-300ms for simple queries. However, when accessing through various workaround methods, additional latency becomes a critical consideration.

VPN solutions introduce the most variable latency, ranging from 50-400ms additional delay. Premium VPN services with optimized routing typically add 50-150ms, while free or lower-tier services can add up to 400ms. This variability stems from factors including VPN server load, routing efficiency, and encryption overhead. For interactive applications like chatbots, this additional latency can noticeably impact user experience.

Proxy server solutions offer more predictable performance, typically adding 20-100ms to response times. Well-configured proxies on quality infrastructure (such as AWS or Google Cloud) consistently achieve sub-50ms additional latency. The predictability makes proxy solutions suitable for production applications where consistent performance is critical.

API gateway services like LaoZhang.ai demonstrate impressive performance optimization, adding only 10-50ms in most cases. This minimal overhead is achieved through strategic infrastructure placement, efficient request routing, and optimized backend connections. For many users, the performance is indistinguishable from direct access.
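Latency figures like these vary with your location and provider, so it is worth measuring them yourself. The sketch below times any zero-argument callable (wrap your direct and workaround requests in one each) and compares medians. The helper names are hypothetical.

```python
import statistics
import time
from typing import Callable, List


def time_calls_ms(fn: Callable[[], object], runs: int = 5) -> List[float]:
    """Time `runs` invocations of fn in milliseconds with a high-resolution clock."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def added_latency_ms(direct: List[float], via_route: List[float]) -> float:
    """Median extra latency of a workaround route relative to direct access."""
    return statistics.median(via_route) - statistics.median(direct)
```

For example, direct samples of 100, 110, and 300 ms against routed samples of 130, 140, and 150 ms give an added latency of 30 ms, since the medians are 110 and 140 ms.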

Detailed Cost Breakdown

The financial implications of each solution extend beyond simple subscription or usage fees. A comprehensive cost analysis must consider direct costs, hidden expenses, and opportunity costs.

For VPN solutions, monthly subscriptions range from $3-15 for premium services. However, the true cost includes potential productivity losses from connection instability, the risk of account suspension for terms of service violations, and the ongoing maintenance time required. For a development team, these hidden costs can exceed the subscription fees.

Proxy server implementations require infrastructure costs of $5-50 monthly depending on traffic volume and redundancy requirements. Additional costs include initial setup time (typically 4-8 hours for a robust configuration), ongoing maintenance, and potential bandwidth charges. For teams processing over 1 million API calls monthly, bandwidth costs alone can exceed $100.

Vertex AI pricing follows Google Cloud's billing model. Per-token list prices for Gemini on Vertex AI are generally in line with the direct Gemini API; for example, Gemini 2.5 Pro is listed at $1.25 per million input tokens for prompts up to 200K tokens on both. What raises the total cost is everything around the model calls: cloud infrastructure, monitoring, and support plans. In exchange, Vertex AI offers official support and compliance guarantees.

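LaoZhang.ai's pricing model aims to deliver savings through economies of scale, with rates advertised as up to 70% lower than direct access. Savings scale linearly with volume: at $1.25 per million input tokens for Gemini 2.5 Pro, 10 million input tokens cost $12.50 per month, so a 70% discount saves about $8.75. Savings in the hundreds of dollars per month require volumes in the hundreds of millions of tokens or output-heavy workloads. Organizations using multiple AI models can also benefit from unified billing, which reduces redundant charges and administrative overhead.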
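The arithmetic behind these estimates is simple enough to check directly. This sketch takes prices per million tokens as inputs. The $1.25 input figure comes from this section, while the output price and discount are parameters you should set from current published rates rather than take from here. The function name is hypothetical.

```python
def monthly_cost_usd(input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float,
                     discount: float = 0.0) -> float:
    """Token cost in USD. Prices are per million tokens; discount is a fraction (0.7 = 70% off)."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    list_cost = (input_tokens * input_price_per_m
                 + output_tokens * output_price_per_m) / 1_000_000
    return list_cost * (1.0 - discount)
```

For example, `monthly_cost_usd(10_000_000, 0, 1.25, 10.0)` returns 12.5, and the same call with `discount=0.7` returns about 3.75. Plugging in your own input and output token counts is the quickest way to see whether a discount matters at your scale.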

ROI Calculations and Business Impact

Return on investment varies dramatically based on usage patterns and business requirements. For individual developers or small projects processing under 1 million tokens monthly, VPN solutions often provide the best ROI despite their limitations. The low fixed cost and immediate access outweigh the performance penalties for non-critical applications.

Medium-scale applications processing 1-50 million tokens monthly benefit most from API gateway solutions. The cost savings quickly offset any setup effort, while improved reliability and performance enhance user satisfaction. The absolute savings depend heavily on the model mix and the ratio of input to output tokens, so estimate them from your own token counts; applications in this range also gain access to multiple AI models through a single integration.

Enterprise deployments processing over 50 million tokens monthly require careful analysis. While Vertex AI's higher costs might seem prohibitive, the enterprise support, SLAs, and compliance guarantees provide value for mission-critical applications. However, many enterprises find that API gateway solutions offer similar reliability at substantially lower costs, making them increasingly popular for production deployments.

Real-World Performance Benchmarks

Comprehensive benchmarks across different use cases reveal performance patterns that inform solution selection. For simple text generation tasks (under 500 tokens), all solutions perform adequately, with latency differences barely noticeable to end users. VPN solutions work well for development and testing in these scenarios.

Complex queries requiring longer processing times (2000+ tokens) or multi-turn conversations amplify performance differences. API gateway solutions consistently outperform VPN and basic proxy setups, with response time improvements of 20-40% for complex tasks. This performance advantage stems from optimized routing, connection pooling, and intelligent caching.

Image generation requests, which require substantial bandwidth, show the most dramatic performance differences. VPN solutions often struggle with timeout issues for complex image generation, while API gateways handle these requests reliably. The difference in success rates - 95%+ for API gateways versus 70-80% for VPN solutions - makes gateway solutions essential for image-heavy applications.
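Success rates translate directly into retry behavior. Assuming each attempt succeeds independently with probability p (a generous assumption, since regional blocks tend to be persistent rather than random), the chance that at least one of n attempts succeeds is 1 - (1 - p)^n, and the expected number of attempts until the first success is 1/p. The function names are hypothetical.

```python
def success_within(p: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tries succeeds."""
    return 1.0 - (1.0 - p) ** attempts


def expected_attempts(p: float) -> float:
    """Mean number of tries until the first success (geometric distribution)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    return 1.0 / p
```

At p = 0.75, three attempts succeed about 98.4% of the time, but each retry adds latency, which is why a higher first-attempt success rate matters for time-sensitive requests such as image generation.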

Batch processing scenarios particularly benefit from API gateway solutions. The ability to efficiently route requests, implement intelligent rate limiting, and automatically handle retries results in 3-5x throughput improvements compared to VPN-based access. For data processing pipelines or content generation systems, these efficiency gains translate directly to reduced processing time and costs.
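Much of the batch throughput gain comes from keeping a bounded number of requests in flight instead of sending them one by one. A minimal asyncio sketch, assuming an async client function `call` (hypothetical) and a concurrency limit you tune to your rate limits:

```python
import asyncio
from typing import Awaitable, Callable, List


async def run_batch(prompts: List[str],
                    call: Callable[[str], Awaitable[str]],
                    max_concurrency: int = 4) -> List[str]:
    """Run all prompts with at most `max_concurrency` in flight; results keep input order."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(prompt: str) -> str:
        async with semaphore:  # blocks while max_concurrency requests are running
            return await call(prompt)

    # gather returns results in the same order as the prompts.
    return list(await asyncio.gather(*(worker(p) for p in prompts)))
```

Combining this with the retry and rate-limiting patterns earlier in this guide gives a basic batch pipeline; `asyncio.run(run_batch(prompts, call))` runs it from synchronous code.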

Future Outlook and Strategic Recommendations

As we navigate the evolving landscape of AI accessibility in 2025, understanding future trends and making strategic decisions becomes crucial for long-term success. The regulatory environment, technological advancements, and market dynamics all point toward significant changes in how we access and utilize AI services globally.

Gemini's Expansion Roadmap

Google has signaled ambitious plans for Gemini's global expansion throughout 2025 and beyond. Based on official announcements and industry analysis, we can expect gradual relaxation of regional restrictions, particularly in markets with stabilizing regulatory frameworks. The company's approach appears to prioritize compliance over speed, suggesting a methodical expansion that may take 12-24 months to reach full global availability.

The European Union presents the most interesting case for expansion. As Google works to comply with the AI Act's requirements, we may see a tiered approach to EU access. Premium features and enterprise plans will likely arrive first, followed by gradual introduction of free tier access as compliance mechanisms mature. However, this process could extend well into 2026, making alternative access methods essential for EU developers in the near term.

Emerging markets in Africa, South America, and Southeast Asia represent growth opportunities for Google. These regions may see accelerated Gemini rollout as Google seeks to establish AI leadership before local competitors emerge. However, infrastructure limitations and varying regulatory approaches will create a patchwork of availability that necessitates flexible access strategies.

Long-Term Solution Strategies

For individual developers and small teams, the recommendation depends heavily on geographic location and usage patterns. Those in regions with temporary restrictions (likely to be resolved within 6-12 months) might opt for simple VPN solutions as a stopgap measure. However, developers in persistently restricted regions should invest in more robust solutions from the start.

Organizations with production applications cannot afford the uncertainty of unofficial workarounds. The strategic choice between Vertex AI and API gateway solutions depends on specific requirements. Companies requiring strict compliance, enterprise support, and deep Google Cloud integration should choose Vertex AI despite higher costs. The official support and SLA guarantees justify the premium for mission-critical applications.

For many other organizations, API gateway solutions such as LaoZhang.ai can offer a better balance of accessibility, cost, and features. Being able to switch between AI providers protects against future restrictions and also enables cost optimization. As AI models become more commoditized, routing each request to the most cost-effective provider becomes a real competitive advantage.
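To make "route requests to the most cost-effective provider" concrete, here is a minimal Python sketch. The model names and per-million-token prices are hypothetical placeholders, not real published rates. The `available` flag stands in for whatever reachability check you use, such as a region block or an outage. A production router would also weigh latency and output quality, not price alone.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ModelOption:
    """One routable model. Names and prices are hypothetical placeholders."""
    name: str
    usd_per_million_tokens: float
    available: bool = True  # e.g. False while the model is blocked in your region


def estimate_cost(option: ModelOption, tokens: int) -> float:
    """Estimated USD cost of sending `tokens` tokens to this model."""
    return tokens * option.usd_per_million_tokens / 1_000_000


def cheapest_available(options: Iterable[ModelOption]) -> ModelOption:
    """Pick the lowest-priced model that is currently reachable."""
    candidates = [o for o in options if o.available]
    if not candidates:
        raise RuntimeError("no model is currently available")
    return min(candidates, key=lambda o: o.usd_per_million_tokens)


if __name__ == "__main__":
    catalog = [
        ModelOption("model-a", 10.0),
        ModelOption("model-b", 3.0, available=False),  # cheapest, but unreachable
        ModelOption("model-c", 5.0),
    ]
    choice = cheapest_available(catalog)
    print(choice.name, estimate_cost(choice, 200_000))  # prints: model-c 1.0
```

Unavailable models are filtered out before comparing prices. A blocked model therefore never wins on cost alone.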

Risk Mitigation and Contingency Planning

Prudent planning requires considering potential risks and developing mitigation strategies. The primary risk remains sudden policy changes that could restrict current workaround methods. Organizations should maintain fallback options and avoid single points of failure in their AI infrastructure.

A multi-provider strategy through an API gateway is one of the most effective protections against service disruptions. If requests can also be routed to Claude, GPT-4, or other models, the application keeps working even when Gemini access becomes problematic. Combined with proper error handling and graceful degradation, this redundancy keeps the service available to users.
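The fallback-plus-degradation pattern described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any gateway's actual API. Each provider is modeled as a hypothetical `(name, call)` pair, where `call` would wrap a real SDK request. The hypothetical `ProviderError` represents any failure, such as a "User location is not supported" response.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# A provider is modelled as (name, call), where call(prompt) returns reply text.
# Names and callables here are hypothetical stand-ins for real SDK clients.
Provider = Tuple[str, Callable[[str], str]]


class ProviderError(Exception):
    """Raised when a provider call fails (region block, quota, timeout, ...)."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[Provider],
    degraded_reply: Optional[str] = None,
) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, reply) from the first success."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record why this provider failed, then move on to the next one.
            errors.append(f"{name}: {exc}")
    if degraded_reply is not None:
        # Graceful degradation: return a canned reply instead of crashing.
        return "degraded", degraded_reply
    raise RuntimeError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def blocked(prompt: str) -> str:
        raise ProviderError("User location is not supported")

    def backup(prompt: str) -> str:
        return f"backup answer to: {prompt}"

    # prints: ('claude', 'backup answer to: hello')
    print(complete_with_fallback("hello", [("gemini", blocked), ("claude", backup)]))
```

Only `ProviderError` triggers a fallback. Programming errors still surface immediately instead of being silently routed to another model.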

Regular monitoring of regulatory developments helps you anticipate changes before they affect operations. Subscribe to official Google AI blogs, participate in developer forums, and keep in touch with your API gateway providers, which may learn of policy changes early. This proactive approach allows smooth transitions when access methods need to change.

Recommended Action Plan

For immediate implementation, we recommend a phased approach that balances quick wins with long-term sustainability. Start with LaoZhang.ai or a similar API gateway to resolve access issues right away while benefiting from lower costs. The free trial credits let you check whether the service fits your needs before committing.

Simultaneously, evaluate your long-term requirements and regulatory obligations. If your organization requires official support and compliance guarantees, begin planning a Vertex AI implementation as your permanent solution. Use the cost savings from initial API gateway usage to fund this transition.

For ongoing optimization, monitor usage patterns, costs, and performance metrics. This data informs model selection, reveals optimization opportunities, and justifies infrastructure investments. Quarterly reviews keep your access strategy aligned with changing availability and business needs.
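A minimal starting point for this kind of monitoring is a per-model tracker like the Python sketch below. It assumes your application already knows the token count and latency of each call, and it takes a per-million-token price as input. The class names are hypothetical, and the prices you pass in should come from your provider's current rate card. In practice you would export these figures to your metrics system rather than keep them in memory.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict


@dataclass
class ModelStats:
    """Running totals for one model."""
    calls: int = 0
    tokens: int = 0
    cost_usd: float = 0.0
    total_latency_s: float = 0.0

    @property
    def avg_latency_s(self) -> float:
        return self.total_latency_s / self.calls if self.calls else 0.0


class UsageTracker:
    """Accumulates per-model usage so periodic reviews are based on real numbers."""

    def __init__(self) -> None:
        self._stats: Dict[str, ModelStats] = defaultdict(ModelStats)

    def record(self, model: str, tokens: int, latency_s: float,
               usd_per_million_tokens: float) -> None:
        """Log one completed request against `model`."""
        s = self._stats[model]
        s.calls += 1
        s.tokens += tokens
        s.total_latency_s += latency_s
        s.cost_usd += tokens * usd_per_million_tokens / 1_000_000

    def report(self) -> Dict[str, ModelStats]:
        """Snapshot of stats keyed by model name."""
        return dict(self._stats)

    def total_cost_usd(self) -> float:
        return sum(s.cost_usd for s in self._stats.values())


if __name__ == "__main__":
    tracker = UsageTracker()
    tracker.record("model-a", tokens=1_000, latency_s=0.8, usd_per_million_tokens=5.0)
    tracker.record("model-a", tokens=3_000, latency_s=1.2, usd_per_million_tokens=5.0)
    stats = tracker.report()["model-a"]
    print(stats.calls, stats.tokens, round(stats.avg_latency_s, 2), round(stats.cost_usd, 4))
```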

Most importantly, build flexibility into your applications. Abstract AI provider interfaces to enable easy switching between services. Implement feature flags that allow graceful degradation if specific models become unavailable. This architectural approach provides resilience against future restrictions while enabling rapid adoption of new AI capabilities as they become available.
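As one way to combine an abstracted provider interface with feature flags, the Python sketch below codes the application against a small `typing.Protocol` rather than any specific SDK. `ChatProvider`, `StubProvider`, and the flag names are hypothetical. A real implementation would wrap each vendor's client behind `complete()` and read flags from your configuration or feature-flag service.

```python
from typing import Dict, List, Protocol


class ChatProvider(Protocol):
    """Minimal interface the application codes against (hypothetical)."""
    name: str

    def complete(self, prompt: str) -> str: ...


class StubProvider:
    """Stand-in for a real SDK wrapper; replace complete() with an actual client call."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def select_provider(providers: Dict[str, ChatProvider], flags: Dict[str, bool],
                    preference: List[str]) -> ChatProvider:
    """Return the first provider in `preference` whose feature flag is switched on."""
    for key in preference:
        if flags.get(key, False) and key in providers:
            return providers[key]
    raise LookupError("no enabled provider; caller should show a degraded-mode message")


if __name__ == "__main__":
    providers = {"gemini": StubProvider("gemini"), "claude": StubProvider("claude")}
    # e.g. Gemini flagged off after a new regional restriction
    flags = {"gemini": False, "claude": True}
    provider = select_provider(providers, flags, ["gemini", "claude"])
    print(provider.complete("hello"))  # prints: [claude] hello
```

Because callers depend only on `complete()`, disabling a model is a configuration change rather than a code change.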

The landscape of AI accessibility will continue evolving rapidly throughout 2025 and beyond. By choosing flexible, cost-effective solutions today and maintaining adaptability for tomorrow, organizations can harness the full power of AI regardless of geographic restrictions. Whether through official channels, API gateways, or hybrid approaches, the key is taking action now to ensure uninterrupted access to these transformative technologies.

Conclusion

Regional restrictions on AI services like Google Gemini are a significant but surmountable challenge in 2025. The solutions reviewed here range from simple VPN setups to API gateway services, and together they show that geographic limitations need not block innovation or development progress.

On balance, the comparison favors API gateway solutions, particularly LaoZhang.ai, for most users. They offer immediate global access, cost savings of up to 70%, and unified multi-model capabilities, which turns a compliance challenge into a competitive advantage. Configuration takes minutes, in sharp contrast to the ongoing effort of maintaining individual workarounds.

As the AI landscape continues to evolve, flexibility and adaptability remain paramount. Start with LaoZhang.ai's free trial today at https://api.laozhang.ai/register/?aff_code=JnIT to experience unrestricted access to Gemini and other leading AI models. Build resilient applications that gracefully handle changing restrictions while optimizing costs and performance.

The future belongs to those who act decisively to overcome artificial barriers to innovation. Don't let regional restrictions limit your potential: implement a comprehensive solution today and join the global AI revolution on equal terms.