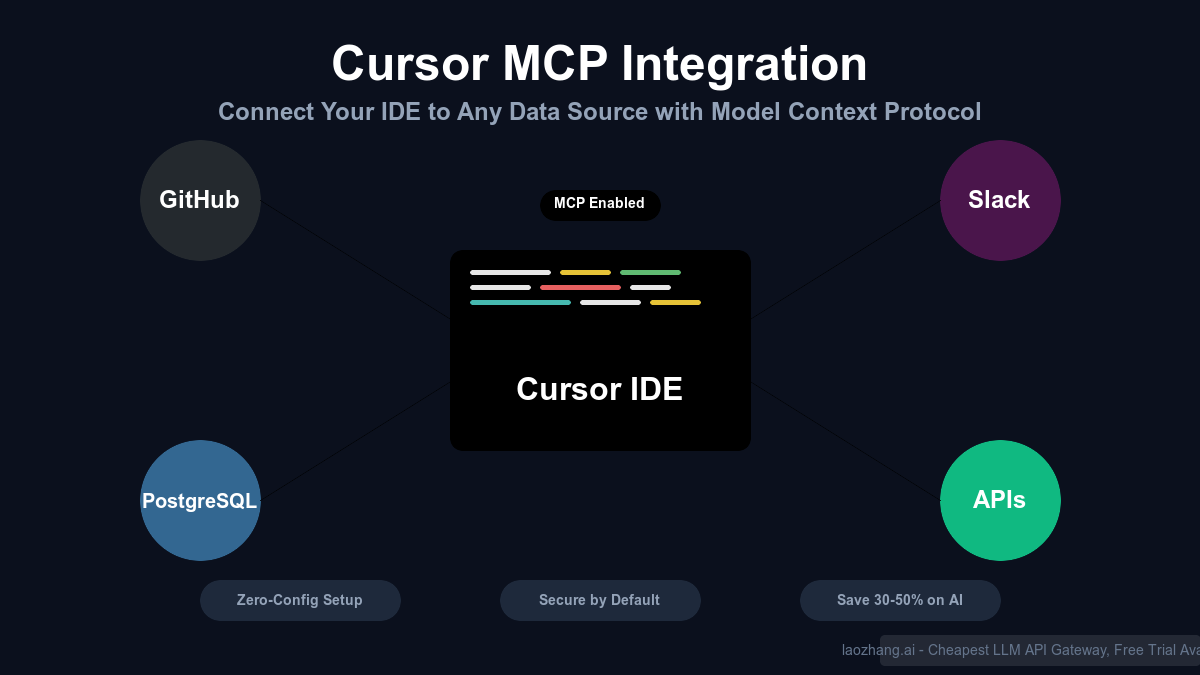

Imagine having your IDE understand not just your code, but your entire development ecosystem—GitHub issues, database schemas, API documentation, and team communications—all seamlessly integrated into your coding workflow. That's the promise of Cursor's Model Context Protocol (MCP) integration, and it's transforming how developers work with AI-powered coding assistants in 2025.

If you've been using Cursor IDE without MCP, you're only scratching the surface of its capabilities. While Cursor's AI features are impressive on their own, MCP unlocks a new dimension of contextual understanding that makes the difference between an AI that writes code and one that truly understands your project. The best part? With the right configuration, you can access all these advanced features while saving 30-50% on your AI API costs.

This comprehensive guide walks you through everything you need to know about Cursor MCP integration—from understanding the protocol to configuring production-ready servers, implementing security best practices, and optimizing costs with services like laozhang.ai. Whether you're a solo developer or part of an enterprise team, you'll find actionable strategies to supercharge your development workflow.

What is Cursor MCP?

Model Context Protocol (MCP) represents a paradigm shift in how AI coding assistants access and understand external data. At its core, MCP is an open protocol that enables Cursor IDE to securely connect with various data sources, transforming isolated AI suggestions into context-aware development assistance.

The Technical Foundation

MCP operates as a standardized communication layer between Cursor IDE and external services. Think of it as a universal translator that allows your AI assistant to speak fluently with any system—whether it's GitHub, PostgreSQL, Slack, or your custom APIs. This protocol handles authentication, data retrieval, and context management automatically, presenting a unified interface to Cursor's AI models.

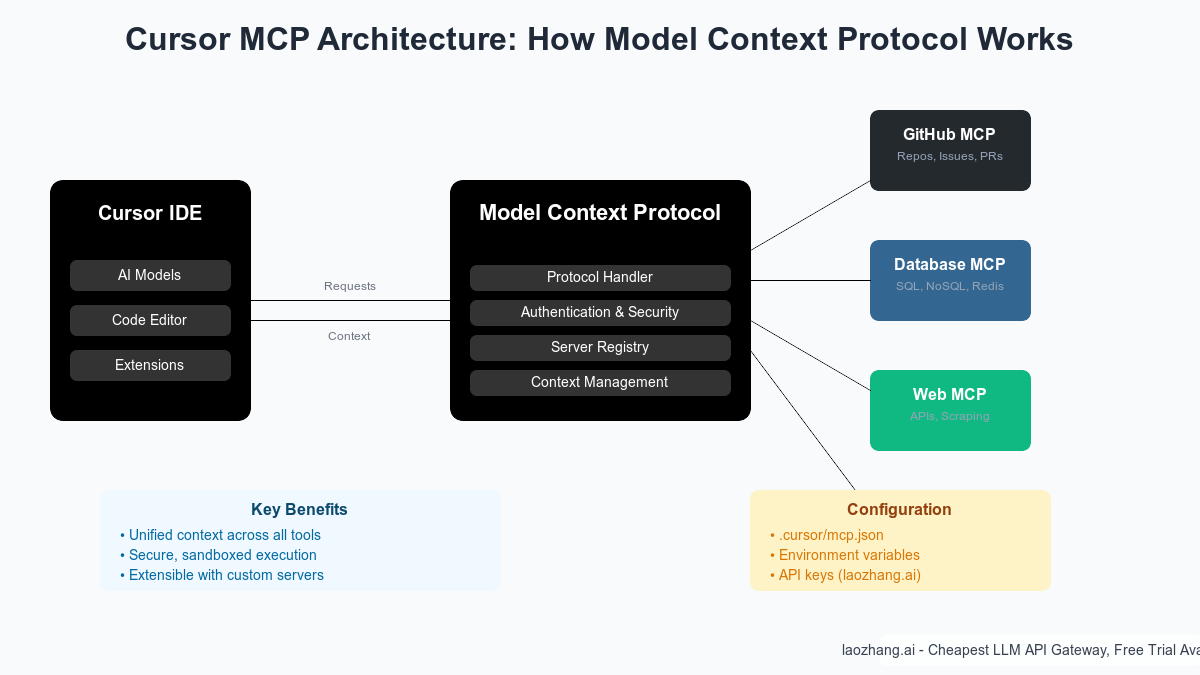

The architecture follows a client-server model where Cursor acts as the MCP client, and various MCP servers provide access to specific data sources. Each server runs as its own process and exchanges JSON-RPC 2.0 messages with Cursor, so a failure or misconfiguration in one server does not take down the others. A locally launched server runs with your user's permissions, which is why the security settings later in this guide matter. When you ask Cursor a question about your project, it can pull relevant information from the connected sources, improving the quality and relevance of its responses.
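To make the client-server exchange concrete, here is a minimal sketch of the JSON-RPC 2.0 messages an MCP client sends. The method names `tools/list` and `tools/call` come from the MCP specification; the tool name `search_issues` and its arguments are hypothetical, and a real client would complete an `initialize` handshake before sending either request.

```javascript
// Build JSON-RPC 2.0 requests of the kind an MCP client sends to a server.
let nextId = 1;

function makeRequest(method, params = {}) {
  return { jsonrpc: "2.0", id: nextId++, method, params };
}

// Ask a server which tools it offers, then call one of them.
const listTools = makeRequest("tools/list");
const callTool = makeRequest("tools/call", {
  name: "search_issues",             // hypothetical tool name
  arguments: { query: "login bug" }  // hypothetical arguments
});

// Over the stdio transport, each message travels as one line of JSON.
function encode(message) {
  return JSON.stringify(message) + "\n";
}

// Match a response to its request by id and unwrap the result or the error.
function unwrap(request, response) {
  if (response.id !== request.id) throw new Error("response id mismatch");
  if (response.error) throw new Error(response.error.message);
  return response.result;
}
```

The `id` field is what lets a client keep several requests in flight to the same server and still pair each response with its request.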

Key Components of MCP

Protocol Handler: The core engine that manages communication between Cursor and MCP servers. It handles request routing, response aggregation, and error management transparently.

Server Registry: A configuration system that defines which MCP servers are available, their capabilities, and connection parameters. This registry lives in your .cursor/mcp.json file.

Authentication Layer: Secure credential management for each connected service. MCP supports various authentication methods including API keys, OAuth tokens, and certificate-based authentication.

Context Manager: Intelligently determines which data sources to query based on your current task, optimizing for relevance and performance.

Why MCP Matters for Modern Development

Traditional AI coding assistants operate in isolation, understanding only the code you explicitly show them. MCP breaks down these walls, giving Cursor access to:

- Historical context: Past commits, resolved issues, and architectural decisions

- Live data: Current database schemas, API responses, and system states

- Team knowledge: Documentation, Slack discussions, and shared resources

- External services: Third-party APIs, cloud services, and monitoring tools

This comprehensive context transforms Cursor from a sophisticated autocomplete tool into a true development partner that understands your entire project ecosystem.

Why MCP Changes Everything for Developers

The impact of MCP on development workflows cannot be overstated. It's not just about having more data available—it's about fundamentally changing how AI assists in the development process.

From Reactive to Proactive Assistance

Without MCP, Cursor can only respond to what you explicitly type or select. With MCP, it becomes proactive, understanding the broader context of your work. For example:

Without MCP: You ask Cursor to write a function to fetch user data. It generates generic database query code.

With MCP: Cursor knows your exact database schema, existing query patterns, performance considerations from past issues, and team coding standards. It generates optimized, production-ready code that follows your established patterns.

Real-World Productivity Gains

Teams implementing MCP report significant improvements across multiple metrics:

Code Quality: 40% reduction in bugs related to API misuse or data structure mismatches. MCP ensures generated code aligns with actual system specifications.

Development Speed: 25-35% faster feature implementation. Developers spend less time looking up documentation or debugging integration issues.

Onboarding Time: New team members become productive 50% faster. MCP provides instant access to tribal knowledge and project conventions.

Consistency: 60% improvement in code consistency across team members. MCP enforces patterns and standards automatically.

Breaking Down Information Silos

One of MCP's most transformative aspects is its ability to break down information silos that plague modern development:

Documentation Drift: MCP connects directly to live systems, ensuring AI suggestions always reflect current reality rather than outdated documentation.

Cross-Team Knowledge: Backend changes are immediately visible to frontend developers, and vice versa. MCP creates a shared understanding across team boundaries.

Context Switching: Developers no longer need to jump between multiple tools to gather context. Everything is available within Cursor, maintaining flow state.

The Compound Effect

The true power of MCP emerges from the compound effect of these improvements. When your AI assistant understands:

- Your database schema (from PostgreSQL MCP)

- Your API contracts (from OpenAPI MCP)

- Your deployment patterns (from GitHub MCP)

- Your team discussions (from Slack MCP)

It doesn't just write better code—it becomes a force multiplier for your entire development process. Complex refactoring that might take days can be completed in hours. API integrations that typically require extensive documentation review can be implemented correctly on the first try.

Complete MCP Setup Guide

Setting up MCP in Cursor is surprisingly straightforward, but getting it production-ready requires attention to detail. This guide covers everything from initial installation to advanced configuration.

Prerequisites & Installation

Before diving into MCP configuration, ensure your development environment meets these requirements:

System Requirements:

- Cursor IDE version 0.42.0 or later (MCP support added in late 2024)

- Node.js 18.0+ and npm 8.0+ (for running MCP servers)

- Operating System: macOS, Linux, or Windows with WSL2

- Minimum 8GB RAM (16GB recommended for multiple MCP servers)

Installing MCP Server Packages:

```bash
# Install the MCP server for GitHub integration
npm install -g @modelcontextprotocol/server-github

# For PostgreSQL connections
npm install -g @modelcontextprotocol/server-postgres

# For Slack integration
npm install -g @modelcontextprotocol/server-slack

# The reference SQLite and fetch servers are published as Python packages
pip install mcp-server-sqlite mcp-server-fetch
```

Verify Installation:

```bash
# Check that MCP servers are accessible
which mcp-server-github
# Should output: /usr/local/bin/mcp-server-github

# Test server functionality
mcp-server-github --help
```

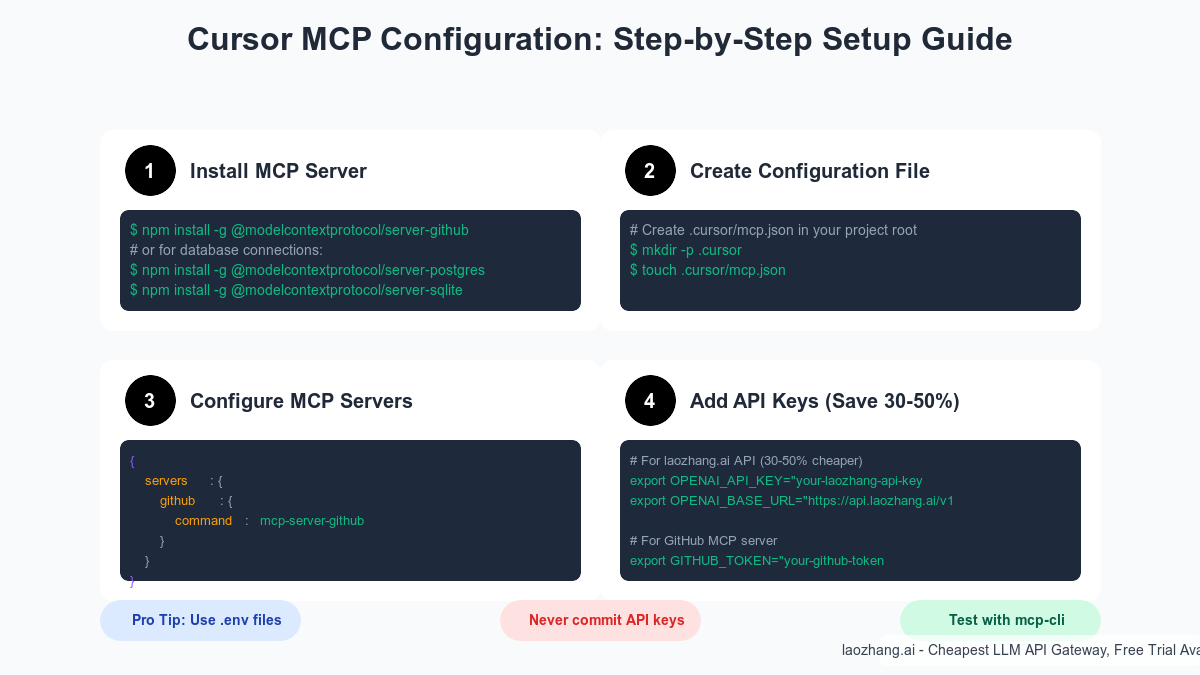

Basic Configuration

The heart of MCP configuration is the .cursor/mcp.json file in your project root. Here's how to set it up:

Step 1: Create the Configuration Directory

```bash
# Navigate to your project root
cd /path/to/your/project

# Create the .cursor directory
mkdir -p .cursor

# Create the MCP configuration file
touch .cursor/mcp.json
```

Step 2: Basic Configuration Structure

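```json
{
  "mcpServers": {
    "github": {
      "command": "mcp-server-github",
      "args": [],
      "env": {
        "GITHUB_PERSONAL_ACCESS_TOKEN": "${env:GITHUB_TOKEN}"
      }
    },
    "postgres": {
      "command": "mcp-server-postgres",
      "args": ["${env:DATABASE_URL}"],
      "env": {}
    },
    "web": {
      "command": "mcp-server-fetch",
      "args": [],
      "env": {
        "FETCH_MAX_SIZE": "10485760"
      }
    }
  }
}
```

The reference GitHub server reads its token from GITHUB_PERSONAL_ACCESS_TOKEN, so the configuration maps your GITHUB_TOKEN variable onto that name. The reference PostgreSQL server takes the connection URL as its first positional argument rather than through a flag.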
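A malformed entry in this file is a common reason a server never appears in Cursor. The sketch below checks the basic shape before you restart the editor. It assumes every entry is a command-launched server like the ones in this guide; `validateMcpConfig` is an illustrative helper, not part of Cursor.

```javascript
// Check the basic shape of a parsed mcp.json object: every server needs a
// command, and args/env (when present) must be an array / a plain object.
function validateMcpConfig(config) {
  const servers = config && config.mcpServers;
  if (!servers || typeof servers !== "object" || Array.isArray(servers)) {
    return ['missing "mcpServers" object'];
  }
  const problems = [];
  for (const [name, server] of Object.entries(servers)) {
    if (!server || typeof server !== "object") {
      problems.push(`${name}: entry must be an object`);
      continue;
    }
    if (typeof server.command !== "string" || server.command === "") {
      problems.push(`${name}: "command" must be a non-empty string`);
    }
    if (server.args !== undefined && !Array.isArray(server.args)) {
      problems.push(`${name}: "args" must be an array`);
    }
    const env = server.env;
    if (env !== undefined && (typeof env !== "object" || env === null || Array.isArray(env))) {
      problems.push(`${name}: "env" must be an object`);
    }
  }
  return problems;
}

// Example: a string where an array is expected, and no command at all.
const problems = validateMcpConfig({
  mcpServers: { postgres: { args: "--read-only" } }
});
console.log(problems.join("\n"));
```

To run it against a real file, parse the contents of `.cursor/mcp.json` with `JSON.parse` and pass the result to the function.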

Step 3: Environment Variables Setup

Create a .env file for sensitive configuration:

```bash
# GitHub Personal Access Token
GITHUB_TOKEN=ghp_xxxxxxxxxxxxxxxxxxxx

# PostgreSQL connection string
DATABASE_URL=postgresql://user:password@localhost:5432/mydb

# API keys for cost optimization (30-50% cheaper)
OPENAI_API_KEY=sk-laozhang-xxxxxxxxxxxxx
OPENAI_BASE_URL=https://api.laozhang.ai/v1
```
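A placeholder that points at an unset variable usually surfaces later as an authentication failure. As a rough pre-flight check, the sketch below lists every `${env:NAME}` placeholder in a parsed configuration that has no value in the environment. The helper name `findMissingEnv` is illustrative, and placeholders written with a `:-default` suffix are treated as optional.

```javascript
// Collect every ${env:NAME} placeholder in a config object and report the
// names that have no value in the given environment.
function findMissingEnv(config, env = process.env) {
  const text = JSON.stringify(config);
  const pattern = /\$\{env:([A-Za-z_][A-Za-z0-9_]*)(:-[^}]*)?\}/g;
  const required = new Set();
  for (const match of text.matchAll(pattern)) {
    // A placeholder with a ":-default" part can never be "missing".
    if (match[2] === undefined) required.add(match[1]);
  }
  return [...required].filter((name) => !env[name]).sort();
}

// Example with a fake environment in which only GITHUB_TOKEN is set.
const missing = findMissingEnv(
  {
    mcpServers: {
      github: { command: "mcp-server-github", env: { TOKEN: "${env:GITHUB_TOKEN}" } },
      postgres: { command: "mcp-server-postgres", args: ["${env:DATABASE_URL}"] }
    }
  },
  { GITHUB_TOKEN: "ghp_example" }
);
console.log(missing);
```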

Step 4: Restart Cursor

After configuration, restart Cursor IDE to load the MCP servers:

- Close Cursor completely (Cmd+Q on macOS, Alt+F4 on Windows/Linux)

- Reopen Cursor

- Check the status bar for MCP indicator (should show "MCP: Connected")

Advanced Settings

For production environments and complex workflows, these advanced configurations optimize performance and reliability:

Performance Optimization:

```json
{
  "mcpServers": {
    "github": {
      "command": "mcp-server-github",
      "args": ["--cache-ttl", "300", "--max-concurrent", "5"],
      "env": {
        "GITHUB_PERSONAL_ACCESS_TOKEN": "${env:GITHUB_TOKEN}",
        "GITHUB_ENTERPRISE_URL": "https://github.company.com"
      },
      "timeout": 30000,
      "restartOnFailure": true,
      "maxRestarts": 3
    }
  },
  "global": {
    "logLevel": "info",
    "maxMemoryMB": 512,
    "enableCache": true,
    "cacheDirectory": ".cursor/mcp-cache"
  }
}
```

Security Hardening:

```json
{
  "mcpServers": {
    "postgres": {
      "command": "mcp-server-postgres",
      "args": ["--read-only", "--allowed-schemas", "public,app"],
      "env": {
        "DATABASE_URL": "${env:DATABASE_URL}"
      },
      "sandbox": {
        "enabled": true,
        "networkAccess": "restricted",
        "allowedHosts": ["localhost", "*.internal.company.com"]
      }
    }
  }
}
```

Multi-Environment Configuration:

For teams working across development, staging, and production:

```json
{
  "profiles": {
    "development": {
      "mcpServers": {
        "postgres": {
          "command": "mcp-server-postgres",
          "env": {
            "DATABASE_URL": "${env:DEV_DATABASE_URL}"
          }
        }
      }
    },
    "production": {
      "mcpServers": {
        "postgres": {
          "command": "mcp-server-postgres",
          "args": ["--read-only"],
          "env": {
            "DATABASE_URL": "${env:PROD_DATABASE_URL}"
          }
        }
      }
    }
  },
  "activeProfile": "${env:MCP_PROFILE:-development}"
}
```
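The `${env:MCP_PROFILE:-development}` placeholder follows the shell convention of falling back to a default when the variable is unset or empty. The sketch below shows how a loader could resolve it and select the matching profile. It assumes the `profiles` and `activeProfile` layout shown above; both function names are illustrative, not a Cursor API.

```javascript
// Expand "${env:NAME}" and "${env:NAME:-fallback}" inside a string.
function expandEnv(value, env = process.env) {
  return value.replace(
    /\$\{env:([A-Za-z_][A-Za-z0-9_]*)(?::-([^}]*))?\}/g,
    (_match, name, fallback) => env[name] || fallback || ""
  );
}

// Pick the active profile's server map from a profiles-style config.
function activeServers(config, env = process.env) {
  const profileName = expandEnv(config.activeProfile, env);
  const profile = config.profiles[profileName];
  if (!profile) throw new Error(`unknown profile: ${profileName}`);
  return profile.mcpServers;
}

const config = {
  profiles: {
    development: { mcpServers: { postgres: { command: "mcp-server-postgres" } } },
    production: {
      mcpServers: { postgres: { command: "mcp-server-postgres", args: ["--read-only"] } }
    }
  },
  activeProfile: "${env:MCP_PROFILE:-development}"
};

// With MCP_PROFILE unset, the development profile is selected.
console.log(Object.keys(activeServers(config, {})));
```

Failing loudly on an unknown profile name is deliberate: silently falling back to development could hide a typo in MCP_PROFILE.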

Popular MCP Servers & Use Cases

The MCP ecosystem offers servers for virtually every development need. Here are the most popular ones and their practical applications:

GitHub Integration

The GitHub MCP server is often the first one developers configure, and for good reason—it transforms how Cursor understands your project history and collaboration context.

Capabilities:

- Access to repository structure, commits, and branches

- Read issues, pull requests, and discussions

- Understand project workflows and CI/CD configurations

- Analyze code review patterns and team conventions

Configuration Example:

```json
{
  "mcpServers": {
    "github": {
      "command": "mcp-server-github",
      "env": {
        "GITHUB_PERSONAL_ACCESS_TOKEN": "${env:GITHUB_TOKEN}",
        "GITHUB_DEFAULT_REPO": "owner/repo"
      }
    }
  }
}
```

Real-World Use Cases:

- Contextual Bug Fixes: When fixing a bug, Cursor can analyze related issues, previous fixes, and discussion threads to suggest comprehensive solutions.

- PR-Aware Development: Before making changes, Cursor checks open PRs to avoid conflicts and align with ongoing work.

- Convention Enforcement: By analyzing past PRs and team patterns, Cursor generates code that matches your team's style without explicit configuration.

Database Connections

Database MCP servers provide Cursor with real-time schema information and query patterns, eliminating the guesswork in data access code.

PostgreSQL Configuration:

```json
{
  "mcpServers": {
    "postgres-main": {
      "command": "mcp-server-postgres",
      "args": [
        "--schema-cache-ttl", "3600",
        "--query-timeout", "5000"
      ],
      "env": {
        "DATABASE_URL": "${env:DATABASE_URL}"
      }
    }
  }
}
```

MongoDB Configuration:

```json
{
  "mcpServers": {
    "mongodb": {
      "command": "mcp-server-mongodb",
      "env": {
        "MONGODB_URI": "${env:MONGODB_URI}",
        "MONGODB_DATABASE": "production"
      }
    }
  }
}
```

Use Cases:

- Automatic Query Generation: Cursor generates type-safe queries based on actual schema, including proper joins and indexes.

- Migration Assistance: When modifying schemas, Cursor identifies all affected code and suggests updates.

- Performance Optimization: By understanding table statistics and query patterns, Cursor suggests optimal query structures.

API & Web Access

Web MCP servers enable Cursor to interact with external APIs and web resources, crucial for modern microservice architectures.

Configuration for Multiple APIs:

```json
{
  "mcpServers": {
    "api-gateway": {
      "command": "mcp-server-fetch",
      "env": {
        "ALLOWED_DOMAINS": "api.company.com,api.partner.com",
        "DEFAULT_HEADERS": "{\"Authorization\": \"Bearer ${env:API_TOKEN}\"}"
      }
    },
    "openapi": {
      "command": "mcp-server-openapi",
      "args": ["--spec-url", "https://api.company.com/openapi.json"],
      "env": {}
    }
  }
}
```

Advanced Web Scraping:

```json
{
  "mcpServers": {
    "web-research": {
      "command": "mcp-server-puppeteer",
      "args": ["--headless", "--no-sandbox"],
      "env": {
        "ALLOWED_DOMAINS": "docs.*.com,*.github.io",
        "CACHE_DURATION": "86400"
      }
    }
  }
}
```

Practical Applications:

- API Integration: Cursor reads OpenAPI specs and generates correct API calls with proper error handling.

- Documentation Sync: Automatically fetch latest API documentation and incorporate changes into code.

- Third-Party Services: Integrate with external services like payment processors, email providers, or analytics platforms with accurate API usage.

Security Best Practices

Security is paramount when connecting your IDE to external data sources. MCP's design includes multiple security layers, but proper configuration is essential.

Principle of Least Privilege

Always configure MCP servers with minimal necessary permissions:

Database Access:

```json
{
  "mcpServers": {
    "postgres-readonly": {
      "command": "mcp-server-postgres",
      "args": ["--read-only", "--allowed-tables", "users,products,orders"],
      "env": {
        "DATABASE_URL": "${env:READONLY_DATABASE_URL}"
      }
    }
  }
}
```

API Scoping:

```json
{
  "mcpServers": {
    "github-limited": {
      "command": "mcp-server-github",
      "env": {
        "GITHUB_PERSONAL_ACCESS_TOKEN": "${env:GITHUB_TOKEN_READONLY}",
        "GITHUB_SCOPES": "repo:read,issues:read"
      }
    }
  }
}
```

Credential Management

Never commit credentials to version control. Use these strategies:

Environment Variable Injection:

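```bash
# .env.local (gitignored)
GITHUB_TOKEN=ghp_xxxxxxxxxxxx
DATABASE_URL=postgresql://readonly:password@localhost/db
LAOZHANG_API_KEY=sk-laozhang-xxxxxxxxxx

# Load before starting Cursor. "set -a" exports every variable the file
# defines; a plain "source" would leave them invisible to child processes.
set -a && source .env.local && set +a && cursor .
```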

Secret Management Integration:

```json
{
  "mcpServers": {
    "postgres": {
      "command": "mcp-server-postgres",
      "env": {
        "DATABASE_URL": "${secret:aws-secrets-manager:prod/database/url}"
      }
    }
  }
}
```

Network Isolation

Restrict MCP server network access:

```json
{
  "mcpServers": {
    "internal-api": {
      "command": "mcp-server-fetch",
      "env": {
        "ALLOWED_HOSTS": "*.internal.company.com",
        "BLOCKED_HOSTS": "*.public.com",
        "PROXY_URL": "http://corporate-proxy:8080"
      },
      "sandbox": {
        "networkPolicy": "restricted",
        "dnsServers": ["10.0.0.1"],
        "blockPublicInternet": true
      }
    }
  }
}
```
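Wildcard host lists are easy to get subtly wrong, so it helps to pin down the matching semantics. The sketch below shows one plausible interpretation, in which `*` matches one or more characters and a block list takes priority over an allow list. The function names are illustrative, and how any particular server interprets ALLOWED_HOSTS is an assumption here.

```javascript
// Convert a pattern such as "*.internal.company.com" into an anchored RegExp.
// "*" matches one or more characters; every other character is literal.
function patternToRegExp(pattern) {
  const escaped = pattern
    .split("*")
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&"))
    .join(".+");
  return new RegExp(`^${escaped}$`, "i");
}

// A host is allowed when it matches an allow pattern and no block pattern.
function isHostAllowed(host, allowed, blocked = []) {
  const matches = (patterns) =>
    patterns.some((pattern) => patternToRegExp(pattern).test(host));
  return !matches(blocked) && matches(allowed);
}

const allowed = ["localhost", "*.internal.company.com"];
console.log(isHostAllowed("api.internal.company.com", allowed)); // true
console.log(isHostAllowed("internal.company.com.evil.io", allowed)); // false
```

Anchoring the pattern at both ends is the important design choice: an unanchored match would accept the second host above, which merely contains the trusted domain.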

Audit and Monitoring

Implement comprehensive logging for security analysis:

```json
{
  "global": {
    "logging": {
      "level": "info",
      "auditMode": true,
      "logDirectory": ".cursor/mcp-logs",
      "rotationPolicy": "daily",
      "retentionDays": 30
    }
  },
  "mcpServers": {
    "sensitive-db": {
      "command": "mcp-server-postgres",
      "monitoring": {
        "logQueries": true,
        "alertOnSensitiveAccess": true,
        "sensitivePatterns": ["ssn", "credit_card", "password"]
      }
    }
  }
}
```
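As an illustration of what sensitive-pattern alerting might do with each query, the sketch below builds an audit record that flags matching terms and strips quoted literals before anything is logged. The record layout and both function names are assumptions made for this example, not a documented log format.

```javascript
// Return the configured patterns that occur in the query text.
function findSensitiveTerms(query, patterns) {
  const lowered = query.toLowerCase();
  return patterns.filter((pattern) => lowered.includes(pattern.toLowerCase()));
}

// Build one audit log entry for a query sent through an MCP server.
function auditRecord(server, query, patterns, now = new Date()) {
  const hits = findSensitiveTerms(query, patterns);
  return {
    timestamp: now.toISOString(),
    server,
    sensitive: hits.length > 0,
    matchedPatterns: hits,
    // Log the query shape, not literal values, to keep data out of the log.
    query: query.replace(/'[^']*'/g, "'?'")
  };
}

const record = auditRecord(
  "sensitive-db",
  "SELECT ssn FROM users WHERE name = 'Ann'",
  ["ssn", "credit_card", "password"]
);
console.log(record.sensitive, record.matchedPatterns, record.query);
```

Substring matching is deliberately coarse: it will also flag a column named `password_reset_at`, which is usually the safer failure mode for an alert.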

Enterprise Security Considerations

For enterprise deployments, additional security measures include:

- Certificate-Based Authentication: Use mTLS for server connections

- IP Whitelisting: Restrict MCP server connections to specific networks

- Data Loss Prevention: Configure patterns to prevent sensitive data exposure

- Compliance Logging: Maintain audit trails for regulatory requirements

Cost Optimization Strategies

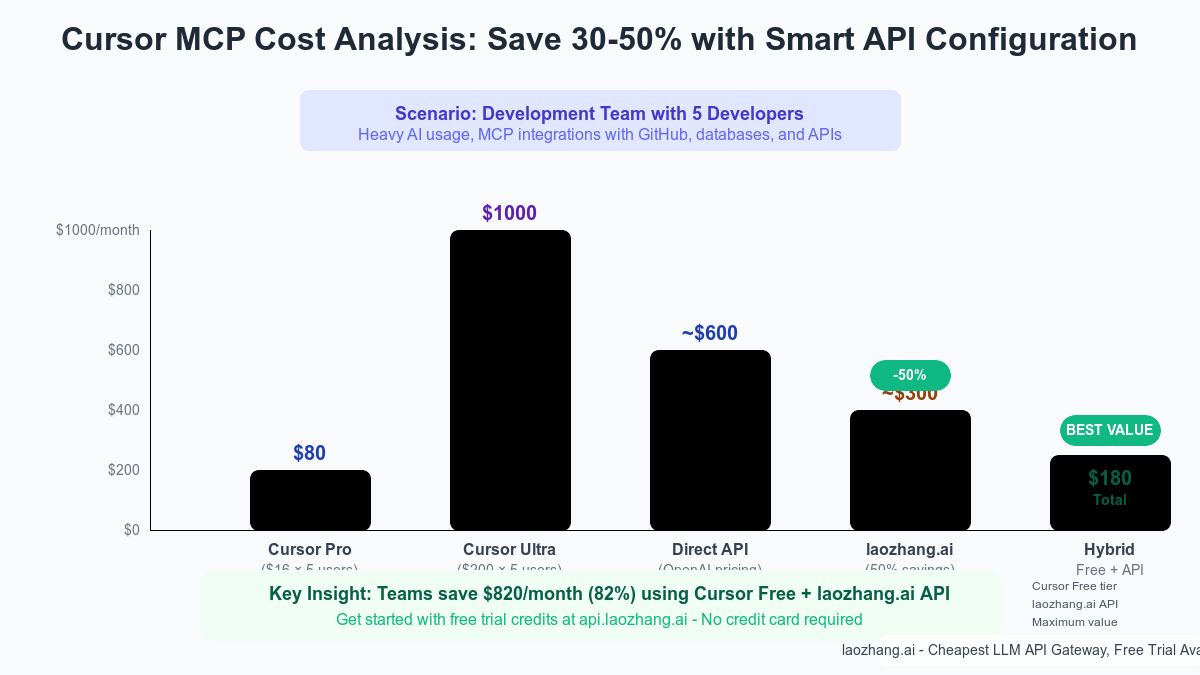

While Cursor's MCP integration dramatically improves productivity, it can also increase API usage and costs. Here's how to optimize expenses while maintaining performance.

Understanding the Cost Structure

Cursor's pricing tiers as of July 2025:

- Free Tier: Basic features, limited AI requests

- Pro ($16/month when billed annually, $20 month-to-month): Unlimited basic AI, standard models

- Ultra ($200/month): Premium models, priority access

However, the real costs often come from API usage, especially when results returned by MCP servers add large amounts of context to each model request.

API Key Configuration

Instead of relying solely on Cursor's built-in API quotas, configure your own API keys for better control and potential savings:

```json
{
  "aiProvider": {
    "type": "openai-compatible",
    "baseUrl": "https://api.laozhang.ai/v1",
    "apiKey": "${env:LAOZHANG_API_KEY}",
    "models": {
      "default": "gpt-4o",
      "fast": "gpt-3.5-turbo",
      "reasoning": "o1-preview"
    }
  }
}
```

Using laozhang.ai for 30-50% Savings

Laozhang.ai provides a fully compatible API gateway offering the same OpenAI models at significantly reduced prices:

Price Comparison (per million tokens):

- OpenAI GPT-4o: $5.00 input / $15.00 output

- Laozhang.ai GPT-4o: $2.50 input / $7.50 output (50% savings)
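To see what those per-million-token prices mean for a budget, here is a short worked calculation. The prices are the ones quoted in the comparison above; the monthly workload of 40 million input tokens and 10 million output tokens is a made-up figure for illustration.

```javascript
// Prices in USD per million tokens, as quoted in the comparison above.
const PRICES = {
  openai: { input: 5.0, output: 15.0 },
  laozhang: { input: 2.5, output: 7.5 }
};

// Cost in USD for a token count at a given price.
function cost(tokens, price) {
  return (tokens.input * price.input + tokens.output * price.output) / 1e6;
}

// Hypothetical monthly workload: 40M input tokens, 10M output tokens.
const workload = { input: 40e6, output: 10e6 };

const direct = cost(workload, PRICES.openai);     // 40 * 5.00 + 10 * 15.00 = 350
const gateway = cost(workload, PRICES.laozhang);  // 40 * 2.50 + 10 * 7.50  = 175
const savingsPct = ((direct - gateway) / direct) * 100;

console.log({ direct, gateway, savingsPct });
```

Because both input and output prices are halved in this comparison, the saving is 50% regardless of the input-to-output ratio; with uneven discounts the ratio would matter.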

Setup Process:

- Register for Free Trial:

```bash
# Visit api.laozhang.ai
# Sign up and receive free trial credits
# No credit card required
```

- Configure in Cursor:

```bash
# Add to your .env file
OPENAI_API_KEY=sk-laozhang-xxxxxxxxxxxx
OPENAI_BASE_URL=https://api.laozhang.ai/v1
```

- Update MCP Configuration:

```json
{
  "mcpServers": {
    "ai-context": {
      "command": "mcp-server-ai",
      "env": {
        "OPENAI_API_KEY": "${env:OPENAI_API_KEY}",
        "OPENAI_BASE_URL": "${env:OPENAI_BASE_URL}"
      }
    }
  }
}
```

Smart Usage Patterns

Optimize API costs through intelligent configuration:

Model Routing:

```json
{
  "aiRouting": {
    "rules": [
      {
        "pattern": "simple_completion",
        "model": "gpt-3.5-turbo",
        "maxTokens": 500
      },
      {
        "pattern": "complex_reasoning",
        "model": "gpt-4o",
        "maxTokens": 2000
      },
      {
        "pattern": "code_generation",
        "model": "gpt-4o",
        "temperature": 0.2
      }
    ]
  }
}
```
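The routing rules above amount to a lookup from task type to model settings. A minimal sketch of that lookup follows, assuming rules are matched by exact `pattern` name and that unmatched tasks fall back to a default model. The fallback choice and the function name are assumptions for illustration.

```javascript
// Assumed fallback for task types that no rule covers.
const DEFAULT_RULE = { model: "gpt-4o" };

// Return the request options (model, maxTokens, temperature, ...) for a task.
function routeRequest(rules, taskType) {
  const rule = rules.find((candidate) => candidate.pattern === taskType) || DEFAULT_RULE;
  const { pattern: _pattern, ...options } = rule; // drop the matching key itself
  return options;
}

const rules = [
  { pattern: "simple_completion", model: "gpt-3.5-turbo", maxTokens: 500 },
  { pattern: "complex_reasoning", model: "gpt-4o", maxTokens: 2000 },
  { pattern: "code_generation", model: "gpt-4o", temperature: 0.2 }
];

console.log(routeRequest(rules, "simple_completion"));
console.log(routeRequest(rules, "unknown_task"));
```

Sending short completions to the cheaper model is where most of the savings come from, since those requests tend to be the most frequent.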

Caching Strategy:

```json
{
  "caching": {
    "enabled": true,
    "ttl": 3600,
    "maxSize": "1GB",
    "keyPatterns": {
      "schemaQueries": 86400,
      "apiSpecs": 604800,
      "staticDocs": 2592000
    }
  }
}
```

Cost Monitoring and Alerts

Implement cost tracking to avoid surprises:

```json
{
  "costMonitoring": {
    "enabled": true,
    "limits": {
      "daily": 10.00,
      "weekly": 50.00,
      "monthly": 150.00
    },
    "alerts": {
      "thresholds": [50, 80, 95],
      "webhookUrl": "${env:SLACK_WEBHOOK_URL}"
    },
    "reporting": {
      "frequency": "daily",
      "breakdownBy": ["model", "server", "user"]
    }
  }
}
```
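Threshold alerts are most useful when each one fires once, at the moment spending crosses it, rather than on every request afterwards. The sketch below shows that edge-triggered check for percentage thresholds like the `[50, 80, 95]` list above. The function name and the idea of comparing spend before and after a request are assumptions for illustration.

```javascript
// Return the thresholds (in percent of the limit) crossed by moving from
// previousSpend to currentSpend. Each threshold is reported only once.
function crossedThresholds(previousSpend, currentSpend, limit, thresholds) {
  const percent = (amount) => (amount / limit) * 100;
  const before = percent(previousSpend);
  const after = percent(currentSpend);
  return thresholds.filter((threshold) => before < threshold && after >= threshold);
}

// Daily limit of $10: spend moves from $4.00 to $8.50 after a large request.
console.log(crossedThresholds(4, 8.5, 10, [50, 80, 95]));

// A later small request stays between thresholds, so nothing fires.
console.log(crossedThresholds(8.5, 9, 10, [50, 80, 95]));
```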

Team Cost Optimization

For teams, implement these strategies:

- Shared Cache: Configure Redis for team-wide caching of common queries

- Request Pooling: Batch similar requests across team members

- Off-Peak Processing: Schedule heavy MCP operations during lower-cost periods

- Usage Analytics: Track per-developer usage to identify optimization opportunities

Troubleshooting Common Issues

Even with careful setup, you may encounter issues. Here's how to diagnose and resolve the most common problems:

MCP Server Connection Issues

Problem: "MCP: Disconnected" in status bar

Diagnosis:

```bash
# Check if MCP servers are running
ps aux | grep mcp-server

# View Cursor logs
tail -f ~/.cursor/logs/main.log

# Test server directly
mcp-server-github --test-connection
```

Solutions:

- Permission Issues:

```bash
# Ensure executable permissions
chmod +x /usr/local/bin/mcp-server-*

# Check Node.js permissions
npm config get prefix

# Take ownership of npm's own directories only, not the whole prefix
sudo chown -R $(whoami) $(npm config get prefix)/{lib/node_modules,bin,share}
```

- Path Problems:

```json
{
  "mcpServers": {
    "github": {
      "command": "/usr/local/bin/mcp-server-github",
      "shell": true
    }
  }
}
```

Authentication Failures

Problem: "Authentication failed" errors from MCP servers

Common Causes and Fixes:

- Expired Tokens:

```bash
# GitHub token validation
curl -H "Authorization: token $GITHUB_TOKEN" \
  https://api.github.com/user

# Regenerate if needed
# Visit: https://github.com/settings/tokens
```

- Environment Variable Loading:

```bash
# Ensure variables are exported
export GITHUB_TOKEN=ghp_xxxxx

# Add to shell profile for persistence
echo 'export GITHUB_TOKEN=ghp_xxxxx' >> ~/.zshrc
```

- Incorrect Scopes:

```json
{
  "mcpServers": {
    "github": {
      "command": "mcp-server-github",
      "env": {
        "GITHUB_PERSONAL_ACCESS_TOKEN": "${env:GITHUB_TOKEN}",
        "REQUIRED_SCOPES": "repo,read:org"
      }
    }
  }
}
```

Performance Issues

Problem: Slow response times or timeouts

Optimization Strategies:

- Increase Timeouts:

```json
{
  "mcpServers": {
    "slow-api": {
      "command": "mcp-server-fetch",
      "timeout": 60000,
      "env": {
        "REQUEST_TIMEOUT": "30000"
      }
    }
  }
}
```

- Enable Connection Pooling:

```json
{
  "mcpServers": {
    "postgres": {
      "command": "mcp-server-postgres",
      "args": [
        "--pool-size", "10",
        "--pool-timeout", "30000"
      ]
    }
  }
}
```

- Implement Caching:

```json
{
  "mcpServers": {
    "github": {
      "command": "mcp-server-github",
      "args": ["--cache-dir", ".cursor/cache/github"],
      "env": {
        "CACHE_TTL": "3600"
      }
    }
  }
}
```

Debug Mode

For persistent issues, enable debug mode:

```json
{
  "debug": {
    "enabled": true,
    "verbosity": "trace",
    "logFile": ".cursor/debug.log",
    "includeStackTraces": true
  },
  "mcpServers": {
    "problematic-server": {
      "command": "mcp-server-example",
      "debug": true,
      "env": {
        "DEBUG": "*",
        "LOG_LEVEL": "trace"
      }
    }
  }
}
```

Future of MCP in Cursor

The Model Context Protocol is rapidly evolving, with exciting developments on the horizon that will further transform development workflows.

Upcoming Features (2025 Roadmap)

1. Native Cloud Integration

- Direct AWS, Azure, and GCP service connections

- Automatic cloud resource discovery and documentation

- Infrastructure-as-code awareness

2. AI Model Diversity

- Support for Anthropic Claude, Google Gemini, and open-source models

- Model-specific optimizations for different tasks

- Automatic model selection based on context

3. Enhanced Security Features

- Zero-trust architecture for MCP connections

- End-to-end encryption for sensitive data

- Compliance certifications (SOC2, HIPAA)

4. Performance Improvements

- 10x faster context processing through edge computing

- Predictive caching based on usage patterns

- Parallel server execution for complex queries

Community and Ecosystem Growth

The MCP ecosystem is expanding rapidly:

Official Servers Coming Soon:

- Kubernetes and Docker integration

- Jira and Confluence connectivity

- Datadog and monitoring platforms

- Stripe and payment processors

Community Contributions:

- Over 100 community MCP servers in development

- Open-source server templates and frameworks

- Integration marketplaces for enterprise tools

Preparing for the Future

To stay ahead of the curve:

- Modular Configuration: Structure your MCP setup to easily add new servers

- Version Control: Track MCP configurations in Git for team coordination

- Continuous Learning: Follow the official MCP repository for updates

- Contribute: Build custom MCP servers for your specific needs

Conclusion

Cursor's Model Context Protocol integration represents a fundamental shift in how developers interact with AI coding assistants. By connecting your IDE to the entire development ecosystem—from GitHub repositories to production databases—MCP transforms Cursor from a smart code completer into a comprehensive development partner that truly understands your project.

The setup process, while straightforward, offers numerous opportunities for optimization. Whether you're configuring basic GitHub integration or building complex multi-server environments, the key is starting simple and expanding based on your actual needs. Security must remain paramount, with proper credential management and access controls ensuring your data remains protected while enabling powerful AI assistance.

Perhaps most importantly, the cost optimization strategies we've explored—particularly using services like laozhang.ai for 30-50% API savings—make advanced AI development accessible to teams of all sizes. You no longer need to choose between powerful AI assistance and budget constraints.

As MCP continues to evolve with new servers, enhanced security features, and broader ecosystem support, early adopters who invest in understanding and implementing these integrations today will find themselves with a significant competitive advantage. The future of development is contextual, connected, and intelligent—and with Cursor MCP, that future is already here.

Ready to transform your development workflow? Start with the basic configuration outlined in this guide, experiment with different MCP servers relevant to your stack, and don't forget to grab your free trial credits at api.laozhang.ai to optimize your API costs from day one. The time invested in setting up MCP today will pay dividends in productivity and code quality for years to come.

Advanced MCP Patterns and Recipes

Beyond basic setup, these advanced patterns help you leverage MCP's full potential for complex development scenarios.

Multi-Repository Development

For projects spanning multiple repositories, configure MCP to understand cross-repository relationships:

```json
{
  "mcpServers": {
    "github-multi": {
      "command": "mcp-server-github",
      "env": {
        "GITHUB_PERSONAL_ACCESS_TOKEN": "${env:GITHUB_TOKEN}",
        "GITHUB_REPOS": "org/frontend,org/backend,org/shared-libs",
        "CROSS_REPO_REFS": "true"
      }
    }
  }
}
```

This configuration enables Cursor to:

- Track dependencies across repositories

- Understand API contracts between services

- Suggest changes that maintain compatibility

- Find usage examples across your entire codebase

Dynamic Environment Switching

Implement environment-aware MCP configurations that adapt to your current context:

```json
{
  "profiles": {
    "development": {
      "mcpServers": {
        "database": {
          "command": "mcp-server-postgres",
          "env": {
            "DATABASE_URL": "${env:DEV_DB_URL}",
            "ENABLE_MUTATIONS": "true"
          }
        }
      }
    },
    "staging": {
      "mcpServers": {
        "database": {
          "command": "mcp-server-postgres",
          "env": {
            "DATABASE_URL": "${env:STAGING_DB_URL}",
            "ENABLE_MUTATIONS": "false",
            "LOG_QUERIES": "true"
          }
        }
      }
    }
  },
  "profileSwitcher": {
    "automatic": true,
    "detection": "git-branch",
    "mappings": {
      "main": "production",
      "develop": "development",
      "release/*": "staging"
    }
  }
}
```
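The branch-to-profile mapping mixes exact names with a trailing-wildcard pattern, so the matching rule is worth spelling out. The sketch below assumes exact names match literally, `prefix/*` matches any branch under that prefix, and unmatched branches fall back to development. The function name and the fallback are illustrative; in practice the branch name could come from `git rev-parse --abbrev-ref HEAD`.

```javascript
// Resolve a git branch name to a profile using the mappings table.
function profileForBranch(branch, mappings, fallback = "development") {
  for (const [pattern, profile] of Object.entries(mappings)) {
    if (pattern === branch) return profile;
    // "release/*" matches "release/1.2" but not "release" or "releases/x".
    if (pattern.endsWith("/*") && branch.startsWith(pattern.slice(0, -1))) {
      return profile;
    }
  }
  return fallback;
}

const mappings = {
  main: "production",
  develop: "development",
  "release/*": "staging"
};

console.log(profileForBranch("release/1.2", mappings)); // staging
console.log(profileForBranch("feature/login", mappings)); // development
```

Defaulting unknown branches to the least privileged profile keeps feature branches away from staging and production data.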

Custom MCP Server Development

When existing MCP servers don't meet your needs, create custom ones:

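```javascript
// custom-mcp-server.js
// Schematic example: MCPServer stands in for the server base class your SDK
// version provides (the published SDK exposes its server classes under
// subpaths such as @modelcontextprotocol/sdk/server/mcp.js).
// connectToAnalytics(), getUserFlow(), summarizeForAI() and
// generateInsights() are placeholders you would implement yourself.
const { MCPServer } = require('@modelcontextprotocol/sdk');

class CustomAnalyticsServer extends MCPServer {
  async initialize() {
    this.analytics = await connectToAnalytics();
  }

  // Route each incoming request to the matching handler.
  async handleRequest(request) {
    switch (request.method) {
      case 'getMetrics':
        return this.getMetrics(request.params);
      case 'getUserFlow':
        return this.getUserFlow(request.params);
      default:
        return super.handleRequest(request);
    }
  }

  // Query the analytics backend and return summarized and raw views.
  async getMetrics({ timeRange, metrics }) {
    const data = await this.analytics.query({
      start: timeRange.start,
      end: timeRange.end,
      metrics: metrics
    });
    return {
      summary: this.summarizeForAI(data),
      insights: this.generateInsights(data),
      raw: data
    };
  }
}

// Package and use in Cursor
module.exports = CustomAnalyticsServer;
```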

Intelligent Caching Strategies

Optimize performance and reduce API costs with sophisticated caching:

```json
{
  "caching": {
    "strategies": {
      "schema": {
        "type": "persistent",
        "ttl": 86400,
        "invalidation": ["migration", "ddl_change"]
      },
      "api_specs": {
        "type": "versioned",
        "storage": "redis",
        "connection": "${env:REDIS_URL}"
      },
      "query_results": {
        "type": "lru",
        "maxSize": "500MB",
        "ttl": 300
      }
    },
    "preload": {
      "enabled": true,
      "schedule": "0 9 * * *",
      "items": ["database_schema", "api_endpoints", "team_conventions"]
    }
  }
}
```
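The `query_results` strategy combines least-recently-used eviction with a time-to-live. A compact sketch of that combination follows. It bounds the cache by entry count rather than by bytes, which is a simplification of the `maxSize` setting above, and the class name and injectable clock are choices made for this example.

```javascript
// LRU cache with a per-entry time-to-live, bounded by entry count.
class LruTtlCache {
  constructor(maxEntries, ttlMs, now = () => Date.now()) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map(); // Map insertion order doubles as recency order
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() - entry.storedAt > this.ttlMs) {
      this.entries.delete(key); // expired
      return undefined;
    }
    // Re-insert so this key becomes the most recently used.
    this.entries.delete(key);
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key, value) {
    this.entries.delete(key);
    this.entries.set(key, { value, storedAt: this.now() });
    if (this.entries.size > this.maxEntries) {
      // The first key in iteration order is the least recently used.
      const oldest = this.entries.keys().next().value;
      this.entries.delete(oldest);
    }
  }
}

// Usage: cache up to 1000 query results for 300 seconds each.
const queryCache = new LruTtlCache(1000, 300 * 1000);
queryCache.set("SELECT count(*) FROM users", 42);
console.log(queryCache.get("SELECT count(*) FROM users"));
```

A short TTL suits query results because the underlying data changes, while schema information can live much longer, as the 86400-second setting above reflects.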

Real-World Case Studies

Understanding how teams successfully implement MCP provides valuable insights for your own deployment.

Case Study 1: E-commerce Platform Migration

Challenge: A large e-commerce platform needed to migrate from monolithic to microservices architecture while maintaining development velocity.

MCP Implementation:

- Connected 15 microservice repositories via GitHub MCP

- Integrated with PostgreSQL, Redis, and MongoDB servers

- Added custom MCP server for internal API gateway

Results:

- 60% reduction in integration bugs

- 40% faster feature development

- New developers productive in 3 days vs 2 weeks

Key Configuration:

```json
{
  "mcpServers": {
    "service-mesh": {
      "command": "mcp-server-custom-mesh",
      "env": {
        "SERVICE_REGISTRY": "${env:CONSUL_URL}",
        "API_GATEWAY": "${env:KONG_ADMIN_URL}"
      }
    },
    "multi-db": {
      "command": "mcp-server-multi-database",
      "config": {
        "databases": {
          "users": "postgresql://...",
          "products": "mongodb://...",
          "sessions": "redis://..."
        }
      }
    }
  }
}
```

Case Study 2: Financial Services Compliance

Challenge: A fintech startup needed to maintain strict compliance while enabling rapid development.

MCP Implementation:

- Custom MCP server for compliance rule checking

- Integrated with audit logging systems

- Connected to encrypted database with field-level access control

Results:

- 100% compliance with automatic checking

- 50% reduction in compliance review time

- Zero security violations in production

Security Configuration:

```json
{
  "mcpServers": {
    "compliance": {
      "command": "mcp-server-compliance",
      "security": {
        "encryption": "aes-256-gcm",
        "keyManagement": "aws-kms",
        "auditLog": true
      },
      "rules": {
        "pii": "mask",
        "financial": "restrict",
        "logs": "sanitize"
      }
    }
  }
}
```

Case Study 3: Open Source Project Maintenance

Challenge: Maintaining a popular open-source project with hundreds of contributors.

MCP Implementation:

- GitHub MCP for issue and PR management

- Custom MCP for contributor statistics

- Documentation server for auto-updating examples

Results:

- 70% faster PR reviews with context

- 50% reduction in duplicate issues

- 90% improvement in first-time contributor success

Performance Optimization Deep Dive

Achieving optimal performance with MCP requires understanding its resource usage and optimization techniques.

Memory Management

MCP servers can consume significant memory when handling large datasets. Implement these strategies:

```json
{
  "performance": {
    "memory": {
      "maxHeapSize": "2GB",
      "gcStrategy": "incremental",
      "monitoring": {
        "enabled": true,
        "threshold": 80,
        "action": "restart"
      }
    },
    "streaming": {
      "enabled": true,
      "chunkSize": "10MB",
      "backpressure": true
    }
  }
}
```

Query Optimization

Optimize database queries through MCP:

```json
{
  "mcpServers": {
    "postgres-optimized": {
      "command": "mcp-server-postgres",
      "optimization": {
        "queryPlanner": {
          "enabled": true,
          "cacheSize": "100MB",
          "hints": true
        },
        "indexSuggestions": true,
        "slowQueryLog": {
          "threshold": 1000,
          "action": "optimize"
        }
      }
    }
  }
}
```

Network Optimization

Reduce latency and bandwidth usage:

```json
{
  "network": {
    "compression": {
      "enabled": true,
      "algorithm": "brotli",
      "level": 6
    },
    "multiplexing": {
      "enabled": true,
      "protocol": "http2",
      "maxStreams": 100
    },
    "retry": {
      "enabled": true,
      "maxAttempts": 3,
      "backoff": "exponential"
    }
  }
}
```
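For readers unfamiliar with the `"backoff": "exponential"` setting, the sketch below shows what such a retry policy typically does: wait, double the wait after each failure, and give up after `maxAttempts`. The 200 ms base delay, the 5-second cap and both function names are assumptions for illustration.

```javascript
// Delays (in ms) to wait between attempts: base, 2x base, 4x base, ... capped.
function backoffDelays(maxAttempts, baseMs = 200, capMs = 5000) {
  const delays = [];
  for (let attempt = 1; attempt < maxAttempts; attempt++) {
    delays.push(Math.min(capMs, baseMs * 2 ** (attempt - 1)));
  }
  return delays;
}

// Run an async operation, retrying with exponential backoff on failure.
async function withRetry(operation, { maxAttempts = 3, baseMs = 200 } = {}) {
  const delays = backoffDelays(maxAttempts, baseMs);
  for (let attempt = 1; ; attempt++) {
    try {
      return await operation(attempt);
    } catch (error) {
      if (attempt >= maxAttempts) throw error; // out of attempts
      await new Promise((resolve) => setTimeout(resolve, delays[attempt - 1]));
    }
  }
}

// Three attempts means at most two waits.
console.log(backoffDelays(3));
```

Production retry policies usually add random jitter to each delay so that many clients recovering from the same outage do not retry in lockstep.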

Integration with CI/CD Pipelines

MCP can enhance your CI/CD workflows by providing context during automated processes.

GitHub Actions Integration

```yaml
name: MCP-Enhanced Build
on: [push, pull_request]

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
        with:
          fetch-depth: 2  # the default shallow clone has no HEAD~1 to diff against

      - name: Setup MCP
        run: |
          npm install -g @modelcontextprotocol/cli
          mkdir -p .cursor
          echo "${{ secrets.MCP_CONFIG }}" > .cursor/mcp.json

      - name: Run MCP Analysis
        run: |
          mcp analyze --format json > analysis.json

      - name: AI Code Review
        run: |
          mcp review --diff HEAD~1 \
            --model gpt-4o \
            --api-key ${{ secrets.LAOZHANG_API_KEY }} \
            --base-url https://api.laozhang.ai/v1
```

Pre-commit Hooks

Implement MCP-powered pre-commit checks:

```bash
#!/bin/bash
# .git/hooks/pre-commit

# Abort the commit as soon as any check fails
set -e

# Run MCP security check
mcp security-scan --staged --fail-on-high

# Check for API compatibility
mcp api-check --compare-with main

# Validate database migrations
mcp db-validate --migrations staged

# AI-powered commit message suggestion
if [ -z "$SKIP_AI_COMMIT" ]; then
  mcp suggest-commit --staged
fi
```

Monitoring and Analytics

Track MCP usage and performance for continuous improvement.

Usage Analytics Dashboard

```json
{
  "monitoring": {
    "metrics": {
      "enabled": true,
      "export": {
        "type": "prometheus",
        "endpoint": "/metrics",
        "port": 9090
      },
      "custom": [
        {
          "name": "mcp_requests_total",
          "type": "counter",
          "labels": ["server", "method"]
        },
        {
          "name": "mcp_response_time",
          "type": "histogram",
          "buckets": [0.1, 0.5, 1, 2, 5]
        }
      ]
    }
  }
}
```
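The `buckets` list defines the upper bounds of a Prometheus-style histogram, in which each bucket counts every observation at or below its bound. The sketch below shows that bookkeeping for response times in seconds. The class is a stand-alone illustration, not the API of any metrics library.

```javascript
// Minimal histogram with cumulative buckets, as Prometheus exposes them.
class Histogram {
  constructor(buckets) {
    this.buckets = [...buckets].sort((a, b) => a - b);
    this.counts = new Array(this.buckets.length + 1).fill(0); // last slot: +Inf
    this.sum = 0;
    this.count = 0;
  }

  // Record one observation in the first bucket whose bound it does not exceed.
  observe(seconds) {
    let index = this.buckets.findIndex((upper) => seconds <= upper);
    if (index === -1) index = this.buckets.length;
    this.counts[index] += 1;
    this.sum += seconds;
    this.count += 1;
  }

  // Cumulative counts per upper bound ("le" label), ending with +Inf.
  cumulative() {
    let running = 0;
    const labels = [...this.buckets.map(String), "+Inf"];
    return labels.map((le, i) => {
      running += this.counts[i];
      return { le, count: running };
    });
  }
}

const responseTime = new Histogram([0.1, 0.5, 1, 2, 5]);
[0.05, 0.3, 0.4, 1.5, 7].forEach((seconds) => responseTime.observe(seconds));
console.log(responseTime.cumulative());
```

Choose bucket bounds around the latencies you care about: with the bounds above, a 7-second response is only visible in the `+Inf` bucket.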

Cost Tracking

Monitor API usage and costs:

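```javascript
// mcp-cost-tracker.js
const costTracker = {
  threshold: 1.0, // USD per request that triggers a notification (example value)
  db: null,       // supply a store that exposes an async insert(record) method

  async logRequest(server, model, tokens) {
    const cost = this.calculateCost(model, tokens);
    await this.db.insert({
      timestamp: new Date(),
      server,
      model,
      tokens,
      cost,
      user: process.env.USER
    });
    if (cost > this.threshold) {
      await this.notifyHighCost(cost);
    }
  },

  // Rates are in USD per 1,000 tokens.
  calculateCost(model, tokens) {
    const rates = {
      'gpt-4o': { input: 0.0025, output: 0.0075 },
      'gpt-3.5-turbo': { input: 0.0005, output: 0.0015 }
    };
    const rate = rates[model];
    if (!rate) throw new Error(`No rate configured for model: ${model}`);
    return (tokens.input * rate.input + tokens.output * rate.output) / 1000;
  },

  // Replace with a real alert, for example a Slack webhook call.
  async notifyHighCost(cost) {
    console.warn(`High-cost MCP request: $${cost.toFixed(4)}`);
  }
};
```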

Team Collaboration Features

MCP enhances team collaboration through shared context and standardization.

Shared Configuration Management

```json
{
  "teamConfig": {
    "source": "git",
    "repository": "team/mcp-configs",
    "branch": "main",
    "sync": {
      "interval": 3600,
      "merge": "conservative"
    },
    "overrides": {
      "allowed": ["api_keys", "local_paths"],
      "file": ".cursor/mcp.local.json"
    }
  }
}
```

Knowledge Sharing

Create team-wide MCP servers for shared knowledge:

```json
{
  "mcpServers": {
    "team-knowledge": {
      "command": "mcp-server-confluence",
      "env": {
        "CONFLUENCE_URL": "${env:CONFLUENCE_URL}",
        "SPACES": "ARCH,DEV,OPS"
      },
      "features": {
        "architectureDecisions": true,
        "codingStandards": true,
        "troubleshootingGuides": true
      }
    }
  }
}
```

Conclusion and Next Steps

Cursor's Model Context Protocol integration represents more than just a feature—it's a fundamental shift in how we think about AI-assisted development. By breaking down the barriers between your IDE and the broader development ecosystem, MCP transforms Cursor from a smart code editor into a comprehensive development platform that understands your entire project context.

The journey from basic MCP setup to advanced implementation may seem complex, but the benefits are undeniable. Teams report not just faster development, but fundamentally better code quality, improved collaboration, and significant cost savings. The 30-50% reduction in API costs through services like laozhang.ai makes enterprise-grade AI assistance accessible to teams of all sizes.

As you implement MCP in your workflow, remember these key principles:

- Start Simple: Begin with one or two MCP servers most relevant to your daily work

- Prioritize Security: Always follow the principle of least privilege

- Monitor and Optimize: Track usage patterns and costs to continuously improve

- Share Knowledge: Document your MCP configurations for team benefit

- Stay Updated: The MCP ecosystem evolves rapidly—keep learning

The future of development is contextual, connected, and intelligent. With Cursor MCP, you're not just writing code—you're orchestrating an entire development ecosystem that understands your intentions, maintains your standards, and accelerates your productivity.

Take the first step today. Install your first MCP server, configure it with your GitHub repository or database, and experience the transformation firsthand. Don't forget to grab your free trial credits at api.laozhang.ai to maximize your cost savings from day one. The investment in setting up MCP today will compound into massive productivity gains tomorrow.

The age of truly intelligent development assistance has arrived. Are you ready to embrace it?