The landscape of AI API pricing has undergone dramatic shifts in 2025, with Anthropic's Claude leading a revolution in both capability and cost structure. Whether you're a startup founder calculating runway, an enterprise architect designing scalable systems, or a developer exploring AI integration, understanding Claude's pricing model is crucial for making informed decisions. This comprehensive guide dissects every aspect of Claude API pricing, from the latest model costs to advanced optimization strategies that can reduce your expenses by up to 70%.

The recent launch of Claude 4 in May 2025 marked a watershed moment in AI pricing strategy. With Claude Opus 4 setting new benchmarks in coding capabilities (achieving 72.5% on SWE-bench) and Claude Sonnet 4 delivering enterprise-grade performance at a fraction of the cost, the pricing landscape has become both more competitive and more complex. Add to this the introduction of the Max subscription tier, enhanced caching capabilities, and the emergence of cost-effective API gateway solutions, and you have a pricing ecosystem that rewards informed decision-making.

Understanding Claude's Dual Pricing Model

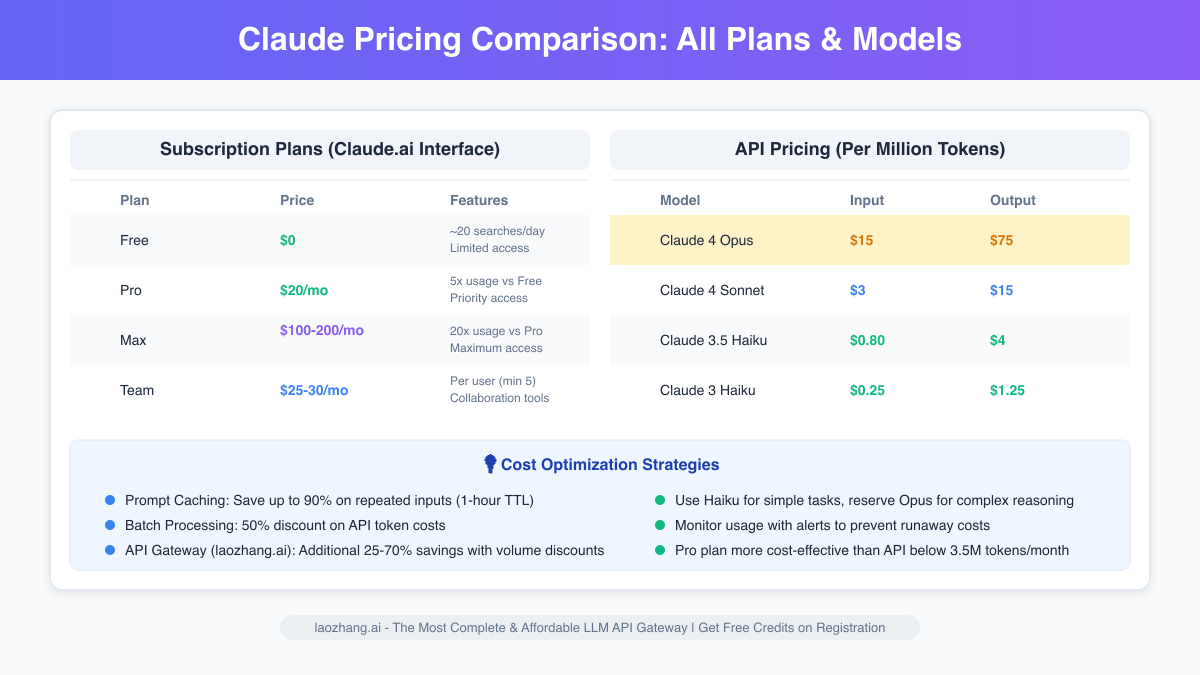

Claude's pricing architecture operates on two distinct but complementary tracks: subscription-based access through the Claude.ai interface and pay-as-you-go API pricing for programmatic integration. This dual approach reflects Anthropic's understanding that different users have fundamentally different needs and usage patterns.

The subscription model caters to individuals and teams who primarily interact with Claude through the web interface. Starting with the free tier offering approximately 20 searches per day, the pricing scales through Pro at $20 per month (or $17 monthly when billed annually), up to the recently introduced Max plans at $100 and $200 per month. Each tier represents a significant jump in usage allowance, with Pro users enjoying 5x the capacity of free users, and Max subscribers accessing up to 20x the Pro tier's limits.

On the API side, pricing follows a token-based model where you pay for what you use. The latest Claude 4 models command premium pricing: Claude Opus 4 at $15 per million input tokens and $75 per million output tokens, while Claude Sonnet 4 offers a more balanced option at $3 per million input tokens and $15 per million output tokens. For budget-conscious applications, Claude 3.5 Haiku provides exceptional value at just $0.80 per million input tokens and $4 per million output tokens.
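To make the token-based model concrete, here is a minimal sketch of a per-request cost calculator. It uses only the rates quoted above; the dictionary keys are illustrative labels rather than official API model identifiers, and the rates should be checked against the current rate card before being relied on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    input_per_mtok: float   # USD per million input tokens
    output_per_mtok: float  # USD per million output tokens


# Rates as quoted in this guide. Keys are illustrative labels (assumption),
# not official model IDs.
PRICES = {
    "claude-opus-4": ModelPrice(15.00, 75.00),
    "claude-sonnet-4": ModelPrice(3.00, 15.00),
    "claude-3.5-haiku": ModelPrice(0.80, 4.00),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the listed per-token rates."""
    price = PRICES[model]
    return (
        input_tokens * price.input_per_mtok
        + output_tokens * price.output_per_mtok
    ) / 1_000_000


# Cost of a single request with 1,000 input tokens and 500 output tokens.
for name in PRICES:
    print(f"{name}: ${request_cost(name, 1_000, 500):.4f} per request")
```

Because output tokens cost five times as much as input tokens on all three models, response length tends to dominate the bill for chat-style workloads.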

The Real Cost of AI: Breaking Down Your Monthly Bill

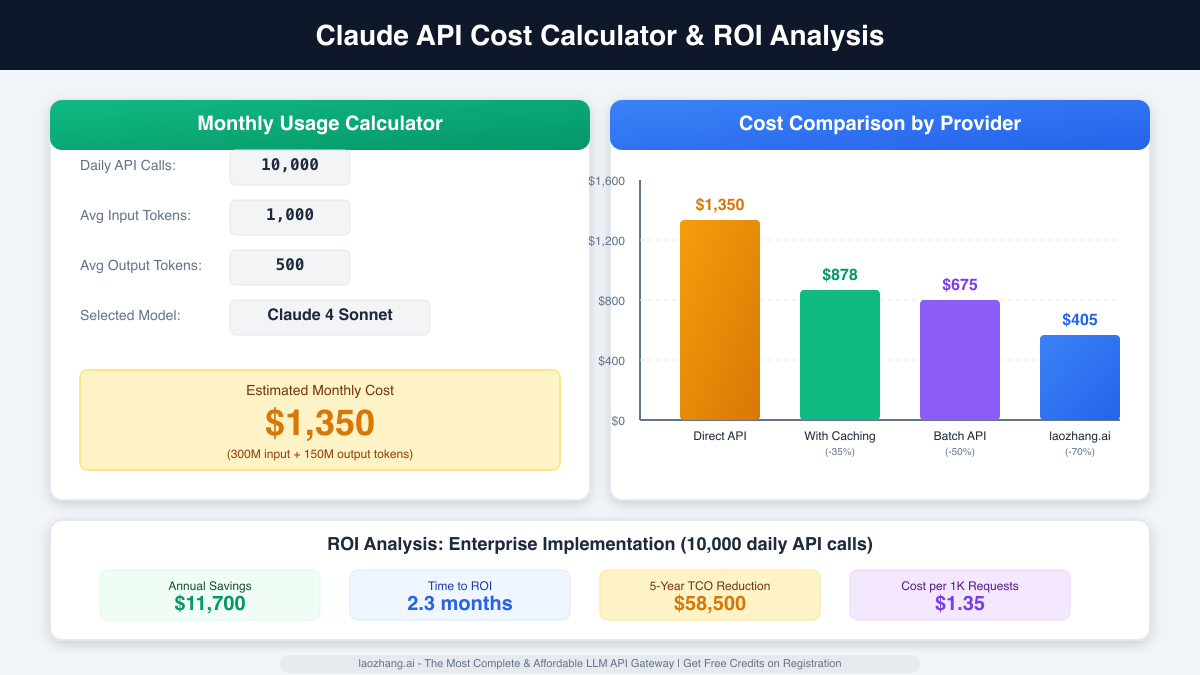

Understanding theoretical pricing is one thing; calculating actual costs for real-world applications is another. Let's examine a typical scenario: a customer service automation system processing 10,000 queries daily, with each interaction averaging 1,000 input tokens and generating 500 output tokens.

Using Claude Sonnet 4, this translates to 300 million input tokens and 150 million output tokens monthly. At standard rates, that is $900 for input (300 × $3) plus $2,250 for output (150 × $15), for a monthly bill of $3,150, a significant investment for many businesses. However, this raw calculation doesn't tell the complete story. Anthropic has introduced several cost-saving mechanisms that can reduce this figure.

Prompt caching, one of the most impactful features, can reduce the cost of cached input tokens by up to 90%. With the extended 1-hour cache time to live (TTL), applications with predictable query patterns can achieve substantial savings. Caching affects only the input side of the bill, however. In our customer service example, if 60% of input tokens are repeated context (product information, policies, FAQs) served from cache at 10% of the standard input rate, input cost falls from $900 to about $414 and the monthly total to approximately $2,664, a reduction of roughly 15% without any compromise in quality or response time. Cache writes carry a surcharge that this estimate ignores, so real savings will be slightly lower.
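As a check on the arithmetic in this scenario, the following sketch recomputes the monthly bill from the Sonnet 4 rates listed earlier, with and without caching. It rests on stated assumptions: a 30-day month, cache reads billed at 10% of the standard input rate, caching applied to input tokens only, and cache-write surcharges ignored. The function and parameter names are illustrative.

```python
INPUT_RATE = 3.00             # USD per million input tokens (Sonnet 4, from this guide)
OUTPUT_RATE = 15.00           # USD per million output tokens
CACHE_READ_MULTIPLIER = 0.10  # assumption: cached reads cost 10% of the input rate


def monthly_cost(
    queries_per_day: int,
    input_tokens: int,
    output_tokens: int,
    cached_input_share: float = 0.0,
    days: int = 30,
) -> float:
    """Estimate the monthly USD bill; cache-write surcharges are not modeled."""
    input_mtok = queries_per_day * input_tokens * days / 1_000_000
    output_mtok = queries_per_day * output_tokens * days / 1_000_000

    fresh_mtok = input_mtok * (1.0 - cached_input_share)
    cached_mtok = input_mtok * cached_input_share

    input_cost = (
        fresh_mtok * INPUT_RATE
        + cached_mtok * INPUT_RATE * CACHE_READ_MULTIPLIER
    )
    return input_cost + output_mtok * OUTPUT_RATE


baseline = monthly_cost(10_000, 1_000, 500)
with_cache = monthly_cost(10_000, 1_000, 500, cached_input_share=0.60)

print(f"Without caching: ${baseline:,.0f}")
print(f"With 60% of input cached: ${with_cache:,.0f}")
print(f"Saving: {1 - with_cache / baseline:.1%}")
```

Since output tokens are untouched by caching, the achievable saving is bounded by the input share of the bill, so workloads with long shared prompts and short answers benefit most.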

Advanced Cost Optimization Strategies

The path to cost-effective AI implementation requires a multi-faceted approach. Beyond the obvious benefits of caching, several strategies can compound to create dramatic cost reductions. Batch processing, available through Anthropic's API, offers a flat 50% discount for non-time-sensitive operations. This makes it ideal for content generation, data analysis, and other tasks where immediate responses aren't critical.
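The effect of the batch discount depends on how much of the workload can tolerate delayed results. A minimal sketch, assuming the flat 50% discount described above and a hypothetical split between real-time and batchable traffic:

```python
BATCH_DISCOUNT = 0.50  # flat discount for batch processing, as stated in this guide


def cost_with_batching(standard_cost_usd: float, batchable_share: float) -> float:
    """Return total cost when a share of the workload moves to batch processing."""
    if not 0.0 <= batchable_share <= 1.0:
        raise ValueError("batchable_share must be between 0 and 1")
    batched = standard_cost_usd * batchable_share * (1.0 - BATCH_DISCOUNT)
    realtime = standard_cost_usd * (1.0 - batchable_share)
    return batched + realtime


# Hypothetical workload: $1,000 per month at standard rates, 40% batchable.
print(f"${cost_with_batching(1_000.0, 0.40):,.2f}")
```

The overall saving is half of the batchable share, so a workload that is 40% batchable saves 20% in total.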

Model selection represents another crucial optimization lever. While Claude Opus 4 delivers unmatched performance for complex reasoning and coding tasks, many applications can achieve excellent results with more economical models. Claude 3.5 Haiku, at approximately 5% of Opus 4's cost, handles straightforward queries, content summarization, and basic analysis tasks admirably. Implementing intelligent routing—where queries are directed to the appropriate model based on complexity—can reduce costs by 60-80% while maintaining user satisfaction.
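The routing idea can be sketched as follows. The keyword list, the word-count threshold, and the traffic split are all hypothetical placeholders; a production router would more likely use a tuned classifier and measured traffic. The prices are the ones quoted in this guide.

```python
# (input, output) USD per million tokens, as quoted in this guide.
PRICES = {
    "opus": (15.00, 75.00),
    "sonnet": (3.00, 15.00),
    "haiku": (0.80, 4.00),
}

# Hypothetical signals that a prompt needs the strongest model.
COMPLEX_KEYWORDS = ("refactor", "debug", "prove", "architecture")


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    in_rate, out_rate = PRICES[model]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


def pick_model(prompt: str) -> str:
    """Toy complexity heuristic; thresholds are illustrative, not tuned."""
    text = prompt.lower()
    if any(keyword in text for keyword in COMPLEX_KEYWORDS):
        return "opus"
    if len(text.split()) > 200:
        return "sonnet"
    return "haiku"


def blended_cost(split: dict[str, float], input_tokens: int = 1_000,
                 output_tokens: int = 500) -> float:
    """Average cost per request for a traffic split whose shares sum to 1."""
    return sum(
        share * request_cost(model, input_tokens, output_tokens)
        for model, share in split.items()
    )


all_opus = blended_cost({"opus": 1.0})
routed = blended_cost({"haiku": 0.60, "sonnet": 0.25, "opus": 0.15})

print(pick_model("Summarize this support ticket"))
print(pick_model("Debug this failing integration test"))
print(f"Saving versus all-Opus: {1 - routed / all_opus:.1%}")
```

Under this particular split the saving lands inside the 60-80% range mentioned above, but the result is sensitive to how much traffic genuinely needs the largest model.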

The emergence of API gateway services has introduced a game-changing option for high-volume users. Services like laozhang.ai aggregate demand across multiple users, securing volume discounts that individual developers couldn't access. By routing your Claude API calls through laozhang.ai's infrastructure, you can achieve immediate cost savings of 25-70% depending on your usage volume. Their pricing model is particularly attractive for startups and growing businesses, offering enterprise-grade reliability at startup-friendly prices. With features like automatic failover, detailed analytics, and support for multiple AI models through a unified API, laozhang.ai transforms API management from a cost center into a strategic advantage.

ROI Analysis: When Claude Pays for Itself

The decision to invest in Claude API access shouldn't be made in isolation—it requires careful consideration of return on investment. Recent enterprise data reveals compelling success stories: 74% of organizations report their AI initiatives meeting or exceeding ROI expectations, with particularly strong results in customer service, content creation, and software development.

Consider a worked example: a software development team using Claude Opus 4 for code review and generation. With developers costing $150,000 annually, a 20% productivity improvement translates to $30,000 in value per developer per year. For a team of 10, that's $300,000 in annual value, or $25,000 per month. Even at premium API rates, a monthly cost of $5,000-$10,000 for extensive Claude usage is covered 2.5 to 5 times over by that value, provided the productivity gain actually materializes.
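The arithmetic behind this example can be reproduced with a short sketch. The inputs are the figures from the paragraph above; the function name and return structure are illustrative, and the 20% productivity gain is an assumption of the scenario rather than a measured result.

```python
def monthly_roi(
    developer_cost_per_year: float,
    productivity_gain: float,
    team_size: int,
    monthly_api_cost: float,
) -> dict[str, float]:
    """Compare the monthly value of a productivity gain with monthly API spend."""
    annual_value = developer_cost_per_year * productivity_gain * team_size
    monthly_value = annual_value / 12
    return {
        "annual_value": annual_value,
        "monthly_value": monthly_value,
        "net_monthly_benefit": monthly_value - monthly_api_cost,
        "value_to_cost_ratio": monthly_value / monthly_api_cost,
    }


for api_cost in (5_000, 10_000):
    result = monthly_roi(150_000, 0.20, 10, api_cost)
    print(
        f"API cost ${api_cost:,}/month: "
        f"value ${result['monthly_value']:,.0f}/month, "
        f"ratio {result['value_to_cost_ratio']:.1f}x"
    )
```

The ratio is most sensitive to the productivity gain: halving it to 10% halves the value, which at $10,000 per month of API spend leaves only a 1.25x return.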

The financial case becomes even stronger when considering indirect benefits. Reduced error rates, faster time-to-market, and improved code quality compound the direct productivity gains. One e-commerce platform reported that implementing Claude-powered customer service reduced support costs by 65% while improving satisfaction scores—a combination that directly impacted both expenses and revenue.

Choosing the Right Plan: A Strategic Framework

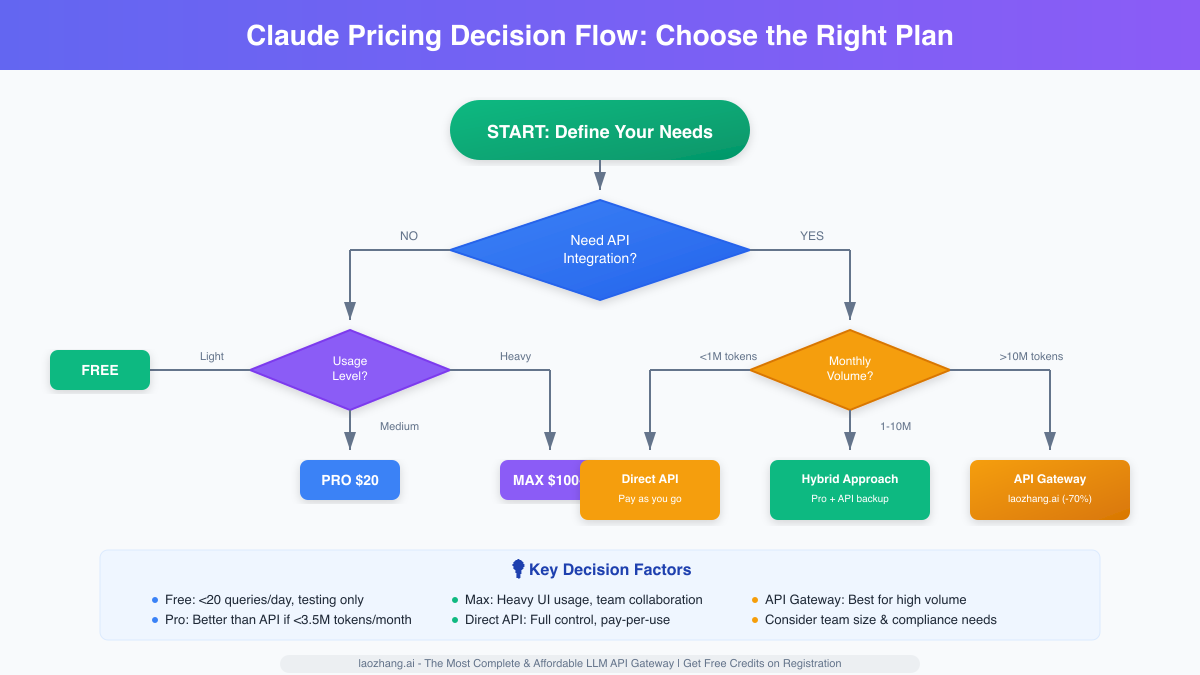

Selecting the optimal Claude access method requires aligning your technical requirements with business constraints. The decision tree starts with a fundamental question: do you need programmatic API access, or will the web interface suffice?

For individuals and small teams primarily using Claude for research, writing, and interactive problem-solving, the subscription tiers offer compelling value. The Pro plan at $20 monthly makes economic sense for anyone who would otherwise spend a comparable amount on API tokens. As a reference point, $17.70 of Claude Sonnet 4 usage covers roughly 3.5 million tokens monthly, assuming about five input tokens for every output token. The new Max plans cater to power users and small teams who need consistent, high-volume access without the complexity of API integration.
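A break-even estimate like this depends heavily on the mix of input and output tokens. The sketch below shows how many Claude Sonnet 4 tokens a given budget buys at the rates quoted earlier; the 5:1 input-to-output ratio is an assumption chosen for illustration, not a figure from Anthropic.

```python
SONNET_INPUT_RATE = 3.00    # USD per million input tokens (from this guide)
SONNET_OUTPUT_RATE = 15.00  # USD per million output tokens


def tokens_for_budget(budget_usd: float, input_share: float) -> float:
    """Return total tokens (input + output) a budget buys at a given input share."""
    if not 0.0 <= input_share <= 1.0:
        raise ValueError("input_share must be between 0 and 1")
    blended_rate = (
        input_share * SONNET_INPUT_RATE
        + (1.0 - input_share) * SONNET_OUTPUT_RATE
    )  # USD per million tokens at this mix
    return budget_usd / blended_rate * 1_000_000


five_to_one = 5 / 6  # assumed mix: five input tokens per output token
for budget in (17.70, 20.00):
    tokens = tokens_for_budget(budget, five_to_one)
    print(f"${budget:.2f} buys about {tokens / 1e6:.2f}M tokens")
```

At an output-heavy mix the same budget buys far fewer tokens, so users who generate long responses reach the subscription break-even point sooner.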

API access becomes essential when you need to integrate Claude into applications, automate workflows, or process data at scale. Here, the calculus shifts from simple cost comparison to total cost of ownership (TCO). Direct API access provides maximum flexibility and control but requires handling rate limits, error recovery, and usage monitoring. For many organizations, especially those processing over 10 million tokens monthly, API gateway services offer an attractive middle ground—combining the flexibility of API access with simplified management and significant cost savings.

The Hidden Costs: What Vendors Don't Tell You

While published pricing provides the foundation for cost calculations, several hidden factors can significantly impact your total spend. Rate limits, often overlooked in initial planning, can force architectural decisions that increase complexity and cost. Direct API access starts with restrictive limits that can throttle growth, requiring investment in queue management and request distribution systems.

Development time represents another hidden cost. Implementing robust error handling, retry logic, and monitoring for direct API integration can consume weeks of developer time. At typical developer rates, this easily adds $10,000-$20,000 to your initial implementation cost. API gateway services like laozhang.ai include these features by default, effectively subsidizing your development costs through their infrastructure investment.

Compliance and security considerations add another layer of complexity. Enterprise users must ensure their AI usage meets regulatory requirements, necessitating audit trails, data residency controls, and security certifications. While Anthropic provides enterprise-grade security, implementing proper controls and monitoring can require significant additional investment.

Future-Proofing Your AI Investment

The rapid evolution of AI capabilities and pricing models demands a flexible approach to platform selection. Anthropic's recent 25% price reduction for Claude 3.5 Sonnet and the introduction of new model variants signal a trend toward more competitive pricing and specialized options. Building your implementation with switching costs in mind ensures you can adapt as the market evolves.

Abstraction layers prove invaluable for maintaining flexibility. Rather than hard-coding Claude-specific implementations, wrapping API calls in a service layer allows seamless switching between models or providers. This approach also enables A/B testing different models for various use cases, optimizing both performance and cost continuously.
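One way to structure such a service layer is sketched below. Every name here (`TextModel`, `StubModel`, `ModelService`, the use-case labels) is hypothetical, and the backends are stubs; a real backend would wrap a provider SDK call inside `complete`.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface that application code depends on."""

    name: str

    def complete(self, prompt: str) -> str: ...


class StubModel:
    """Stand-in backend; a real one would call a provider SDK here."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelService:
    """Routes each use case to a backend so callers never name a vendor."""

    def __init__(self, default: TextModel) -> None:
        self._default = default
        self._routes: dict[str, TextModel] = {}

    def route(self, use_case: str, backend: TextModel) -> None:
        # Swapping a model or provider is a one-line change made here.
        self._routes[use_case] = backend

    def complete(self, prompt: str, use_case: str = "default") -> str:
        backend = self._routes.get(use_case, self._default)
        return backend.complete(prompt)


service = ModelService(default=StubModel("haiku-stub"))
service.route("code", StubModel("opus-stub"))

print(service.complete("Summarize this ticket"))
print(service.complete("Review this diff", use_case="code"))
```

Because callers depend only on the use-case label, A/B testing a different model for one use case means registering a different backend rather than touching application code.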

Multi-model strategies are becoming increasingly common among sophisticated users. By leveraging Claude for complex reasoning and coding tasks while routing simpler queries to more economical alternatives, organizations can optimize their AI spend without compromising capabilities. API gateways that support multiple providers through a unified interface make this approach practical, eliminating the complexity of managing multiple vendor relationships.

Implementation Best Practices from the Field

Success with Claude API implementation often comes down to execution details that separate efficient operations from costly mistakes. Request batching, though conceptually simple, requires a careful balance: overly aggressive batching increases latency, while overly conservative batching leaves money on the table. The sweet spot typically involves batching similar requests within 100-200ms windows, achieving cost benefits without perceptible delays.

Implementing circuit breakers prevents cascade failures and unexpected costs. When API errors occur, exponential backoff with jitter prevents thundering herd problems while maintaining system responsiveness. More importantly, setting hard limits on daily API spend prevents runaway costs from bugs or unexpected usage spikes. Many teams have learned this lesson expensively—one misplaced loop can generate thousands of dollars in API charges within hours.
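Two of the safeguards described above, exponential backoff with jitter and a hard daily spend limit, can be sketched as follows. This is not a full circuit breaker, and the names `TransientAPIError`, `SpendGuard`, and `call_with_backoff` are hypothetical; a real integration would catch the retryable exceptions of whichever client library is in use and reset the spend counter daily.

```python
import random
import time


class TransientAPIError(Exception):
    """Hypothetical stand-in for a retryable error (rate limit, overload, timeout)."""


class BudgetExceeded(RuntimeError):
    """Raised when a request would push spend past the daily cap."""


class SpendGuard:
    """Tracks estimated spend and refuses work beyond a hard limit."""

    def __init__(self, daily_limit_usd: float) -> None:
        self.daily_limit_usd = daily_limit_usd
        self.spent_usd = 0.0  # a real system would reset this once per day

    def reserve(self, estimated_cost_usd: float) -> None:
        if self.spent_usd + estimated_cost_usd > self.daily_limit_usd:
            raise BudgetExceeded(
                f"daily limit of ${self.daily_limit_usd:.2f} would be exceeded"
            )
        self.spent_usd += estimated_cost_usd


def call_with_backoff(fn, max_attempts=5, base_delay=0.5, max_delay=30.0,
                      sleep=time.sleep):
    """Retry fn on transient errors, sleeping a random time up to an exponential cap."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientAPIError:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the error to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0.0, cap))  # "full jitter" spreads out retries


# Demo: a call that fails twice, then succeeds. Sleeping is stubbed out.
calls = {"count": 0}


def flaky_request() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientAPIError("overloaded")
    return "ok"


guard = SpendGuard(daily_limit_usd=50.0)
guard.reserve(0.0105)  # estimated cost of the request, checked before sending
print(call_with_backoff(flaky_request, sleep=lambda seconds: None))
```

Reserving the estimated cost before the call, rather than recording it afterwards, is what stops a runaway loop: once the cap is reached, further requests fail fast instead of being sent.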

Monitoring and alerting deserve special attention. Beyond simple usage tracking, sophisticated implementations monitor cost per transaction, model performance metrics, and usage patterns. Anomaly detection can identify optimization opportunities and potential issues before they impact your budget. Tools that visualize API usage patterns often reveal surprising insights, such as discovering that 30% of API calls could be served from cache or that certain query types are consistently routed to suboptimal models.
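As a minimal illustration of cost monitoring, the sketch below computes cost per transaction and flags a day whose spend sits far above the recent average. The z-score rule and the threshold of 3 are assumptions chosen for simplicity, and the spend figures are made up; production systems often use more robust methods.

```python
from statistics import mean, stdev


def cost_per_transaction(total_cost_usd: float, transactions: int) -> float:
    """Average spend per completed transaction."""
    if transactions <= 0:
        raise ValueError("transactions must be positive")
    return total_cost_usd / transactions


def is_cost_anomaly(history: list[float], today: float,
                    z_threshold: float = 3.0) -> bool:
    """Flag today's spend if it exceeds the trailing mean by z_threshold deviations."""
    if len(history) < 2:
        return False  # not enough data to estimate variability
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu
    return (today - mu) / sigma > z_threshold


# Hypothetical daily spend in USD over the previous five days.
recent_spend = [100.0, 102.0, 98.0, 101.0, 99.0]

print(is_cost_anomaly(recent_spend, 103.0))
print(is_cost_anomaly(recent_spend, 140.0))
print(f"${cost_per_transaction(105.0, 10_000):.4f} per transaction")
```

A check like this is cheap to run on every billing export and pairs naturally with a hard spend cap: the cap limits the damage, while the alert explains what happened.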

The Competitive Landscape: Claude in Context

While this guide focuses on Claude pricing, understanding the competitive landscape helps contextualize your investment. OpenAI's GPT-4 family, Google's Gemini, and open-source alternatives each offer different price-performance trade-offs. Claude's premium pricing reflects its superior performance in specific domains—particularly coding, complex reasoning, and maintaining context over long conversations.

The market dynamics favor API aggregators and gateways that provide access to multiple models. Services like laozhang.ai capitalize on this trend, offering unified access to Claude, GPT-4, Gemini, and other models through a single API. This approach not only simplifies integration but enables dynamic model selection based on query characteristics, optimizing both cost and performance automatically.

Enterprise adoption patterns reveal interesting insights. While OpenAI maintains market share leadership, Anthropic has doubled its enterprise presence from 12% to 24% in the past year. The primary drivers? Superior safety features, consistent performance, and transparent pricing. For organizations where reliability and predictability matter more than rock-bottom pricing, Claude's value proposition resonates strongly.

Conclusion: Maximizing Value in the AI Era

Mastering Claude API pricing requires more than understanding rate cards—it demands a strategic approach that aligns technical capabilities with business objectives. The combination of subscription tiers and API pricing provides flexibility for organizations at every stage, from individual developers experimenting with AI to enterprises deploying mission-critical applications.

The key to success lies in continuous optimization. Start with clear usage projections, implement comprehensive monitoring, and remain flexible as your needs evolve. Whether you choose direct API access for maximum control or leverage gateway services like laozhang.ai for simplified management and cost savings, the goal remains constant: extracting maximum value from your AI investment.

As we look toward the remainder of 2025 and beyond, the trend toward more accessible, cost-effective AI seems certain to continue. Early adopters who build flexible, efficient implementations today position themselves to capitalize on future improvements in both capability and pricing. The question isn't whether to invest in AI capabilities like Claude, but how to do so intelligently, efficiently, and with clear sight of the return on investment.

For those ready to take the next step, whether through direct API integration or cost-optimized gateway services, the path forward is clear. Register with laozhang.ai (https://api.laozhang.ai/register/?aff_code=JnIT) to receive free credits and experience how intelligent API management can transform your AI costs from a concern into a competitive advantage. In the rapidly evolving world of AI, the best time to optimize your approach is now.