The dreaded message appears just as you're making progress: "You've reached your file upload limit." For millions of ChatGPT users, this frustrating notification has become an unwelcome daily companion, disrupting workflows and crushing productivity at the worst possible moments. What once felt like unlimited AI-powered image analysis has transformed into a carefully rationed resource, forcing users to fundamentally rethink how they interact with one of the world's most powerful vision models.

The evolution of ChatGPT's image upload restrictions tells a larger story about the challenges of scaling AI services. When GPT-4 with vision capabilities launched in late 2023, users marveled at its ability to understand and analyze images with human-like comprehension. Screenshots could be debugged, handwritten notes transcribed, complex diagrams explained, and creative projects critiqued—all within seconds. The possibilities seemed endless, and for a brief moment, they were.

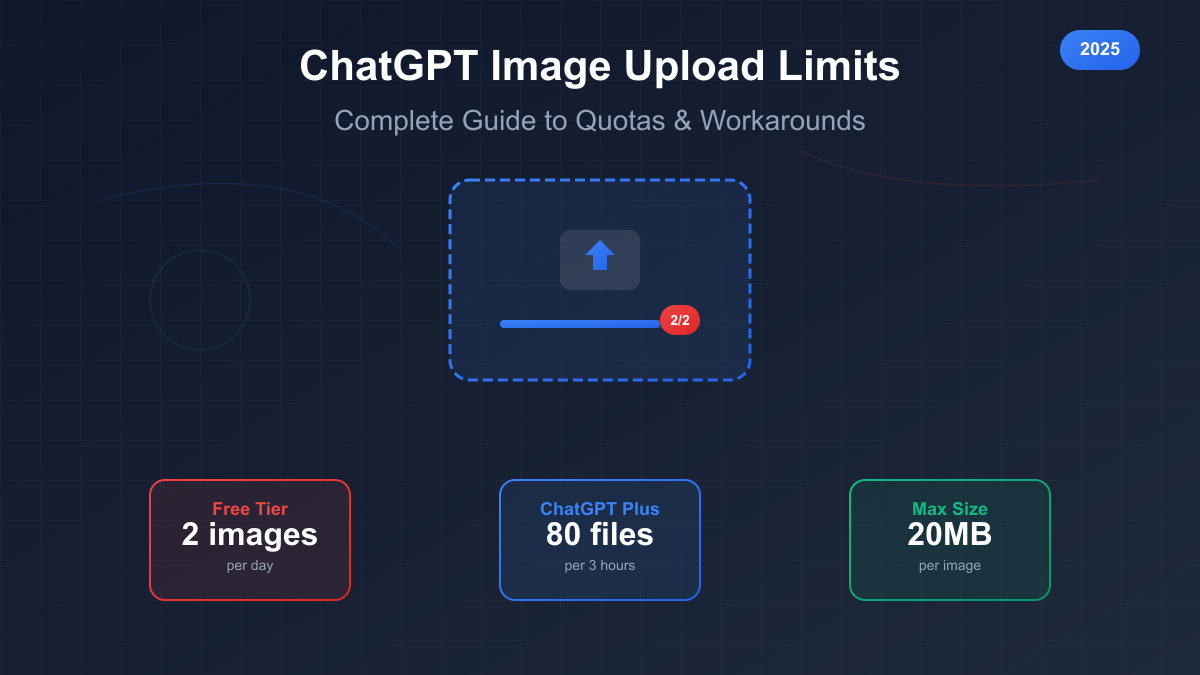

Fast forward to 2025, and the landscape has dramatically shifted. Free tier users, who once enjoyed 3-5 daily image uploads, now face a mere 2 images per day, a cut of up to 60% that has rendered the service nearly unusable for any serious work. Even paying ChatGPT Plus subscribers, despite their $20 monthly investment, encounter the byzantine "80 files per 3-hour rolling window" system that seems designed more to confuse than clarify. The promise of AI-augmented visual understanding has collided with the harsh realities of computational costs and infrastructure limitations.

This comprehensive guide cuts through the confusion to deliver exactly what you need: clear explanations of current limits across all tiers, practical solutions to common errors, and proven strategies to maximize your available quota. Whether you're a frustrated free user seeking alternatives, a Plus subscriber trying to optimize your workflow, or a developer considering API integration, you'll find actionable insights tailored to your specific needs. We'll explore not just the what, but the why behind these limitations, empowering you to make informed decisions about your AI tool stack.

Current ChatGPT Image Upload Limits Explained

Understanding ChatGPT's current image upload limitations requires navigating a complex web of restrictions that vary dramatically across subscription tiers. The system has evolved from relatively generous allowances to increasingly stringent quotas, reflecting both technical constraints and business imperatives. Let's break down exactly what each tier offers in 2025, cutting through marketing language to reveal the practical realities users face daily.

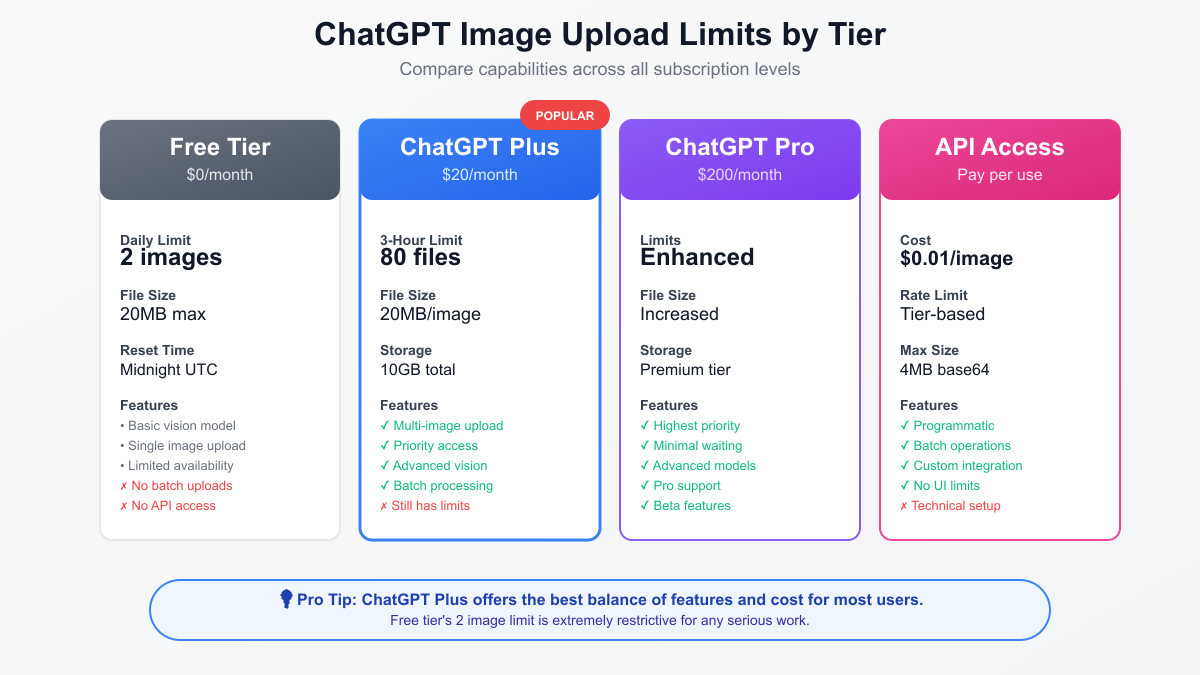

Free tier users bear the brunt of recent restrictions, with access slashed to a mere 2 images per day. This dramatic reduction from the previous 3-5 image allowance represents more than just a numerical decrease—it fundamentally alters the service's utility. The reset occurs at midnight UTC, creating additional confusion for users in different time zones who must calculate when their meager allowance refreshes. These 2 images must cover all use cases: from analyzing screenshots to getting help with homework, leaving no room for experimentation or iterative improvements.
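For readers outside UTC, the reset time can be worked out mechanically. The sketch below assumes the midnight UTC reset described above (a figure from this guide, not an officially documented one); the function name `next_utc_midnight_reset` is made up for illustration.

```python
from datetime import datetime, timedelta, timezone, tzinfo


def next_utc_midnight_reset(now: datetime, local_tz: tzinfo) -> datetime:
    """Return the next midnight-UTC reset, expressed in the given local zone.

    `now` must be timezone-aware. The midnight-UTC reset is an assumption
    taken from the text above.
    """
    now_utc = now.astimezone(timezone.utc)
    # Midnight UTC at the start of the next UTC day.
    next_midnight = (now_utc + timedelta(days=1)).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    return next_midnight.astimezone(local_tz)


if __name__ == "__main__":
    # Example: a user at UTC-5 checking at 3:30 PM local time.
    utc_minus_5 = timezone(timedelta(hours=-5))
    now = datetime(2025, 3, 10, 15, 30, tzinfo=utc_minus_5)
    print(next_utc_midnight_reset(now, utc_minus_5).isoformat())
```

A fixed offset is used here to keep the example dependency-free; a named zone from the standard `zoneinfo` module would also handle daylight saving shifts.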

ChatGPT Plus subscribers, paying $20 monthly, encounter a more complex but generous system: 80 files per 3-hour rolling window. However, this headline number obscures important nuances. The "80 files" encompasses all uploads—not just images but also text documents, PDFs, and other file types. More critically, this operates on a rolling window system that continuously tracks your usage over the past three hours, rather than providing a clean reset every three hours. This means uploading 80 images at once leaves you completely locked out for the next three hours, forcing users to carefully distribute their usage throughout the day.

The storage dimension adds another layer of complexity. Plus users receive 10GB of personal storage, while organizational accounts can access up to 100GB. Every uploaded image counts against this quota, and once filled, users must delete old files before uploading new ones. The system provides no easy way to bulk manage these files, turning storage management into a tedious manual process that further erodes productivity.

ChatGPT Pro, at a steep $200 monthly, promises "enhanced limits" without specifying exact numbers—a deliberate opacity that frustrates potential subscribers evaluating the 10x price jump. User reports suggest substantially higher quotas, possibly 200-300 files per rolling window, with priority processing and minimal wait times. However, the lack of transparent documentation makes it impossible to determine whether Pro tier justifies its premium pricing for image-heavy workflows.

Technical specifications apply uniformly across all tiers: individual images cannot exceed 20MB, though the practical limit often sits lower due to processing timeouts. Supported formats include PNG, JPEG, GIF, and WebP for images, with PDF support for document analysis. Images up to 2048x2048 pixels are analyzed as uploaded; larger images are still accepted but undergo automatic compression that can affect analysis quality. These technical constraints, while reasonable for typical use cases, create friction for professionals working with high-resolution images or specialized formats.

The real impact of these limitations becomes clear when examining typical daily workflows. A marketing professional reviewing campaign materials might exhaust their Plus quota within the first hour of work. A student analyzing lecture slides for a single course could blow through their free tier allowance before finishing the first chapter. These aren't edge cases—they represent the fundamental mismatch between user needs and current restrictions.

Understanding the Rolling Window System

The rolling window system employed by ChatGPT Plus represents one of the most misunderstood aspects of the platform's image upload limitations. Unlike traditional quota systems that provide clear reset points, the rolling window creates a dynamic, continuously shifting availability that many users find counterintuitive. This sophisticated approach serves OpenAI's infrastructure management needs while creating significant user experience challenges.

At its core, the rolling window tracks every file upload with a precise timestamp, then continuously evaluates how many uploads occurred within the past 180 minutes. When you attempt a new upload, the system counts all uploads from the last three hours—if that number is less than 80, your upload proceeds. If not, you must wait until the oldest uploads "age out" of the three-hour window. This creates a fluid situation where availability doesn't arrive all at once but trickles in as individual uploads expire.
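The mechanism described above can be modeled on the client side in a few lines. This is a minimal sketch of a generic rolling-window counter, not OpenAI's actual implementation; the class name `RollingWindowQuota` and its methods are hypothetical, and the 80-upload and 3-hour values are the figures quoted in this guide.

```python
from collections import deque
from datetime import datetime, timedelta


class RollingWindowQuota:
    """Client-side model of an N-uploads-per-rolling-window limit.

    Timestamps are expected in non-decreasing order.
    """

    def __init__(self, limit=80, window=timedelta(hours=3)):
        self.limit = limit
        self.window = window
        self._uploads = deque()  # upload timestamps, oldest first

    def _expire(self, now):
        # Drop uploads that have aged out of the window.
        while self._uploads and now - self._uploads[0] >= self.window:
            self._uploads.popleft()

    def remaining(self, now):
        """Number of uploads still allowed at time `now`."""
        self._expire(now)
        return self.limit - len(self._uploads)

    def try_upload(self, now):
        """Record an upload if a slot is free; return whether it was allowed."""
        if self.remaining(now) <= 0:
            return False
        self._uploads.append(now)
        return True


if __name__ == "__main__":
    quota = RollingWindowQuota()
    start = datetime(2025, 1, 1, 9, 0)
    for _ in range(80):
        quota.try_upload(start)
    print(quota.try_upload(start + timedelta(hours=1)))  # window still full
    print(quota.try_upload(start + timedelta(hours=3)))  # oldest uploads expired
```

Each upload carries its own expiry, which is why capacity returns gradually when uploads are spread out and all at once when they were made in a single burst.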

Consider a practical example that illustrates the system's complexity. A user uploads 30 images at 9:00 AM while preparing a presentation, then needs to analyze 30 more screenshots at 10:00 AM for debugging work. After uploading these, they've used 60 of their 80 slots. When an urgent request arrives at 11:00 AM requiring 25 image analyses, they face a partial lockout: only 20 slots remain available. The 9:00 AM batch does not age out until 12:00 PM, when its 30 slots return together, so the last 5 images must wait an hour and the workflow is disrupted.
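The arithmetic in this example can be checked with a short script. It is a sketch under the guide's stated figures (80 uploads, 3-hour window); the helper names `slots_free` and `earliest_time_for` are invented for illustration.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(hours=3)  # window length quoted in this guide
LIMIT = 80                   # Plus-tier figure quoted in this guide


def slots_free(batches, now):
    """batches: list of (upload_time, count). Return free slots at `now`."""
    used = sum(n for t, n in batches if t <= now < t + WINDOW)
    return LIMIT - used


def earliest_time_for(batches, needed, now):
    """Earliest time at or after `now` when `needed` slots are free."""
    if needed > LIMIT:
        raise ValueError("needed exceeds the window limit")
    # Availability only changes when a batch expires, so test those instants.
    candidates = [now] + sorted(t + WINDOW for t, _ in batches if t + WINDOW > now)
    for when in candidates:
        if slots_free(batches, when) >= needed:
            return when
    raise RuntimeError("unreachable: all batches eventually expire")


if __name__ == "__main__":
    day = datetime(2025, 1, 1)
    batches = [(day.replace(hour=9), 30), (day.replace(hour=10), 30)]
    at_eleven = day.replace(hour=11)
    print(slots_free(batches, at_eleven))             # free slots at 11:00 AM
    print(earliest_time_for(batches, 25, at_eleven))  # when 25 slots are free
```

Run against the scenario above, the script reports 20 free slots at 11:00 AM and 12:00 PM as the earliest moment all 25 uploads fit.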

The psychological impact of this system cannot be overstated. Users accustomed to daily or hourly resets from other services expect similar behavior from ChatGPT. Instead, they encounter a system where availability constantly shifts, making it impossible to plan around specific reset times. This uncertainty breeds frustration and encourages users to hoard their uploads, using them more sparingly than necessary out of fear of hitting limits at critical moments.

The rolling window also interacts poorly with typical work patterns. Most users concentrate their ChatGPT usage during specific periods—morning planning sessions, afternoon analysis work, or evening creative projects. The rolling window punishes this natural clustering, forcing users to artificially distribute their usage across time periods that may not align with their actual needs. A graphic designer who typically reviews all client feedback in a morning session must now spread this work throughout the day, fragmenting their focus and reducing efficiency.

Technical implications compound user frustration. The system counts failed uploads against your quota, meaning network errors or format issues can consume precious slots without providing value. There's no grace period or retry mechanism—once an upload is attempted, successful or not, it counts against your rolling window. This harsh approach particularly impacts users with unreliable internet connections or those working with varied file formats that may occasionally fail validation.

The contrast with competitor approaches highlights the rolling window's user-hostile nature. Google Gemini provides clear per-conversation limits that reset with each new chat. Claude.ai offers per-message quotas that users can easily track and plan around. Even services with daily limits provide predictable reset times that users can work with. ChatGPT's rolling window stands alone in its complexity and opacity, raising questions about whether the infrastructure benefits justify the significant user experience costs.

Complete Tier Comparison Guide

The landscape of ChatGPT subscription tiers reveals a carefully crafted progression designed to push users up the pricing ladder while maintaining the illusion of choice. Each tier targets specific user segments with restrictions calibrated to create just enough friction to encourage upgrades without driving users to competitors entirely. Understanding these tiers in detail enables informed decisions about which level—if any—aligns with your actual needs versus marketing promises.

Free tier access in 2025 represents a shadow of its former self, reduced to a token offering that barely demonstrates the platform's capabilities. With just 2 images per day and a midnight UTC reset, free users essentially receive a demo rather than a functional service. The psychological anchoring here is deliberate—users experience just enough value to understand what they're missing, while the severe limitations make any serious work impossible. For students, researchers, or professionals evaluating ChatGPT's vision capabilities, the free tier provides insufficient access for meaningful testing.

ChatGPT Plus occupies the sweet spot of OpenAI's pricing strategy, offering enough capability to enable real work while maintaining sufficient limitations to preserve Pro tier appeal. The 80 files per 3-hour window sounds generous until you realize this encompasses all file types, not just images. Heavy users report exhausting their quota within the first hour of focused work. The $20 monthly price point matches competitors like Claude.ai but delivers arguably less value given the complex restrictions. Plus subscribers also gain priority access during high-traffic periods, though this advantage diminishes as more users upgrade to escape free tier limitations.

The mysterious ChatGPT Pro tier at $200 monthly exists in a realm of deliberate ambiguity. OpenAI provides no concrete specifications, referring only to "enhanced limits" and "minimal waiting." User reports suggest substantially higher quotas, with estimates ranging from roughly 200-300 files per window to as much as 10x the Plus allowance, but without official documentation, potential subscribers cannot make informed decisions. This opacity serves OpenAI's interests, allowing them to adjust Pro tier benefits without public commitment while maintaining pricing flexibility. Pro tier likely makes sense only for enterprise users with OpenAI relationships that clarify actual benefits.

API access introduces an entirely different model: pay-per-use pricing at approximately $0.01 per image analysis. This transparent approach appeals to developers and businesses requiring predictable costs and programmatic access. However, the API involves technical implementation requirements and lacks ChatGPT's conversational interface. Rate limits vary by tier, starting at restrictive levels for new accounts and scaling with usage history and payment reliability. For high-volume users, API costs can quickly exceed subscription prices, making careful calculation essential.

The hidden dimensions of tier comparison extend beyond simple quotas. Response latency varies significantly, with free tier users experiencing 30-second delays during peak times while Pro subscribers enjoy near-instantaneous processing. Model quality may also differ—though OpenAI doesn't acknowledge this, users report more detailed and accurate image analyses on higher tiers. Storage management complexity increases with tier, as higher quotas mean more uploaded files to track and manage. These subtle differences compound the visible restrictions, creating a more significant gap between tiers than marketing materials suggest.

When evaluating tiers, consider total cost of ownership beyond subscription fees. Plus tier's limitations often force users to maintain secondary accounts or supplement with competitor services, effectively doubling costs. Time lost to managing quotas, waiting for availability, and working around restrictions represents a hidden expense that can exceed monetary costs. For many users, a combination approach—Plus subscription for general use, API for high-volume needs, and competitor services for overflow—provides the most practical solution despite its complexity.

Common Error Messages and Solutions

Encountering error messages while trying to upload images to ChatGPT has become a daily frustration for millions of users. These errors range from clear quota notifications to cryptic failures that consume upload slots without providing value. Understanding each error type, its underlying cause, and practical solutions can mean the difference between maintained productivity and hours of wasted effort.

"You've reached your file upload limit" stands as the most common and frustrating error, appearing without warning just when you need to analyze another image. This message indicates you've exhausted your quota for the current period—daily for free users, within the rolling 3-hour window for Plus subscribers. The error provides no helpful information about when uploads will become available again, forcing users to guess or maintain external tracking. For free users, the solution is straightforward but painful: wait until midnight UTC. Plus subscribers face a more complex calculation, needing to determine when their oldest uploads exit the 3-hour window.

Upload failures present a particularly insidious problem, as they consume quota slots despite providing no value. The generic "Upload failed - Please try again" message masks various underlying issues: network timeouts, server overload, format validation failures, or file corruption. Each attempted upload, successful or not, counts against your limit. This harsh reality means a poor internet connection or busy server can waste your entire daily allocation. Solutions include ensuring stable connectivity, pre-validating file formats, and timing uploads during off-peak hours (typically 2-6 AM Pacific Time).

File size errors occur when images exceed the 20MB limit, though practical limitations often kick in earlier. Large files may time out during upload even if technically within limits, resulting in the dreaded failed upload that still consumes quota. The error message "File size exceeds maximum limit" at least provides clear guidance, unlike timeout failures that leave users guessing. Solutions involve pre-processing images using tools like TinyPNG or Squoosh to reduce file size while maintaining visual quality. Aim for under 10MB to ensure reliable uploads.

Format-related errors arise from ChatGPT's specific requirements, which aren't always clearly communicated. While the platform claims support for common formats (PNG, JPEG, GIF, WebP), certain encoding variations or metadata structures can trigger rejections. The "Unsupported file format" error might appear even for seemingly standard images. Solutions include re-saving images in standard formats, stripping metadata using tools like ImageOptim, and avoiding less common formats like HEIC or RAW files. When in doubt, convert to JPEG with standard encoding.
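Since a rejected upload may still cost a slot, a local pre-check is cheap insurance. The sketch below uses only the standard library: it checks the file size against the 20MB figure quoted in this guide and identifies the format from the file's leading magic bytes rather than trusting the extension. The function names are hypothetical, and passing this check does not guarantee that ChatGPT will accept the file.

```python
from pathlib import Path

MAX_BYTES = 20 * 1024 * 1024  # 20MB limit quoted in this guide


def sniff_image_format(header: bytes):
    """Return 'png', 'jpeg', 'gif', 'webp', or None based on magic bytes."""
    if header.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    if header.startswith(b"\xff\xd8\xff"):
        return "jpeg"
    if header[:6] in (b"GIF87a", b"GIF89a"):
        return "gif"
    if header[:4] == b"RIFF" and header[8:12] == b"WEBP":
        return "webp"
    return None  # e.g. HEIC, RAW, or a mislabeled file


def precheck(path):
    """Return a list of problems found; an empty list means the file looks OK."""
    p = Path(path)
    problems = []
    size = p.stat().st_size
    if size > MAX_BYTES:
        problems.append(f"file is {size} bytes, over the {MAX_BYTES}-byte limit")
    with p.open("rb") as f:
        header = f.read(12)
    if sniff_image_format(header) is None:
        problems.append("not recognized as PNG, JPEG, GIF, or WebP")
    return problems


if __name__ == "__main__":
    import sys

    for name in sys.argv[1:]:
        issues = precheck(name)
        print(name, "OK" if not issues else "; ".join(issues))
```

A file with a `.jpg` extension that is really HEIC (common with phone photos) fails this check, which is exactly the case the extension alone would hide.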

The particularly frustrating "Something went wrong" represents ChatGPT's catch-all error for various backend issues. This vague message might indicate server overload, account-specific problems, or temporary system glitches. Users report this error appearing more frequently during peak usage times and often resolving spontaneously. Solutions include clearing browser cache and cookies, trying different browsers or incognito mode, and if persistent, contacting support with specific timestamp information. Some users find success by starting fresh conversations rather than continuing long threads.

Network-related errors manifest in various ways, from explicit timeout messages to silent failures where uploads appear to complete but images never process. These issues particularly affect users with slower connections or those uploading multiple large files. The platform's lack of upload progress indicators exacerbates the problem, leaving users unsure whether to wait or retry. Solutions include using wired connections when possible, uploading during low-traffic periods, and breaking multiple images into separate uploads rather than attempting batch processing.

Why ChatGPT Has These Strict Limits

Understanding the reasoning behind ChatGPT's increasingly restrictive image upload limits requires examining the intersection of technical constraints, business economics, and strategic positioning. While users experience these limitations as arbitrary frustrations, they stem from complex realities that shape how AI services operate at scale. Peeling back these layers reveals why simple solutions remain elusive and why limits will likely persist or even tighten.

The computational cost of image analysis dwarfs text processing by orders of magnitude. When you upload an image to ChatGPT, the system doesn't simply "look" at it—it performs complex multi-stage processing. First, the image undergoes preprocessing: format validation, malware scanning, and size optimization. Next, the vision model (GPT-4V) processes the image through multiple neural network layers, extracting features, identifying objects, understanding relationships, and preparing comprehensive internal representations. This process requires 10-50 times more computational resources than analyzing equivalent text.

Infrastructure costs grow steeply with scale. OpenAI serves millions of users globally, each potentially uploading multiple images daily. The GPU clusters required for vision processing represent massive capital investments, with individual units costing tens of thousands of dollars. Unlike text processing, which can leverage various optimization techniques, each image adds a large block of visual input that the model must process in full before it can answer. Energy consumption alone for image processing creates substantial operational costs, with data centers consuming megawatts of power continuously.

The business model mathematics reveal why generous limits proved unsustainable. Early adoption phases often feature generous allowances to drive user growth and platform adoption. However, as usage scales, the cost per free user can exceed $10 monthly just for image processing, an unsustainable loss leader even for well-funded companies. The reduction from as many as 5 images to 2 for free users likely reflects crossing a critical threshold where infrastructure costs threatened overall service viability.

Abuse prevention adds another dimension to limit calculations. Without restrictions, malicious actors could overwhelm the system with automated uploads, either for denial-of-service attacks or to extract maximum value without payment. Image processing's resource intensity makes it an attractive target for exploitation. Rate limits serve as a crucial defense mechanism, ensuring fair access for legitimate users while preventing system gaming. The rolling window system, while user-unfriendly, effectively prevents burst abuse patterns that simpler daily limits might allow.

Competitive dynamics influence limit decisions in complex ways. While tighter restrictions risk driving users to competitors, they also signal market maturity and push toward sustainable business models. OpenAI likely calculates that their technical advantages and ecosystem lock-in offset user frustration from limits. Additionally, as competitors face similar scaling challenges, industry-wide restrictions may emerge, reducing the competitive disadvantage of any single platform's limits.

The fundamental tension between accessibility and sustainability drives these limitations. OpenAI must balance multiple constituencies: researchers expecting free access for academic work, casual users wanting to explore AI capabilities, businesses requiring reliable service, and investors demanding a path to profitability. Current limits represent an uneasy compromise that satisfies none fully but maintains service viability. As infrastructure costs potentially decrease with technological advances, limits might relax, but growing demand could equally drive further restrictions.

Optimization Strategies That Actually Work

Maximizing your ChatGPT image upload capacity requires moving beyond simple tips to implementing systematic strategies that work with, not against, the platform's limitations. Successful users have developed sophisticated approaches that can effectively double or triple their usable capacity through smart planning and technical optimization. These strategies, refined through community experience and technical analysis, provide practical solutions to the daily frustration of hitting upload limits.

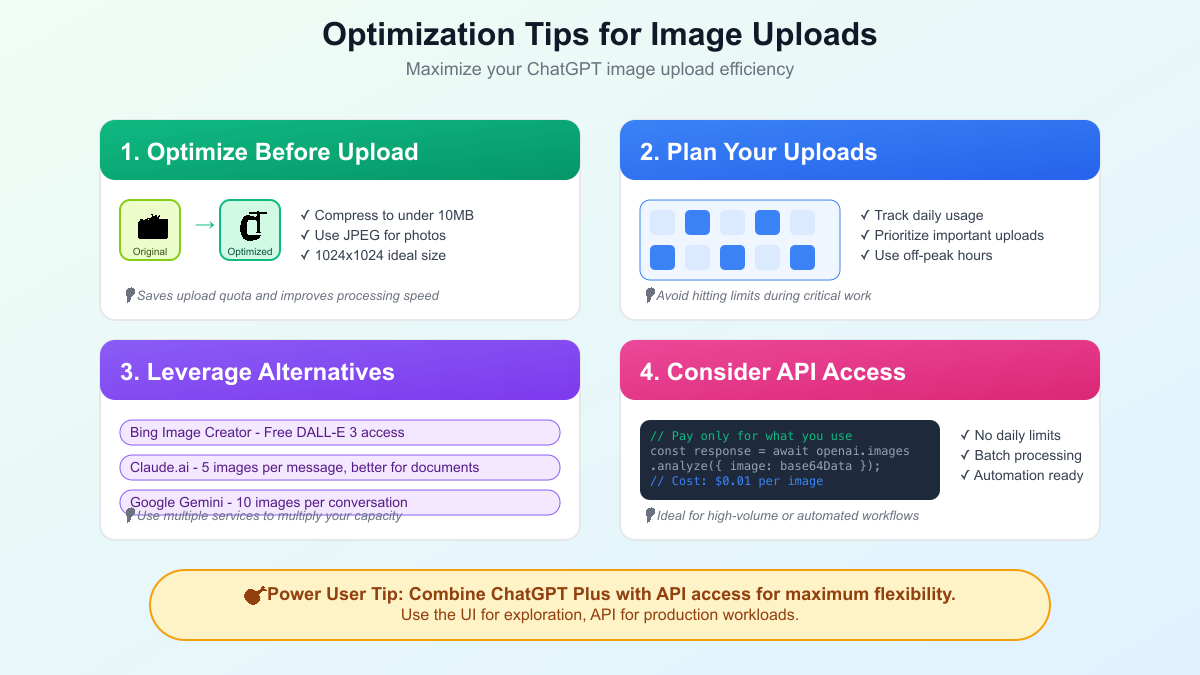

Image preprocessing stands as the foundational optimization technique that every user should master. Before uploading any image, reduce its dimensions to the sweet spot of 1024x1024 pixels—large enough for ChatGPT to analyze effectively but small enough to process quickly and efficiently. Compression tools like TinyPNG or Squoosh can reduce file sizes by 60-80% without visible quality loss. This preprocessing not only ensures reliable uploads but also speeds up processing time, allowing you to accomplish more within your quota. Users report that properly optimized images process 2-3x faster than raw uploads.
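The resize step comes down to one calculation: scale the image so its longer side is at most 1024 pixels while keeping the aspect ratio. Here is a dependency-free sketch of that calculation; `fit_within` is a hypothetical helper, and the 1024-pixel target is this guide's recommendation rather than a documented requirement.

```python
def fit_within(width: int, height: int, max_side: int = 1024):
    """Scale (width, height) down to fit a max_side x max_side box.

    Keeps the aspect ratio and never upscales images that already fit.
    """
    if width <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")
    scale = min(max_side / width, max_side / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    # A typical 12-megapixel phone photo and a small screenshot.
    print(fit_within(4032, 3024))
    print(fit_within(800, 600))
```

The resulting dimensions can be passed to whatever image tool you already use; Pillow's `Image.thumbnail((1024, 1024))`, for example, performs the same aspect-preserving downscale in place.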

Strategic timing transforms random upload patterns into predictable workflows. Plus subscribers should distribute uploads across their workday rather than clustering them during morning sessions. Upload 15-20 images per hour rather than exhausting your quota in one burst. This approach maintains continuous availability while aligning with natural work rhythms. Free tier users should time their 2 daily uploads for maximum impact—one during morning planning and one for afternoon verification, ensuring critical tasks receive AI assistance.

Multi-modal approaches reduce reliance on image uploads while maintaining analytical capabilities. Instead of uploading multiple screenshots to debug code, upload one representative image and provide detailed text descriptions for variations. ChatGPT's ability to maintain context means you can reference previously uploaded images in subsequent queries without re-uploading. This technique proves particularly valuable for iterative design work or code debugging where changes are incremental.

Batch planning revolutionizes workflow efficiency for Plus subscribers. Rather than uploading images as needs arise, collect all materials requiring analysis and process them in planned sessions. Because the 80-file quota applies to any 3-hour span rather than to the day as a whole, budget it per window: for example, 30 for a first analysis session, 30 for follow-up work later in the same window, and 20 held in reserve for unexpected needs. This approach prevents the frustration of hitting limits during critical tasks while ensuring consistent availability throughout the workday.

Alternative service orchestration multiplies effective capacity without additional cost. Maintain accounts with Google Gemini (10 images per conversation), Claude.ai (5 images per message), and Bing Image Creator (free DALL-E access). Develop a routing strategy based on task requirements: use ChatGPT for complex analysis requiring conversation context, Gemini for quick image identification, Claude for document analysis, and Bing for creative tasks. This multi-platform approach can provide 50+ daily image analyses without hitting any single platform's limits.

Technical optimizations for power users include browser automation and API integration. Chrome extensions can track upload counts and predict availability windows. Bookmarklets can quickly compress and format images before upload. For developers, implementing a simple proxy that routes requests across multiple services based on availability provides seamless access. While these approaches require technical setup, they eliminate the constant mental overhead of quota management.
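The routing idea can be prototyped before any real integration exists. The sketch below only decides which service to use; it makes no network calls. Everything in it is hypothetical: the `Service` class, the task names, and especially the fallback order within each route, which is an assumption layered on the first-choice preferences this guide describes. The remaining counts are whatever you track yourself, since none of these platforms is known to expose them.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    remaining: int  # uploads you believe are still available (self-tracked)


# First choice per task follows this guide; the fallbacks are assumptions.
ROUTES = {
    "complex_analysis": ["chatgpt", "claude", "gemini"],
    "quick_identification": ["gemini", "chatgpt", "claude"],
    "document_analysis": ["claude", "chatgpt", "gemini"],
}


def pick_service(task, services):
    """Return the first service on the task's route with capacity, or None.

    Decrements the chosen service's remaining count as a side effect.
    """
    for name in ROUTES[task]:
        svc = services.get(name)
        if svc is not None and svc.remaining > 0:
            svc.remaining -= 1
            return name
    return None


if __name__ == "__main__":
    services = {
        "chatgpt": Service("chatgpt", 0),  # quota exhausted
        "gemini": Service("gemini", 10),
        "claude": Service("claude", 5),
    }
    print(pick_service("complex_analysis", services))
    print(pick_service("quick_identification", services))
```

Keeping the routes in a plain dictionary makes the policy easy to revise as each platform's limits change.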

Community-sourced strategies continue evolving as users discover new optimizations. Some users report success with "image stitching"—combining multiple related images into single uploads, then asking ChatGPT to analyze specific sections. Others leverage ChatGPT's improved memory to build persistent visual contexts that reduce re-upload needs. The key lies in viewing limits not as hard barriers but as design constraints that encourage creative problem-solving.

Best Alternative Services for Image Analysis

When ChatGPT's image upload limits prove insurmountable, a robust ecosystem of alternative AI services stands ready to fill the gap. Each platform offers unique advantages and trade-offs, creating opportunities for users to build multi-service workflows that transcend any single platform's limitations. Understanding these alternatives enables strategic decisions about when to use ChatGPT versus when to leverage other services for optimal results.

Google Gemini emerges as the most compelling free alternative, offering 10 images per conversation without daily limits. This generous allocation, combined with Google's computational infrastructure, provides reliable performance even during peak times. Gemini excels at straightforward image identification, OCR tasks, and visual question-answering. However, its conversational abilities lag behind ChatGPT, making it less suitable for complex analytical tasks requiring extended context. The integration with Google Workspace creates natural workflows for users already within Google's ecosystem.

Claude.ai by Anthropic targets professional users with superior document analysis capabilities. While limited to 5 images per message, Claude's strength lies in processing complex documents, PDFs, and mixed media content. Its ability to maintain context across long documents surpasses ChatGPT, making it ideal for research, legal review, or technical documentation analysis. The $20 monthly subscription matches ChatGPT Plus pricing while offering more predictable availability and clearer usage tracking.

Bing Image Creator provides an interesting backdoor to OpenAI's technology, offering free access to DALL-E 3 through Microsoft's infrastructure. While primarily focused on image generation rather than analysis, Bing's visual search capabilities complement its creative features. The weekly credit system (25 boosts) provides more flexibility than daily limits, allowing users to concentrate usage when needed. Integration with Microsoft Edge and Windows creates seamless workflows for PC users.

Meta AI represents the wild card in the image analysis landscape, currently offering unlimited uploads as part of Meta's aggressive market entry strategy. Available through WhatsApp, Instagram, and Messenger, Meta AI provides convenient access for casual users already within Meta's ecosystem. However, privacy concerns and the likelihood of future restrictions make it unsuitable as a primary solution. Best used as overflow capacity when other services hit limits.

Specialized alternatives serve niche requirements better than general-purpose platforms. For code screenshot analysis, Pieces for Developers offers superior IDE integration. Medical professionals might prefer specialized platforms with HIPAA compliance. Researchers benefit from tools like Mathpix for equation recognition or PlantNet for botanical identification. These focused tools often provide unlimited usage within their narrow domains.

The emerging aggregator ecosystem deserves special attention for power users. Services like laozhang.ai provide unified APIs across multiple AI providers, handling the complexity of managing different services while presenting a single interface. These platforms offer compelling value propositions: simplified integration, consolidated billing, and automatic failover between providers. For developers building AI-powered applications, aggregators eliminate the need to implement multiple provider integrations independently.

Building an effective multi-service strategy requires matching platform strengths to specific use cases. Use ChatGPT for complex analytical tasks benefiting from conversational context. Route simple identifications to Gemini's generous free tier. Send document analysis to Claude for superior accuracy. Leverage specialized tools for domain-specific needs. This orchestrated approach can provide virtually unlimited image analysis capacity while optimizing for cost and capability.

API Access: When It Makes Sense

The decision to implement API access represents a fundamental shift from consumer-friendly interfaces to developer-oriented solutions. While ChatGPT's web interface frustrates with opaque limits and rolling windows, the API offers transparent pay-per-use pricing and programmatic control. However, this power comes with technical complexity and different economic considerations that make it suitable for specific use cases rather than general users.

API pricing appears straightforward at $0.01 per image analysis, but real costs quickly compound with usage. A modest workflow analyzing 100 images daily translates to $30 monthly—already exceeding ChatGPT Plus subscription costs. Heavy users processing 500+ images daily face $150+ monthly bills, approaching Pro tier pricing without its benefits. The economic crossover point where API becomes cost-effective typically occurs only for highly variable usage patterns or when programmatic access provides significant workflow advantages.
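The break-even point follows directly from these numbers. The sketch below reproduces the arithmetic using the approximate $0.01 per image quoted in this guide and a 30-day month; both are assumptions, and actual API pricing depends on model and image size.

```python
PRICE_PER_IMAGE = 0.01  # USD, approximate figure quoted in this guide
PLUS_MONTHLY = 20.0     # USD, ChatGPT Plus subscription
DAYS_PER_MONTH = 30     # simplifying assumption


def monthly_api_cost(images_per_day, price=PRICE_PER_IMAGE, days=DAYS_PER_MONTH):
    """Estimated monthly API spend for a steady daily volume."""
    return images_per_day * price * days


def break_even_images_per_day(subscription, price=PRICE_PER_IMAGE, days=DAYS_PER_MONTH):
    """Daily volume at which API spend equals the subscription price."""
    return subscription / (price * days)


if __name__ == "__main__":
    for volume in (50, 100, 500):
        print(volume, "images/day ->", round(monthly_api_cost(volume), 2), "USD/month")
    print("break-even vs Plus:", round(break_even_images_per_day(PLUS_MONTHLY), 1), "images/day")
```

Under these assumptions the crossover sits at roughly 67 images per day: below that, pay-per-use costs less than Plus; above it, the subscription is cheaper as long as its rolling-window limit does not get in the way.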

Technical implementation presents barriers that filter out casual users. API integration requires programming knowledge, secure credential management, and error handling logic. Unlike the web interface's forgiving conversation context, API calls must be precisely formatted with proper base64 encoding for images. Rate limits apply per minute rather than over a rolling 3-hour window, requiring queue management for burst processing. These technical requirements mean API access suits developers and technical teams rather than general business users.
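To make the formatting requirement concrete, here is a sketch that builds a request body with a base64-encoded image embedded as a data URL. It follows the Chat Completions image-input shape as commonly documented, but treat the structure as something to verify against the current API reference. The model name is a placeholder, `build_vision_payload` is a hypothetical helper, and no request is actually sent.

```python
import base64
import json


def build_vision_payload(image_bytes, mime_type, prompt, model="gpt-4o"):
    """Build a JSON-serializable request body with an inline base64 image.

    `model` is a placeholder; substitute whichever vision-capable model
    your account can use.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    fake_image = b"\x89PNG\r\n\x1a\n" + b"\x00" * 16  # stand-in bytes, not a real image
    payload = build_vision_payload(fake_image, "image/png", "Describe this image.")
    print(json.dumps(payload, indent=2)[:300])
```

Base64 inflates the data by about one third, so an image close to a size limit on disk can exceed it once encoded.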

The compelling use cases for API access center on automation and integration possibilities. E-commerce platforms can automatically analyze product images for quality control. Content moderation systems can screen uploads before publication. Development teams can integrate visual debugging directly into their CI/CD pipelines. These scenarios leverage API strengths: predictable costs, programmatic control, and seamless integration with existing systems.

For users seeking middle ground between ChatGPT's interface and direct API complexity, services like laozhang.ai offer compelling alternatives. These API aggregators provide simplified integration, often with better pricing through volume discounts. They handle authentication complexity, provide unified interfaces across multiple AI providers, and offer features like automatic failover. For many use cases, aggregators deliver API benefits without full implementation complexity.

The decision framework for API adoption should consider multiple factors beyond simple cost comparison. Evaluate whether your use case requires programmatic access or if interface-based solutions suffice. Calculate total costs including development time, not just per-image pricing. Consider whether usage patterns are predictable enough for subscription models or variable enough to benefit from pay-per-use. Factor in technical team capabilities and ongoing maintenance requirements.

Real implementation experiences reveal important nuances. API responses lack the conversational refinement of ChatGPT's interface, sometimes requiring prompt engineering to achieve comparable results. Image size limitations (4MB base64 encoded) prove more restrictive than the web interface's 20MB limit, and because base64 encoding inflates data by about one third, that ceiling corresponds to roughly 3MB of raw image data. Error handling becomes critical, as failed API calls can still incur costs. Success requires treating API integration as a development project rather than a simple service switch.
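
A quick pre-flight check can catch oversized images before a call is made. The sketch below assumes the 4MB encoded limit quoted above and treats a megabyte as 1024 × 1024 bytes; both are assumptions to adjust against the limits you actually observe.

```python
ENCODED_LIMIT_BYTES = 4 * 1024 * 1024  # 4MB figure from this guide (MB read as MiB)


def base64_length(raw_bytes: int) -> int:
    """Length of the padded base64 encoding of `raw_bytes` bytes."""
    return 4 * ((raw_bytes + 2) // 3)


def fits_encoded_limit(raw_bytes: int, limit: int = ENCODED_LIMIT_BYTES) -> bool:
    """True if a file of this raw size stays within the encoded limit."""
    return base64_length(raw_bytes) <= limit


def max_raw_bytes(limit: int = ENCODED_LIMIT_BYTES) -> int:
    """Largest raw file size whose base64 form still fits in `limit`."""
    return (limit // 4) * 3
```

Under these assumptions `max_raw_bytes()` comes out at 3 × 1024 × 1024 bytes, which is why a file that looks comfortably under 4MB on disk can still be rejected once encoded.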

Tracking and Managing Your Usage

The absence of visible usage counters in ChatGPT transforms quota management from a simple task into a complex challenge requiring external tracking systems. Successful users develop sophisticated monitoring approaches that provide visibility into their consumption patterns, predict availability windows, and optimize upload timing. These systems range from simple manual logs to automated solutions that transform the user experience.

Manual tracking methods provide immediate improvement over flying blind. Create a simple spreadsheet with columns for timestamp, number of images, and calculated expiration time (timestamp + 3 hours for Plus users). Each upload gets logged with precise time, creating a running record of when slots become available. This basic approach, while requiring discipline, provides complete visibility into usage patterns and availability windows. Users report that manual tracking, despite its tedium, fundamentally changes how they interact with the platform—transforming reactive frustration into proactive planning.
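
The spreadsheet logic translates directly into a small script. This sketch assumes the Plus figures used throughout this guide (80 files, 3-hour window) and assumes each file counts against the quota for exactly three hours after its upload time; the function names are illustrative.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(hours=3)  # Plus rolling window described in this guide
QUOTA = 80                   # files per window, per this guide


def active_uploads(log, now):
    """Timestamps from `log` (one entry per file) still inside the window."""
    return sorted(t for t in log if now - t < WINDOW)


def remaining_quota(log, now):
    """How many more files can be uploaded right now."""
    return max(0, QUOTA - len(active_uploads(log, now)))


def next_free_slot(log, now):
    """When at least one slot is free: `now` if under quota, otherwise
    the moment enough old uploads have aged out of the window."""
    active = active_uploads(log, now)
    if len(active) < QUOTA:
        return now
    # The upload at this index must expire to bring the count below QUOTA.
    return active[len(active) - QUOTA] + WINDOW
```

Appending `datetime.now()` to the log once per uploaded file is enough to keep `remaining_quota` and `next_free_slot` current.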

Browser-based solutions offer automation without complex setup. Bookmarklets can inject tracking interfaces directly into ChatGPT's interface, maintaining counts and predicting availability. Browser extensions like "ChatGPT Upload Tracker" provide floating widgets showing remaining quota and time until next available slot. These tools typically store data locally, preserving privacy while offering convenience. The psychological benefit of seeing exact availability proves as valuable as the functional tracking itself.

Advanced users implement comprehensive monitoring systems using automation tools. Zapier or Make.com workflows can automatically log uploads to Google Sheets, send notifications when quotas approach limits, and even distribute usage across multiple accounts. Python scripts running locally can provide desktop notifications when upload windows open. These sophisticated approaches require technical setup but eliminate mental overhead entirely.

Data analysis of tracking logs reveals valuable optimization opportunities. Visualizing usage patterns often exposes inefficiencies: clustering during specific hours, wasted uploads on failed attempts, or underutilization during available windows. Users frequently discover they are using only 60-70% of their available quota because of poor timing. Armed with data, workflows can be restructured to align with natural availability patterns, effectively increasing usable capacity without changing quotas.
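
Spotting hourly clustering does not require a charting tool. As a minimal sketch, assuming the log is a list of `datetime` objects with one entry per upload, a counter per hour of day already shows where usage piles up:

```python
from collections import Counter
from datetime import datetime


def uploads_by_hour(timestamps):
    """Count logged uploads per hour of day (0-23)."""
    return Counter(t.hour for t in timestamps)


def busiest_hours(timestamps, top=3):
    """Hours with the most uploads, highest first; ties broken by hour."""
    counts = uploads_by_hour(timestamps)
    return sorted(counts, key=lambda h: (-counts[h], h))[:top]
```

If the busiest hours account for most of the log, spreading uploads into the quieter hours is the first adjustment worth trying.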

Predictive modeling takes tracking to the next level. By analyzing historical usage patterns, users can forecast future availability with surprising accuracy. Simple moving averages predict typical daily consumption. More sophisticated approaches use machine learning to account for weekly patterns, project deadlines, and seasonal variations. While overkill for casual users, professionals managing critical workflows find predictive modeling invaluable for capacity planning.
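
The simple moving average mentioned above fits in one function. This sketch assumes a list of daily upload counts, oldest first, and a seven-day window chosen purely for illustration.

```python
def moving_average_forecast(daily_counts, window=7):
    """Forecast tomorrow's uploads as the mean of the last `window` days."""
    if not daily_counts:
        raise ValueError("need at least one day of history")
    recent = daily_counts[-window:]
    return sum(recent) / len(recent)
```

Comparing the forecast against your quota gives an early warning on days when a heavy workload is likely to hit the limit.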

The most effective tracking systems share common characteristics: they're frictionless to use, provide real-time visibility, and integrate naturally with existing workflows. Whether choosing manual logs or automated systems, the key lies in consistent implementation. The investment in setting up tracking pays dividends through reduced frustration, improved planning, and effective capacity utilization that can feel like a quota increase.

Workarounds and Creative Solutions

The ChatGPT user community has developed an impressive array of workarounds that push the boundaries of what's possible within platform limitations. These creative solutions, refined through collective experimentation, demonstrate human ingenuity when faced with artificial constraints. While respecting terms of service, these approaches maximize available resources through clever optimization rather than system exploitation.

Image stitching emerges as one of the most effective techniques for multiplying analysis capacity. Rather than uploading four separate screenshots, users combine them into a single 2x2 grid image, then ask ChatGPT to analyze specific quadrants. This approach works particularly well for comparing variations, showing process steps, or presenting multiple examples. Advanced practitioners create templates with labeled sections, making it easy for ChatGPT to reference specific areas. This technique can effectively quadruple image capacity for suitable use cases.
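
The layout step of stitching can be sketched without any imaging library. The helper below is illustrative and assumes all tiles share the same dimensions; it only computes where each tile goes, and an imaging library such as Pillow would then create the canvas and paste each tile at its offset.

```python
def grid_layout(tile_width, tile_height, count, columns=2):
    """Return the canvas size and top-left paste offset for each tile.

    Tiles are placed row by row, left to right.
    """
    rows = -(-count // columns)  # ceiling division
    canvas = (columns * tile_width, rows * tile_height)
    offsets = [
        ((i % columns) * tile_width, (i // columns) * tile_height)
        for i in range(count)
    ]
    return canvas, offsets
```

For four 800x600 screenshots this yields a 1600x1200 canvas with the tiles in top-left, top-right, bottom-left, bottom-right order, which matches the quadrant names you would use in the prompt.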

Descriptive preprocessing flips the traditional workflow, using ChatGPT's text capabilities to reduce image dependencies. Instead of uploading multiple product photos for analysis, upload one representative image and provide detailed text descriptions of variations. ChatGPT's impressive ability to maintain context means it can extrapolate from the single visual reference combined with textual details. This hybrid approach proves particularly effective for design iterations, code debugging, or any scenario with incremental changes.

Time zone arbitrage leverages global usage patterns for optimal availability. ChatGPT's servers experience predictable load variations following global work patterns. Users report significantly better availability during 2-6 AM Pacific Time, when most North American users are offline, although this window falls between late morning and early afternoon in Europe. Scheduling non-urgent batch processing during these windows tends to give smoother uploads and faster processing. Some dedicated users even adjust their sleep schedules to work during optimal windows, a testament to how severely limits impact workflows.

Collaborative quota sharing within teams creates multiplied capacity through coordination. Organizations implement "image request" systems where team members submit needs to designated uploaders who manage quotas efficiently. Shared tracking spreadsheets enable real-time coordination, preventing simultaneous limit hitting. Some teams rotate primary users daily, ensuring everyone has access when needed while maintaining aggregate capacity. This approach requires trust and communication but effectively multiplies available resources.

Context preservation techniques minimize re-upload needs through strategic conversation management. Within a conversation, previously uploaded images stay in context and can be referenced again without re-uploading; across conversations, ChatGPT's memory features may retain details from earlier analysis, though not the images themselves. Users develop "visual libraries" within conversations, uploading reference sets that can be invoked later through description. This approach requires careful conversation management but can extend effective capacity by 30-50% for suitable workflows.

The most creative solutions often combine multiple techniques. One marketing team developed a system using image stitching for initial concepts, descriptive preprocessing for iterations, collaborative sharing for final reviews, and time-zone optimization for batch processing. This multi-faceted approach transformed their workflow from constant limit frustration to smooth operation within existing constraints. The key insight: viewing limits as design constraints rather than barriers encourages innovative problem-solving that often improves overall workflow efficiency.

Future of ChatGPT Image Limits

Historical patterns in ChatGPT's limit evolution reveal clear trajectories that suggest future directions. The initial generous allowances followed by progressive restrictions mirror classic platform monetization strategies. Understanding these patterns, combined with competitive pressures and technical developments, enables informed predictions about where image upload limits are headed and how users should prepare.

Short-term projections for the next six months suggest modest relaxation of Plus tier limits, possibly increasing to 100 files per 3-hour window. This increment would address user frustration while maintaining clear differentiation from Pro tier. Free tier limits will likely remain at 2 images daily or potentially decrease further to 1 image, completing the transition to a pure demonstration tier. API pricing might see reductions to match competitive pressure, possibly dropping to $0.008 per image as infrastructure costs decrease.

Medium-term evolution over 12-18 months points toward fundamental restructuring of the limit system. The confusing rolling window will likely yield to clearer daily or hourly quotas that users can easily understand and plan around. Expect introduction of "overflow" pricing—allowing Plus users to purchase additional capacity on-demand without upgrading tiers. This hybrid model satisfies power users experiencing occasional spikes while maintaining predictable revenue streams.

Competitive dynamics will significantly influence limit evolution. As Google, Anthropic, and Meta mature their offerings, OpenAI faces pressure to match or exceed competitor allowances. However, the industry trend toward sustainable business models suggests all platforms will eventually implement similar restrictions. The current period of generous competitor limits represents customer acquisition strategies rather than long-term positioning. Users should expect industry-wide standardization around sustainable limit levels.

Technical advancements offer hope for expanded limits without proportional cost increases. Improved model efficiency, specialized hardware, and optimized inference pipelines continuously reduce per-image processing costs. However, demand growth typically outpaces efficiency gains. The democratization of AI image analysis drives exponential usage growth that infrastructure struggles to match. Net result: modest limit increases lagging behind user demand growth.

Strategic recommendations for users center on building platform-agnostic workflows. Develop systems that can seamlessly switch between providers as limits and pricing evolve. Invest in learning prompt engineering techniques that maximize single-image analysis value. Build tracking and automation systems that work across platforms. Most importantly, view current generous competitor offerings as temporary advantages to be leveraged while available.

The long-term equilibrium will likely see ChatGPT Plus stabilizing around 150-200 images daily, Free tier offering 5-10 images, and API pricing reaching $0.005 per image. These levels balance sustainability with usability, allowing serious work while encouraging paid upgrades. Users who prepare for this reality by optimizing workflows and diversifying platform usage will navigate the transition smoothly. Those expecting return to early generous limits will face continued disappointment as the industry matures toward sustainable models.

Quick Reference Guide

Essential information for managing ChatGPT image upload limits, distilled into immediately actionable reference material:

Current Limits by Tier:

- Free: 2 images per day, reset at midnight UTC

- Plus: 80 files per 3-hour rolling window, 20MB per image

- Pro: Enhanced limits (unspecified), minimal waiting

- API: ~$0.01 per image, rate limits vary by tier

Error Message Solutions:

- "You've reached your file upload limit" → Plus: wait until the oldest upload leaves the 3-hour rolling window; Free: wait for the midnight UTC reset

- "Upload failed" → Reduce file size, check format, retry during off-peak hours

- "File size exceeds limit" → Compress below the 20MB per-image cap (under 10MB leaves headroom) using TinyPNG

- "Unsupported format" → Convert to JPEG or PNG, strip metadata

Optimization Checklist:

- Preprocess images to 1024x1024 pixels

- Compress files to under 10MB

- Distribute uploads across time windows

- Track usage in external spreadsheet

- Set up alternative service accounts
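
The first checklist item can be sketched as a small dimension calculation. It assumes "1024x1024" means fitting the image inside a 1024-pixel square while keeping its aspect ratio, which is one reasonable reading; the function name is illustrative, and the actual resampling would be done by an image editor or imaging library.

```python
def fit_within(width, height, max_side=1024):
    """Scale (width, height) down to fit a max_side square, keeping aspect ratio.

    Images that already fit are returned unchanged (never upscaled).
    """
    scale = min(1.0, max_side / max(width, height))
    return max(1, round(width * scale)), max(1, round(height * scale))
```

A 4032x3024 phone photo, for example, would be resized to 1024x768 under this rule.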

Best Alternative Services:

- Quick analysis: Google Gemini (10 free images per conversation)

- Document processing: Claude.ai (5 images per message)

- Creative tasks: Bing Image Creator (25 weekly credits)

- API needs: laozhang.ai or direct OpenAI API

Key Formulas:

- Next available slot (Plus) = Time of oldest upload still in the window + 3 hours

- Daily API cost = Images per day × $0.01

- Effective capacity = (80 files ÷ 3 hours) × active hours ≈ 26.7 × active hours

Emergency Workflow:

- Hit ChatGPT limit → Switch to Gemini

- Gemini unavailable → Try Claude.ai

- Need API access → Consider laozhang.ai

- All else fails → Wait for rolling window reset
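
For anyone scripting this fallback order, the pattern is a simple chain. Everything in the sketch below is hypothetical: `QuotaExceeded` and the provider functions stand in for whatever client code you write for each service, and no real provider API is being described.

```python
class QuotaExceeded(Exception):
    """Raised by a provider function when its limit has been hit."""


def analyze_with_fallback(image_bytes, providers):
    """Try each (name, func) pair in order.

    Returns (name, result) from the first provider that succeeds and
    raises QuotaExceeded if every provider is out of quota.
    """
    errors = []
    for name, func in providers:
        try:
            return name, func(image_bytes)
        except QuotaExceeded as exc:
            errors.append(f"{name}: {exc}")
    raise QuotaExceeded("all providers exhausted: " + "; ".join(errors))
```

Listing the providers in the order shown above keeps the script's behavior aligned with the manual workflow, and reordering the list is all it takes to change priorities later.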

Conclusion

The journey through ChatGPT's image upload limits reveals a complex landscape where technical limitations, business pressures, and user needs collide. What began as a revolutionary capability—AI that could see and understand images with human-like comprehension—has evolved into a carefully rationed resource that requires strategic planning and creative workarounds to use effectively. The shift from abundance to scarcity marks a critical transition in AI service delivery that extends far beyond ChatGPT.

The most successful users are those who've adapted their workflows to work with, rather than against, these limitations. They've built tracking systems to monitor usage, developed preprocessing routines to maximize each upload, and created multi-platform strategies that leverage the entire AI ecosystem. These adaptations, born from necessity, often result in more efficient workflows than the original unrestricted approach. The constraints have forced a level of intentionality and optimization that improves overall productivity.

Looking ahead, the trajectory is clear: limits are here to stay and will likely become standard across all AI platforms as the industry matures. The current period of competitive generosity from Google, Anthropic, and others represents a temporary land grab rather than sustainable positioning. Users who build flexible, platform-agnostic workflows today will navigate tomorrow's restrictions smoothly. Those hoping for a return to unlimited access will face continued disappointment.

The practical path forward involves accepting limits as a design constraint while maximizing available resources through smart optimization. Start tracking your usage today—even simple logging dramatically improves quota efficiency. Experiment with alternative services to understand their strengths and build fallback options. Most importantly, focus on extracting maximum value from each image upload through better prompts, preprocessing, and strategic timing.

ChatGPT's image capabilities remain transformative despite the frustrating limitations. The ability to analyze, understand, and discuss visual content with AI represents a fundamental advance in human-computer interaction. By mastering the strategies outlined in this guide—from technical optimizations to creative workarounds—you can maintain productive workflows within current constraints while preparing for future evolution. The limits may define the boundaries, but within those boundaries, remarkable possibilities still exist.